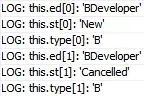

While trying to answer the question "Get List of Unique String per Column", we ran into a different problem with my dataset. When I import this CSV file into a DataFrame, every column has the object dtype. We need to convert the columns that contain only numbers to a numeric dtype, and the columns that do not to a string dtype.

Is there a way to achieve this?

Download the data sample from here

I tried the following code from the article "Pandas: change data type of columns", but it did not work:

df = pd.DataFrame(a, columns=['col1','col2','col3'])
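To make the goal concrete, here is a minimal sketch of the kind of conversion we are after. The sample values and the helper name `convert_columns` are made up for illustration and do not come from the real CSV. It assumes that a column counts as numeric only if every value parses with `pd.to_numeric`, and that the `"string"` dtype is available (pandas 1.0 or later).

```python
import pandas as pd

# Hypothetical sample: every column starts as object dtype,
# which is what happens with my CSV after import.
df = pd.DataFrame(
    {
        "col1": ["a", "b", "x"],
        "col2": ["1.2", "70", "5"],
        "col3": ["4.2", "0.03", "0"],
    },
    dtype=object,
)


def convert_columns(frame):
    """Return a copy where all-numeric columns are numeric and the rest are strings."""
    out = frame.copy()
    for name in out.columns:
        try:
            # Raises ValueError if any value in the column cannot be parsed as a number.
            out[name] = pd.to_numeric(out[name])
        except (ValueError, TypeError):
            # Not fully numeric: store the column with the dedicated string dtype.
            out[name] = out[name].astype("string")
    return out


converted = convert_columns(df)
print(converted.dtypes)
```

Note that with this approach a single non-numeric value makes the whole column a string column, which may or may not be what is wanted for messy data.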

As always, thanks for your help.