Unfortunately, you are going to have to benchmark this yourself, because the relative performance will depend critically on the actual String values, and also on the relative probability that you will test a string that is not in your mapping. And of course, it depends on how String.equals() and String.hashCode() are implemented, as well as the details of the HashMap and List classes used.

In the case of a HashMap, a lookup will typically involve calculating the hash of the key String, and then comparing the key String with one or more entry key Strings. The hashcode calculation looks at all characters of the String, so its cost depends on the length of the key String. The equals operation will typically examine all of the characters when it returns true, and considerably fewer when it returns false. The actual number of times that equals is called for a given key String depends on how the hashed key Strings are distributed. Normally, you'd expect an average of 1 or 2 calls to equals for a "hit" and maybe up to 3 for a "miss".
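To make the hash-then-compare sequence concrete, here is a minimal sketch of a chained hash table that counts its equals calls. It is a toy, not how java.util.HashMap is actually written; `ToyStringIntMap`, `bucketFor` and `equalsCalls` are names invented for this illustration, and keys are assumed to be distinct.

```java
import java.util.ArrayList;
import java.util.List;

// Toy chained hash table for illustration only; NOT java.util.HashMap.
final class ToyStringIntMap {
    private static final class Entry {
        final String key;
        final int value;
        Entry(String key, int value) { this.key = key; this.value = value; }
    }

    private final List<List<Entry>> buckets = new ArrayList<>();
    int equalsCalls; // total String.equals calls made by get()

    ToyStringIntMap(int bucketCount) {
        for (int i = 0; i < bucketCount; i++) {
            buckets.add(new ArrayList<>());
        }
    }

    private List<Entry> bucketFor(String key) {
        // Step 1: hash the key, then reduce the hash to a bucket index.
        return buckets.get(Math.floorMod(key.hashCode(), buckets.size()));
    }

    void put(String key, int value) {
        bucketFor(key).add(new Entry(key, value)); // assumes distinct keys
    }

    Integer get(String key) {
        // Step 2: compare against each entry that landed in the same bucket.
        for (Entry e : bucketFor(key)) {
            equalsCalls++;
            if (e.key.equals(key)) {
                return e.value; // hit
            }
        }
        return null; // miss: every entry in the bucket was compared
    }
}
```

Populating it with your real keys and reading `equalsCalls` after a batch of lookups gives a rough idea of how evenly those keys spread across the buckets.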

In the case of a List, a lookup will call equals for an average of half the entry key Strings in the case of a "hit" and all of them in the case of a "miss". If you know the relative distribution of the keys that you are looking up, you can improve the performance in the "hit" case by ordering the list so that the most frequently requested keys come first. But the "miss" case cannot be optimized.
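The hit and miss costs for a list can be sketched with a hand-written linear scan that counts comparisons. `ListLookup` and its `comparisons` counter are hypothetical names for this example, and the key order in the usage note is an assumed frequency ordering, not measured data.

```java
import java.util.List;

// Illustrative linear scan; roughly what List.indexOf does, plus a counter.
final class ListLookup {
    static int comparisons; // total String.equals calls so far

    static int indexOf(List<String> keys, String key) {
        for (int i = 0; i < keys.size(); i++) {
            comparisons++;
            if (keys.get(i).equals(key)) {
                return i; // hit: stops at the first match
            }
        }
        return -1; // miss: every entry was compared
    }
}
```

With the keys `"get", "put", "delete", "patch"` in that order, looking up `"get"` costs one comparison, while looking up an absent key such as `"trace"` costs four, whatever the ordering.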

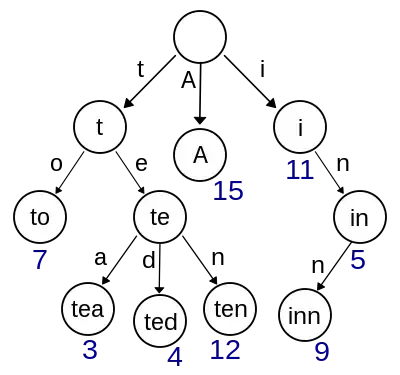

In addition to the trie alternative suggested by @aioobe, you could also implement a specialized String to integer hashmap using a so-called perfect hash function. This maps each of the actual key strings to a unique hash within a small range. The hash can then be used to index an array of key/value pairs. This reduces a lookup to exactly one call to the hash function and one call to String.equals. (And if you can assume that the supplied key will always be one of the mapped strings, you can dispense with the call to equals.)

The difficulty of the perfect hash approach is in finding a function that works for the set of keys in the mapping and is not too expensive to compute. AFAIK, this has to be done by trial and error.
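As a rough sketch of the trial-and-error search, the class below tries successive seeds for a simple xor-and-multiply string hash until every key lands in its own slot. The class name `PerfectHashTable`, the hash function, the table size of twice the key count and the limit of one million attempts are all assumptions made for this example; a hand-tuned function for a specific key set could be much cheaper to compute.

```java
// Illustrative perfect-hash lookup table; all names and constants are assumptions.
final class PerfectHashTable {
    private static final int MAX_ATTEMPTS = 1_000_000;

    private final String[] keys;
    private final int[] values;
    private final int seed;

    PerfectHashTable(String[] inputKeys, int[] inputValues) {
        int size = Math.max(1, 2 * inputKeys.length); // spare slots make a fit likelier
        String[] foundKeys = null;
        int[] foundValues = null;
        int foundSeed = 0;
        // Trial and error: keep the first seed that produces no collisions.
        for (int candidate = 1; foundKeys == null && candidate <= MAX_ATTEMPTS; candidate++) {
            String[] k = new String[size];
            int[] v = new int[size];
            if (fill(inputKeys, inputValues, candidate, k, v)) {
                foundKeys = k;
                foundValues = v;
                foundSeed = candidate;
            }
        }
        if (foundKeys == null) {
            throw new IllegalStateException("no collision-free seed found");
        }
        keys = foundKeys;
        values = foundValues;
        seed = foundSeed;
    }

    private static boolean fill(String[] inKeys, int[] inValues, int seed,
                                String[] k, int[] v) {
        for (int i = 0; i < inKeys.length; i++) {
            int s = slot(inKeys[i], seed, k.length);
            if (k[s] != null) {
                return false; // two keys share a slot: this seed is not perfect
            }
            k[s] = inKeys[i];
            v[s] = inValues[i];
        }
        return true;
    }

    private static int slot(String key, int seed, int size) {
        int h = seed;
        for (int i = 0; i < key.length(); i++) {
            h = (h ^ key.charAt(i)) * 16777619; // xor-then-multiply mixing step
        }
        h ^= h >>> 16;
        return Math.floorMod(h, size);
    }

    // One hash computation and at most one equals call per lookup.
    Integer get(String key) {
        int s = slot(key, seed, keys.length);
        if (key.equals(keys[s])) {
            return values[s];
        }
        return null; // slot is empty or holds a different key
    }
}
```

If every supplied key is known to be one of the mapped strings, `get` could return `values[s]` directly and skip the equals call.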

But the reality is that simply using a HashMap is a safe option, because it gives O(1) performance with a relatively small constant of proportionality (unless the entry keys are pathological).

(FWIW, my guess is that the break-even point where HashMap.get() becomes better than List.contains() is less than 10 entries, assuming that the strings have an average length of 5 to 10.)
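To check that guess against your own keys, a crude timing loop along these lines is a starting point. The class `LookupTiming`, the synthetic 7-character keys, the 50/50 hit-to-miss mix and the round count are all assumptions; substitute your real keys and miss rate. Hand-rolled timings like this are easily distorted by JIT compilation and garbage collection, so treat the numbers as indicative only and consider a proper harness such as JMH for anything you need to rely on.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Rough timing sketch; keys, sizes and round counts are placeholders.
final class LookupTiming {
    static int hitsInList(List<String> keys, String[] probes) {
        int hits = 0;
        for (String p : probes) {
            if (keys.contains(p)) hits++; // linear scan using String.equals
        }
        return hits;
    }

    static int hitsInMap(Map<String, Integer> map, String[] probes) {
        int hits = 0;
        for (String p : probes) {
            if (map.get(p) != null) hits++; // hash, then equals within the bucket
        }
        return hits;
    }

    public static void main(String[] args) {
        for (int n = 2; n <= 32; n *= 2) {
            List<String> list = new ArrayList<>();
            Map<String, Integer> map = new HashMap<>();
            for (int i = 0; i < n; i++) {
                String key = "key" + (1000 + i); // 7-character synthetic keys
                list.add(key);
                map.put(key, i);
            }
            // First n probes are hits, the next n are misses.
            // Note: the probe Strings are reused every round, so a cached
            // hash code (as in OpenJDK's String) favours the map here.
            String[] probes = new String[2 * n];
            for (int i = 0; i < probes.length; i++) {
                probes[i] = "key" + (1000 + i);
            }
            int rounds = 50_000;
            int sink = 0; // keeps the results live so the loops are not optimized away
            for (int r = 0; r < rounds; r++) { // warm-up before timing
                sink += hitsInList(list, probes) + hitsInMap(map, probes);
            }
            long t0 = System.nanoTime();
            for (int r = 0; r < rounds; r++) sink += hitsInList(list, probes);
            long t1 = System.nanoTime();
            for (int r = 0; r < rounds; r++) sink += hitsInMap(map, probes);
            long t2 = System.nanoTime();
            System.out.printf("n=%d list=%d us, map=%d us (sink=%d)%n",
                    n, (t1 - t0) / 1_000, (t2 - t1) / 1_000, sink);
        }
    }
}
```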