I am trying to port the MATLAB implementation of MTCNN_face_detection_alignment to Python. I use the same version of the Caffe bindings for MATLAB and for Python.

Minimal runnable code to reproduce the issue:

MATLAB:

```matlab
addpath('f:/Documents/Visual Studio 2013/Projects/caffe/matlab');
warning off all

caffe.reset_all();
%caffe.set_mode_cpu();
caffe.set_mode_gpu();
caffe.set_device(0);

prototxt_dir = './model/det1.prototxt';
model_dir = './model/det1.caffemodel';
PNet=caffe.Net(prototxt_dir,model_dir,'test');

img=imread('F:/ImagesForTest/test1.jpg');
[hs ws c]=size(img)
im_data=(single(img)-127.5)*0.0078125;

PNet.blobs('data').reshape([hs ws 3 1]);
out=PNet.forward({im_data});
imshow(out{2}(:,:,2))
```

Python:

```python
import numpy as np
import caffe
import cv2

caffe.set_mode_gpu()
caffe.set_device(0)

model = './model/det1.prototxt'
weights = './model/det1.caffemodel'
PNet = caffe.Net(model, weights, caffe.TEST)  # create net and load weights

print("\n\n----------------------------------------")
print("------------- Network loaded -----------")
print("----------------------------------------\n")

img = np.float32(cv2.imread('F:/ImagesForTest/test1.jpg'))
img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
avg = np.array([127.5, 127.5, 127.5])
img = img - avg
img = img * 0.0078125
img = img.transpose((2, 0, 1))
img = img[None, :]  # add singleton dimension

PNet.blobs['data'].reshape(1, 3, img.shape[2], img.shape[3])
out = PNet.forward_all(data=img)

cv2.imshow('out', out['prob1'][0][1])
cv2.waitKey()
```

The model I use (det1.prototxt and det1.caffemodel) is located here.

The image I used to get these results:

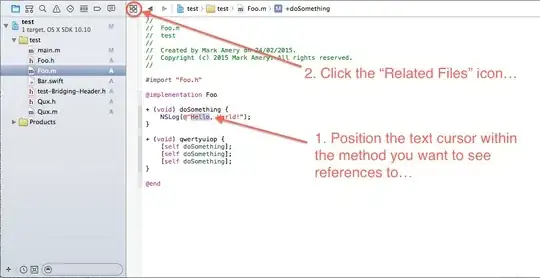

The results I get in both cases:

The results are similar, but not the same.

UPD: It does not look like a type-conversion problem (I corrected it, but nothing changed). I saved the output of the conv1 layer (first channel) in MATLAB and extracted the same data in Python; both images are now displayed with Python's cv2.imshow.
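Comparing the two conv1 maps visually can be misleading: cv2.imshow multiplies floating-point images by 255, so any activation outside [0, 1] renders as pure black or white. A numerical comparison makes "similar but not the same" precise. The following is a minimal sketch, assuming both maps have already been loaded into Python as 2-D arrays of the same shape (for example, saved in MATLAB with `save('conv1_matlab.mat', 'c1')` and read back with `scipy.io.loadmat`). `compare_maps`, the file name, and the variable name `c1` are hypothetical.

```python
import numpy as np


def compare_maps(matlab_map, python_map, atol=1e-5):
    """Numerically compare two 2-D feature maps (hypothetical helper).

    matlab_map: e.g. the first conv1 channel saved from MATLAB.
    python_map: e.g. PNet.blobs['conv1'].data[0, 0] from pycaffe
                (assuming the blob is named 'conv1' as in the question).
    """
    a = np.asarray(matlab_map, dtype=np.float64)
    b = np.asarray(python_map, dtype=np.float64)
    if a.shape != b.shape:
        # A shape mismatch already points to a layout difference.
        raise ValueError(f"shape mismatch: {a.shape} vs {b.shape}")
    diff = np.abs(a - b)
    return {
        "max_abs_diff": float(diff.max()),
        "mean_abs_diff": float(diff.mean()),
        "allclose": bool(np.allclose(a, b, atol=atol)),
    }


# Synthetic demo; with real data this would be something like
# compare_maps(scipy.io.loadmat('conv1_matlab.mat')['c1'],
#              PNet.blobs['conv1'].data[0, 0])
print(compare_maps(np.zeros((2, 2)), np.array([[0.0, 0.0], [0.0, 0.5]])))
```

A large `max_abs_diff` on a layer whose input matches exactly means the two runs are not performing the same computation. Rounding noise would show up as differences of roughly 1e-6.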

The data on the input layer (`data`) are exactly the same; I checked this using the same method.
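An exact match of the input blobs is what the arithmetic predicts. Both snippets apply the same affine map to 8-bit pixel values, and 0.0078125 is a power of two:

$$
x' = (x - 127.5)\times 0.0078125 = \frac{x - 127.5}{2^{7}} = \frac{2x - 255}{2^{8}}, \qquad x \in \{0, 1, \dots, 255\},
$$

so $x' \in [-0.99609375,\ 0.99609375]$. Every such value is an odd multiple of $2^{-8}$ with a numerator of at most 255 in magnitude, which float32 represents exactly. The float64 intermediate in the Python code (introduced by subtracting the float64 `avg`) and MATLAB's `single` arithmetic should therefore give bit-identical values once they are stored in Caffe's single-precision blob. This is consistent with ruling out type conversion as the cause.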

As you can see, the difference is already visible at the first layer (conv1). It looks as if the kernels were transformed somehow.
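One thing worth ruling out is the memory layout. MatCaffe describes its blobs in [width, height, channels, num] order on top of MATLAB's column-major storage. Its own classification demo calls `permute(im_data, [2, 1, 3])` to "flip width and height" for this reason. As a result, `PNet.blobs('data').reshape([hs ws 3 1])` declares width = hs and height = ws. The numpy sketch below simulates that hand-off, assuming the MATLAB buffer reaches Caffe unchanged. `matcaffe_input_view` is a hypothetical helper and is not part of either binding.

```python
import numpy as np


def matcaffe_input_view(img_hwc):
    """Simulate what Caffe sees when an hs x ws x c MATLAB array is passed
    to a blob reshaped as [hs ws c 1] (assumed matcaffe convention:
    MATLAB dims [W H C N], column-major)."""
    hs, ws, c = img_hwc.shape
    # MATLAB memory order: the first index varies fastest (Fortran order).
    buf = np.asarray(img_hwc).ravel(order="F")
    # [W H C N] = [hs ws c 1] corresponds to Caffe's row-major
    # (N, C, H, W) = (1, c, ws, hs), so Caffe reads the buffer like this:
    return buf.reshape(1, c, ws, hs)[0]


img = np.arange(2 * 3 * 3, dtype=np.float32).reshape(2, 3, 3)  # H=2, W=3, C=3
seen = matcaffe_input_view(img)

# The MATLAB net's input equals the spatially transposed image,
# not the (C, H, W) layout built in the Python code.
print(np.array_equal(seen, img.transpose((2, 1, 0))))  # True
print(seen.shape, img.transpose((2, 0, 1)).shape)      # (3, 3, 2) (3, 2, 3)
```

If this is what happens, the MATLAB net convolves the transposed image with the unchanged weights from det1.caffemodel. Because the conv1 kernels are not symmetric, the outputs would look similar but not identical, and the kernels would appear "transformed". One way to test the hypothesis is to feed `img.transpose((2, 1, 0))` instead of `img.transpose((2, 0, 1))` in Python and compare the outputs numerically.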

Can anyone tell me where the difference is hidden?