Problem: Output Image is too large.

Original code:

```cpp
int N = image1.rows + image2.rows;
int M = image1.cols + image2.cols;

// cv::Size takes (width, height), i.e. (cols, rows).
warpPerspective(image1, result, H, cv::Size(M, N)); // Too big size.

// cv::Rect takes (x, y, width, height).
cv::Mat half(result, cv::Rect(0, 0, image2.cols, image2.rows));
image2.copyTo(half);

namedWindow("Result", WINDOW_AUTOSIZE);
imshow("Result", result);
waitKey(0);
```

The generated result image has as many rows as image1 and image2 combined (and as many columns as both combined). However, the output only needs to cover the union of the two images: the size of image1 plus image2 minus the overlapping area.

Another Problem

Why are you warping image1? Compute H' (the inverse of H) and warp image2 using H'. You should be registering image2 onto image1.
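In OpenCV you would simply call `H.inv()` (C++) or `Core.invert(H, Hinv)` (Java). To show what that computes, here is a plain-Java sketch of a 3×3 inverse via the adjugate; the class and method names are hypothetical and the matrix is assumed to be stored row-major as `double[3][3]`.

```java
/** Hypothetical helper: inverts a 3x3 homography stored row-major as double[3][3]. */
final class Homography3x3 {
    private Homography3x3() {}

    static double[][] invert(double[][] m) {
        // Cofactors of the first row; they also give the determinant.
        double c00 = m[1][1] * m[2][2] - m[1][2] * m[2][1];
        double c01 = m[1][2] * m[2][0] - m[1][0] * m[2][2];
        double c02 = m[1][0] * m[2][1] - m[1][1] * m[2][0];
        double det = m[0][0] * c00 + m[0][1] * c01 + m[0][2] * c02;
        if (Math.abs(det) < 1e-12) {
            throw new IllegalArgumentException("Homography is singular");
        }
        // inverse = adjugate / det, where the adjugate is the transposed cofactor matrix.
        double[][] inv = new double[3][3];
        inv[0][0] = c00 / det;
        inv[1][0] = c01 / det;
        inv[2][0] = c02 / det;
        inv[0][1] = (m[0][2] * m[2][1] - m[0][1] * m[2][2]) / det;
        inv[1][1] = (m[0][0] * m[2][2] - m[0][2] * m[2][0]) / det;
        inv[2][1] = (m[0][1] * m[2][0] - m[0][0] * m[2][1]) / det;
        inv[0][2] = (m[0][1] * m[1][2] - m[0][2] * m[1][1]) / det;
        inv[1][2] = (m[0][2] * m[1][0] - m[0][0] * m[1][2]) / det;
        inv[2][2] = (m[0][0] * m[1][1] - m[0][1] * m[1][0]) / det;
        return inv;
    }
}
```

For a pure translation by (100, 50), the inverse is the translation by (-100, -50), as expected.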

Also, study how warpPerspective works. It finds the region of interest (ROI) in result to which image2 will be warped. Next, for each pixel (x, y) in this ROI of result, it finds the corresponding location (x', y') in image2. Note that (x', y') can be real-valued, e.g. (4.5, 5.4).

Interpolation is then used to compute the pixel value at (x, y) in result from the neighbours of (x', y'); by default warpPerspective uses bilinear interpolation (INTER_LINEAR).
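The inverse-mapping loop described above can be sketched in plain Java for a grayscale image stored as `double[rows][cols]`. This is a simplified illustration with hypothetical names (black border, no ROI optimization), not OpenCV's implementation. Here `hInv` maps result coordinates back into image2; OpenCV's warpPerspective inverts the matrix you pass unless you set WARP_INVERSE_MAP.

```java
/** Hypothetical sketch of inverse mapping plus bilinear sampling, as warpPerspective does conceptually. */
final class InverseWarp {
    private InverseWarp() {}

    /** Bilinearly samples src at real-valued (x, y); returns 0 outside the image. */
    static double sampleBilinear(double[][] src, double x, double y) {
        int rows = src.length, cols = src[0].length;
        if (x < 0 || y < 0 || x > cols - 1 || y > rows - 1) {
            return 0.0; // outside the source image: leave the output pixel black
        }
        int x0 = (int) Math.floor(x), y0 = (int) Math.floor(y);
        int x1 = Math.min(x0 + 1, cols - 1), y1 = Math.min(y0 + 1, rows - 1);
        double fx = x - x0, fy = y - y0;
        // Interpolate along x on the two neighbouring rows, then along y.
        double top = (1 - fx) * src[y0][x0] + fx * src[y0][x1];
        double bottom = (1 - fx) * src[y1][x0] + fx * src[y1][x1];
        return (1 - fy) * top + fy * bottom;
    }

    /** For each output pixel (x, y), maps back to (x', y') = hInv * (x, y, 1) and samples src there. */
    static double[][] warp(double[][] src, double[][] hInv, int outRows, int outCols) {
        double[][] dst = new double[outRows][outCols];
        for (int y = 0; y < outRows; y++) {
            for (int x = 0; x < outCols; x++) {
                double w = hInv[2][0] * x + hInv[2][1] * y + hInv[2][2];
                if (Math.abs(w) < 1e-12) continue; // point maps to infinity
                double srcX = (hInv[0][0] * x + hInv[0][1] * y + hInv[0][2]) / w;
                double srcY = (hInv[1][0] * x + hInv[1][1] * y + hInv[1][2]) / w;
                dst[y][x] = sampleBilinear(src, srcX, srcY);
            }
        }
        return dst;
    }
}
```

For the 2×2 image `{{0, 10}, {20, 30}}`, sampling at (0.5, 0.5) averages all four pixels and gives 15.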

Next, how to find the size of the result matrix: don't use N and M. Instead, use H' to warp the corners of image2 and see where they end up; the result must cover those warped corners together with image1.
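A plain-Java sketch of that corner test, with hypothetical names. It assumes image1 sits at the origin, image2's corners are taken at (0,0), (w,0), (0,h), (w,h), and `h` is the 3×3 matrix that maps image2 into image1's frame (your H'). Note the division by the third homogeneous coordinate, which a perspective warp requires.

```java
/** Hypothetical helper: bounding box of image2's warped corners and the canvas size for both images. */
final class WarpedBounds {
    private WarpedBounds() {}

    /** Returns {minX, minY, maxX, maxY} of a width x height image's corners after applying h. */
    static double[] cornerBounds(double[][] h, double width, double height) {
        double[][] corners = {{0, 0}, {width, 0}, {0, height}, {width, height}};
        double minX = Double.POSITIVE_INFINITY, minY = Double.POSITIVE_INFINITY;
        double maxX = Double.NEGATIVE_INFINITY, maxY = Double.NEGATIVE_INFINITY;
        for (double[] c : corners) {
            double w = h[2][0] * c[0] + h[2][1] * c[1] + h[2][2];
            // Homogeneous -> Cartesian: divide by w.
            double x = (h[0][0] * c[0] + h[0][1] * c[1] + h[0][2]) / w;
            double y = (h[1][0] * c[0] + h[1][1] * c[1] + h[1][2]) / w;
            minX = Math.min(minX, x);
            maxX = Math.max(maxX, x);
            minY = Math.min(minY, y);
            maxY = Math.max(maxY, y);
        }
        return new double[] {minX, minY, maxX, maxY};
    }

    /** {width, height} of a canvas holding image1 (w1 x h1 at the origin) and image2 (w2 x h2) warped by h. */
    static int[] canvasSize(double[][] h, double w1, double h1, double w2, double h2) {
        double[] b = cornerBounds(h, w2, h2);
        double minX = Math.min(0, b[0]), minY = Math.min(0, b[1]);
        double maxX = Math.max(w1, b[2]), maxY = Math.max(h1, b[3]);
        return new int[] {(int) Math.ceil(maxX - minX), (int) Math.ceil(maxY - minY)};
    }
}
```

For example, with `h` a pure 1000-pixel horizontal shift and two 1500 × 1000 images (500 px of overlap), `canvasSize` returns {2500, 1000}: 1500 + 1500 - 500 columns, instead of the 3000 × 2000 that N and M would give.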

For transformation matrices, see this wiki and http://planning.cs.uiuc.edu/node99.html. Learn the difference between rotation, translation, affine, and perspective transformation matrices. Then read the OpenCV docs here.
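As a quick reference, these are the standard 3×3 forms in homogeneous coordinates (generic symbols, not copied from the linked pages): translation, rotation about the origin, affine, and perspective (homography). Only the perspective matrix has a non-trivial bottom row, which is why its output must be divided by the third coordinate.

$$
T = \begin{bmatrix} 1 & 0 & t_x \\ 0 & 1 & t_y \\ 0 & 0 & 1 \end{bmatrix},\quad
R = \begin{bmatrix} \cos\theta & -\sin\theta & 0 \\ \sin\theta & \cos\theta & 0 \\ 0 & 0 & 1 \end{bmatrix},\quad
A = \begin{bmatrix} a_{11} & a_{12} & t_x \\ a_{21} & a_{22} & t_y \\ 0 & 0 & 1 \end{bmatrix},\quad
H = \begin{bmatrix} h_{11} & h_{12} & h_{13} \\ h_{21} & h_{22} & h_{23} \\ h_{31} & h_{32} & h_{33} \end{bmatrix}
$$

$$
x' = \frac{h_{11}x + h_{12}y + h_{13}}{h_{31}x + h_{32}y + h_{33}}, \qquad
y' = \frac{h_{21}x + h_{22}y + h_{23}}{h_{31}x + h_{32}y + h_{33}}
$$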

You can also read an earlier answer of mine, which shows simple algebra for finding a crop area. You need to adapt that code to the four corners of both images. Note that pixels of the warped image can land at negative coordinates as well, so they must be shifted into the visible area of the result.
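One way to handle negative coordinates is to shift everything by the most negative corner: warp image1 with a translation T and image2 with T·H'. Below is a plain-Java sketch of building T and composing it with H'; the class and method names are hypothetical, and `minX`/`minY` are assumed to be the minimum corner coordinates computed from the warped corners and image1.

```java
/** Hypothetical helper: builds the translation that moves negative coordinates into view and composes it. */
final class CanvasShift {
    private CanvasShift() {}

    /** Translation that moves (minX, minY) to the origin; no shift along an axis whose minimum is >= 0. */
    static double[][] shiftToPositive(double minX, double minY) {
        double tx = minX < 0 ? -minX : 0;
        double ty = minY < 0 ? -minY : 0;
        return new double[][] {{1, 0, tx}, {0, 1, ty}, {0, 0, 1}};
    }

    /** 3x3 matrix product a * b (apply b first, then a). */
    static double[][] multiply(double[][] a, double[][] b) {
        double[][] r = new double[3][3];
        for (int i = 0; i < 3; i++) {
            for (int j = 0; j < 3; j++) {
                for (int k = 0; k < 3; k++) {
                    r[i][j] += a[i][k] * b[k][j];
                }
            }
        }
        return r;
    }
}
```

Usage: with `t = shiftToPositive(minX, minY)`, warp image1 with `t` and image2 with `multiply(t, hInv)`, both into the canvas size computed from the corner bounds.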

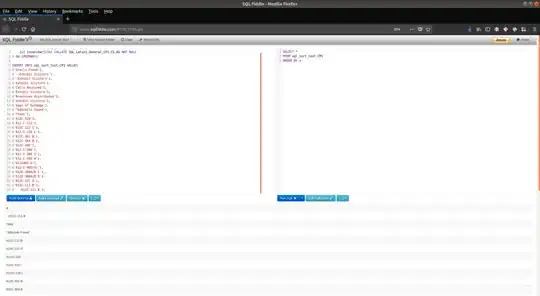

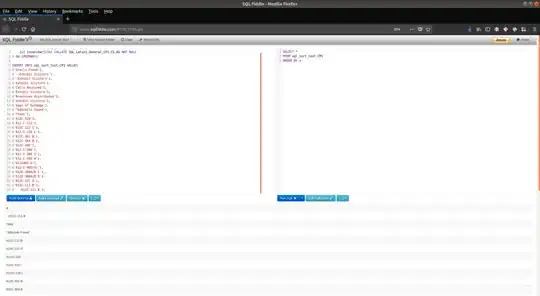

Sample code (in Java):

```java
import java.util.ArrayList;
import java.util.List;

import org.opencv.calib3d.Calib3d;
import org.opencv.core.Core;
import org.opencv.core.CvType;
import org.opencv.core.DMatch;
import org.opencv.core.KeyPoint;
import org.opencv.core.Mat;
import org.opencv.core.MatOfDMatch;
import org.opencv.core.MatOfKeyPoint;
import org.opencv.core.MatOfPoint2f;
import org.opencv.core.Point;
import org.opencv.core.Scalar;
import org.opencv.core.Size;
import org.opencv.features2d.DescriptorExtractor;
import org.opencv.features2d.DescriptorMatcher;
import org.opencv.features2d.FeatureDetector;
import org.opencv.features2d.Features2d;
import org.opencv.imgcodecs.Imgcodecs;
import org.opencv.imgproc.Imgproc;

// Uses the OpenCV 3.x Java API (FeatureDetector/DescriptorExtractor.create were removed in 4.x).
public class Driver {

    public static void stitchImages() {
        // Read both images as grayscale
        Mat grayImage1 = Imgcodecs.imread("current_00000.bmp", Imgcodecs.IMREAD_GRAYSCALE);
        Mat grayImage2 = Imgcodecs.imread("current_00001.bmp", Imgcodecs.IMREAD_GRAYSCALE);
        if (grayImage1.empty() || grayImage2.empty()) {
            System.out.println("Images read unsuccessful.");
            return;
        }

        // -- Step 1: Detect the keypoints using the AKAZE detector
        MatOfKeyPoint keypoints1 = new MatOfKeyPoint();
        MatOfKeyPoint keypoints2 = new MatOfKeyPoint();
        FeatureDetector detector = FeatureDetector.create(FeatureDetector.AKAZE);
        detector.detect(grayImage1, keypoints1);
        detector.detect(grayImage2, keypoints2);

        // -- Step 2: Calculate descriptors (feature vectors)
        DescriptorExtractor extractor = DescriptorExtractor.create(DescriptorExtractor.AKAZE);
        Mat descriptors1 = new Mat();
        Mat descriptors2 = new Mat();
        extractor.compute(grayImage1, keypoints1, descriptors1);
        extractor.compute(grayImage2, keypoints2, descriptors2);

        // -- Step 3: Match the keypoints. AKAZE descriptors are binary, so use Hamming distance.
        DescriptorMatcher matcher = DescriptorMatcher.create(DescriptorMatcher.BRUTEFORCE_HAMMING);
        MatOfDMatch matches = new MatOfDMatch();
        matcher.match(descriptors1, descriptors2, matches);
        List<DMatch> allMatches = matches.toList();

        // Filter good matches: keep those within 5x the smallest distance
        double minDist = Double.MAX_VALUE;
        for (DMatch m : allMatches) {
            minDist = Math.min(m.distance, minDist);
        }
        List<KeyPoint> keypoints1List = keypoints1.toList();
        List<KeyPoint> keypoints2List = keypoints2.toList();
        List<DMatch> goodMatches = new ArrayList<>();
        List<Point> img1GoodPointsList = new ArrayList<>();
        List<Point> img2GoodPointsList = new ArrayList<>();
        for (DMatch m : allMatches) {
            if (m.distance <= 5 * minDist) {
                goodMatches.add(m);
                img1GoodPointsList.add(keypoints1List.get(m.queryIdx).pt);
                img2GoodPointsList.add(keypoints2List.get(m.trainIdx).pt);
            }
        }
        System.out.println("best matches size: " + goodMatches.size());
        if (goodMatches.size() < 4) {
            System.out.println("Need at least 4 good matches to estimate a homography.");
            return;
        }

        MatOfDMatch goodMatchesMat = new MatOfDMatch();
        goodMatchesMat.fromList(goodMatches);
        Mat outputMid = new Mat();
        Features2d.drawMatches(grayImage1, keypoints1, grayImage2, keypoints2, goodMatchesMat, outputMid);
        Imgcodecs.imwrite("outputMid - A - A.jpg", outputMid);

        MatOfPoint2f img1Locations = new MatOfPoint2f();
        img1Locations.fromList(img1GoodPointsList);
        MatOfPoint2f img2Locations = new MatOfPoint2f();
        img2Locations.fromList(img2GoodPointsList);

        // Find the homography matrix. img2Locations is given first, so hg maps image2 onto
        // image1, i.e. we get the inverse H' directly.
        Mat hg = Calib3d.findHomography(img2Locations, img1Locations, Calib3d.RANSAC, 3);
        if (hg.empty()) {
            System.out.println("Homography estimation failed.");
            return;
        }
        System.out.println("hg is:\n" + hg.dump());

        // Find the locations to which the four corners of image2 will warp
        Size img1Size = grayImage1.size();
        Size img2Size = grayImage2.size();
        System.out.println("Sizes are: " + img1Size + ", " + img2Size);

        // Store homogeneous x, y, z for the 4 corners, one corner per column
        Mat img2Corners = new Mat(3, 4, CvType.CV_64FC1, new Scalar(0));
        img2Corners.put(0, 0, 0, img2Size.width, 0, img2Size.width);   // x
        img2Corners.put(1, 0, 0, 0, img2Size.height, img2Size.height); // y
        img2Corners.put(2, 0, 1, 1, 1, 1);                             // z - all 1
        System.out.println("Corners matrix is:\n" + img2Corners.dump());
        Mat img2CornersWarped = new Mat();
        Core.gemm(hg, img2Corners, 1, new Mat(), 0, img2CornersWarped);
        System.out.println("img2CornersWarped:\n" + img2CornersWarped.dump());

        double[] xCoordinates = new double[4];
        double[] yCoordinates = new double[4];
        double[] zCoordinates = new double[4];
        img2CornersWarped.get(0, 0, xCoordinates);
        img2CornersWarped.get(1, 0, yCoordinates);
        img2CornersWarped.get(2, 0, zCoordinates);

        // Start from image1's extent (it stays at the origin), then grow to include image2's corners
        double minX = 0, minY = 0;
        double maxX = img1Size.width, maxY = img1Size.height;
        for (int c = 0; c < 4; c++) {
            // Perspective divide: homogeneous (x, y, z) -> (x/z, y/z)
            double x = xCoordinates[c] / zCoordinates[c];
            double y = yCoordinates[c] / zCoordinates[c];
            minX = Math.min(x, minX);
            maxX = Math.max(x, maxX);
            minY = Math.min(y, minY);
            maxY = Math.max(y, maxY);
        }
        int offsetX = (int) Math.floor(minX); // <= 0
        int offsetY = (int) Math.floor(minY); // <= 0
        int width = (int) Math.ceil(maxX) - offsetX;
        int height = (int) Math.ceil(maxY) - offsetY;
        Size outSize = new Size(width, height); // Size is (width, height)

        // Corners may land at negative coordinates, so shift both images by (-offsetX, -offsetY)
        Mat shift = Mat.eye(3, 3, CvType.CV_64F);
        shift.put(0, 2, (double) -offsetX);
        shift.put(1, 2, (double) -offsetY);
        Mat shiftedHg = new Mat();
        Core.gemm(shift, hg, 1, new Mat(), 0, shiftedHg);

        // Warp to produce the final output
        Mat output1 = new Mat();
        Mat output2 = new Mat();
        Imgproc.warpPerspective(grayImage1, output1, shift, outSize);
        Imgproc.warpPerspective(grayImage2, output2, shiftedHg, outSize);

        // Simple 50/50 blend; areas covered by only one image come out at half brightness
        Mat output = new Mat();
        Core.addWeighted(output1, 0.5, output2, 0.5, 0, output);
        Imgcodecs.imwrite("output.jpg", output);
    }

    public static void main(String[] args) {
        System.loadLibrary(Core.NATIVE_LIBRARY_NAME);
        stitchImages();
    }
}
```

Change Descriptor

Switch from SURF to AKAZE. I have seen perfect image registration from this change alone.
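AKAZE's default descriptor (MLDB) is binary, so descriptors should be compared with Hamming distance; in the OpenCV 3.x Java API that is `DescriptorMatcher.BRUTEFORCE_HAMMING` rather than `BRUTEFORCE` (L2). The plain-Java sketch below (hypothetical class name, descriptors assumed to be `byte[]` of equal length) shows what a brute-force Hamming matcher computes for one query descriptor.

```java
/** Hypothetical sketch of brute-force matching of binary descriptors by Hamming distance. */
final class HammingMatch {
    private HammingMatch() {}

    /** Number of differing bits between two binary descriptors of equal length. */
    static int hamming(byte[] a, byte[] b) {
        int d = 0;
        for (int i = 0; i < a.length; i++) {
            d += Integer.bitCount((a[i] ^ b[i]) & 0xFF); // mask to avoid sign-extension bits
        }
        return d;
    }

    /** Index of the train descriptor with the smallest Hamming distance to query (-1 if train is empty). */
    static int nearest(byte[] query, byte[][] train) {
        int best = -1;
        int bestDist = Integer.MAX_VALUE;
        for (int j = 0; j < train.length; j++) {
            int d = hamming(query, train[j]);
            if (d < bestDist) {
                bestDist = d;
                best = j;
            }
        }
        return best;
    }
}
```

Counting differing bits with XOR and a popcount is much cheaper than an L2 distance over floats, and it is the metric that matches how binary descriptors are built.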

Output Image

This output uses less space, and the change of descriptor gives perfect registration.

P.S.: IMHO, coding is awesome, but the real treasure is the fundamental knowledge/concepts.