Save the following HTML as a local file, something like `/tmp/foo.html`, and open it in Firefox (I'm on 49.0.2):

```html
<!DOCTYPE html>
<html>
  <head>
    <meta charset="utf-8">
  </head>
  <body>
    <script src="http://localhost:1234/a.js"></script>
    <script src="http://localhost:1234/b.js"></script>
    <script src="http://localhost:1234/c.js"></script>
    <script src="http://localhost:1234/d.js"></script>
    <script src="http://localhost:1234/e.js"></script>
  </body>
</html>
```

I don't have a server running on port 1234, so the requests don't even successfully connect.

The behavior I'd expect here is for all the requests to fail and be done with it.

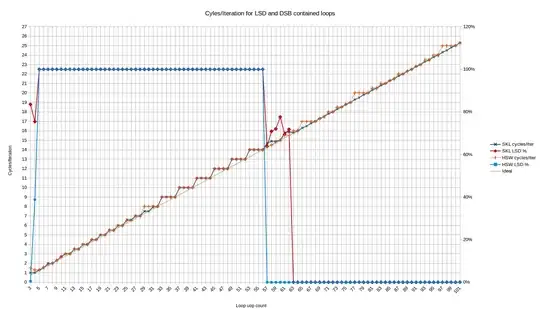

What actually happens in Firefox is all 5 .js files are requested in parallel, they fail to connect, then the last 4 get re-requested in serial. Like so:

Why?

If I boot a server on port 1234 that always 404s, the behavior is the same.
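Here's a minimal Python 3 sketch of such a server, in case it helps anyone reproduce this. It answers every GET with a 404 and also records the order in which the paths arrive. It assumes Python 3's `http.server` (the successor to Python 2's `SimpleHTTPServer`); the names `Always404Handler`, `make_server`, `run`, and `request_log` are made up for illustration.

```python
# Minimal sketch (assumes Python 3): a server that answers every GET with 404
# and remembers the order in which paths were requested.
from http.server import BaseHTTPRequestHandler, HTTPServer

# Requested paths in arrival order, e.g. ["/d.js", "/c.js", ...] (hypothetical name).
request_log = []


class Always404Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Record the path before replying, so repeated requests show up twice.
        request_log.append(self.path)
        # send_error() sends the 404 response and logs the request to stderr.
        self.send_error(404, "File not found")


def make_server(port=1234):
    """Create (but don't start) a server bound to 127.0.0.1:port."""
    return HTTPServer(("127.0.0.1", port), Always404Handler)


def run(port=1234):
    """Serve until Ctrl-C, then print the paths in the order they arrived."""
    server = make_server(port)
    try:
        server.serve_forever()
    except KeyboardInterrupt:
        pass
    finally:
        server.server_close()
    print("\n".join(request_log))
```

Save it as, say, `always404.py` (a hypothetical file name), start it with `python3 -c 'import always404; always404.run()'`, reload the page in Firefox, then press Ctrl-C to print the paths in the order they arrived.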

This particular example doesn't reproduce the behavior in Chrome, but I originally came across this behavior through other, similar examples.

EDIT: Here's how I tested that this also happens when the server returns 404s:

```shell
$ cd /tmp
$ mkdir empty
$ cd empty
$ python -m SimpleHTTPServer 1234
```

Then I reloaded the page in Firefox. It shows this:

The server sees all of those requests too. The first 5 arrive in no particular order because they're requested in parallel, but the last 4 are always b, c, d, e, in that order, since they're re-requested serially:

```
127.0.0.1 - - [02/Nov/2016 13:25:40] code 404, message File not found
127.0.0.1 - - [02/Nov/2016 13:25:40] "GET /d.js HTTP/1.1" 404 -
127.0.0.1 - - [02/Nov/2016 13:25:40] code 404, message File not found
127.0.0.1 - - [02/Nov/2016 13:25:40] "GET /c.js HTTP/1.1" 404 -
127.0.0.1 - - [02/Nov/2016 13:25:40] code 404, message File not found
127.0.0.1 - - [02/Nov/2016 13:25:40] "GET /b.js HTTP/1.1" 404 -
127.0.0.1 - - [02/Nov/2016 13:25:40] code 404, message File not found
127.0.0.1 - - [02/Nov/2016 13:25:40] "GET /a.js HTTP/1.1" 404 -
127.0.0.1 - - [02/Nov/2016 13:25:40] code 404, message File not found
127.0.0.1 - - [02/Nov/2016 13:25:40] "GET /e.js HTTP/1.1" 404 -
127.0.0.1 - - [02/Nov/2016 13:25:40] code 404, message File not found
127.0.0.1 - - [02/Nov/2016 13:25:40] "GET /b.js HTTP/1.1" 404 -
127.0.0.1 - - [02/Nov/2016 13:25:40] code 404, message File not found
127.0.0.1 - - [02/Nov/2016 13:25:40] "GET /c.js HTTP/1.1" 404 -
127.0.0.1 - - [02/Nov/2016 13:25:40] code 404, message File not found
127.0.0.1 - - [02/Nov/2016 13:25:40] "GET /d.js HTTP/1.1" 404 -
127.0.0.1 - - [02/Nov/2016 13:25:40] code 404, message File not found
127.0.0.1 - - [02/Nov/2016 13:25:40] "GET /e.js HTTP/1.1" 404 -
```
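To check the ordering in a log like this mechanically rather than by eye, here's a minimal Python 3 sketch that pulls the GET paths out of the log and lists the ones requested a second time. It assumes the log lines look exactly like the ones above; the regex and the helper names `requested_paths` and `repeated_requests` are my own, not part of any library.

```python
# Minimal sketch: extract GET paths from SimpleHTTPServer-style access log
# lines and report which paths were requested more than once.
import re

# Matches the request part of a line like:
# 127.0.0.1 - - [02/Nov/2016 13:25:40] "GET /d.js HTTP/1.1" 404 -
REQUEST_LINE = re.compile(r'"GET (\S+) HTTP/[\d.]+"')


def requested_paths(log_text):
    """Return the GET paths in the order they appear in the log."""
    # The "code 404, message ..." lines don't match and are skipped.
    return REQUEST_LINE.findall(log_text)


def repeated_requests(paths):
    """Return paths requested more than once, ordered by their second request."""
    seen = set()
    repeats = []
    for path in paths:
        if path in seen and path not in repeats:
            repeats.append(path)
        seen.add(path)
    return repeats
```

Fed the log above, `requested_paths` returns `['/d.js', '/c.js', '/b.js', '/a.js', '/e.js', '/b.js', '/c.js', '/d.js', '/e.js']`, and `repeated_requests` on that list returns `['/b.js', '/c.js', '/d.js', '/e.js']`. Since `SimpleHTTPServer` writes its log to stderr, running it as `python -m SimpleHTTPServer 1234 2> server.log` gives you a file to feed in.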