How can i combine a dataframe with a single column(Description) with another dataframe having 2 columns (Name, Caption), so that my resultant dataframe will contain 3 columns(Name,Caption,Description)

Asked

Active

Viewed 1,155 times

0

-

I tried join, but it results in Cartesian join. All the names and Captions mapped to all descriptions – Seena V P Nov 07 '16 at 04:40

-

Is there a key for the join ? How do you determine which description matches which name ? – Ramachandran.A.G Nov 07 '16 at 05:06

-

no key for joining. first name matches with first description,second one matches with second description and so on – Seena V P Nov 07 '16 at 05:34

-

please share your views. i am really stuck at this point. Please some one help me out of this – Seena V P Nov 07 '16 at 08:45

-

See http://stackoverflow.com/questions/32882529/how-to-zip-twoor-more-dataframe-in-spark. – Alexey Romanov Nov 07 '16 at 09:27

1 Answers

0

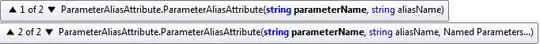

I am providing a solution in scala. Now i can add this as a comment , but for the formatting and image that i am attaching , providing this as an answer. I am pretty much sure that there must be an equivalent in Java as well for this

val nameCaptionDataFrame = Seq(("name1","caption1"),("name2","caption2"),("name3","caption3"),("name4","caption4")).toDF("name","caption")

val descriptionDataFrame = List("desc1","desc2","desc3","desc4").toDF("description")

val nameCaptionDataFrameWithId = nameCaptionDataFrame.withColumn("nameId",monotonically_increasing_id())

nameCaptionDataFrameWithId.show

val descriptionDataFrameId = descriptionDataFrame.withColumn("descId",monotonically_increasing_id())

descriptionDataFrameId.show

nameCaptionDataFrameWithId.join(descriptionDataFrameId, nameCaptionDataFrameWithId.col("nameId") === descriptionDataFrameId.col("descId")).show

Here is the sample output of this piece of code . I hope you will be able to take the idea from here (API's are consistent i assume) and do this in Java

** EDITS IN JAVA ** A "translation" of the code would look similar to this.

/**

* Created by RGOVIND on 11/8/2016.

*/

import org.apache.spark.SparkConf;

import org.apache.spark.api.java.JavaPairRDD;

import org.apache.spark.api.java.JavaRDD;

import org.apache.spark.api.java.JavaSparkContext;

import org.apache.spark.api.java.function.PairFunction;

import org.apache.spark.sql.*;

import scala.Tuple2;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.List;

public class SparkMain {

static public void main(String[] args) {

SparkConf conf = new SparkConf().setMaster("local").setAppName("Stack Overflow App");

JavaSparkContext sc = new JavaSparkContext(conf);

SQLContext sqlContext = new SQLContext(sc);

List<Tuple2<String, String>> tuples = new ArrayList<Tuple2<String, String>>();

tuples.add(new Tuple2<String, String>("name1", "caption1"));

tuples.add(new Tuple2<String, String>("name3", "caption2"));

tuples.add(new Tuple2<String, String>("name3", "caption3"));

List<String> descriptions = Arrays.asList(new String[]{"desc1" , "desc2" , "desc3"});

Encoder<Tuple2<String, String>> nameCaptionEncoder = Encoders.tuple(Encoders.STRING(), Encoders.STRING());

Dataset<Tuple2<String, String>> nameValueDataSet = sqlContext.createDataset(tuples, nameCaptionEncoder);

Dataset<String> descriptionDataSet = sqlContext.createDataset(descriptions, Encoders.STRING());

Dataset<Row> nameValueDataSetWithId = nameValueDataSet.toDF("name","caption").withColumn("id",functions.monotonically_increasing_id()).select("*");

Dataset<Row> descriptionDataSetId = descriptionDataSet.withColumn("id",functions.monotonically_increasing_id()).select("*");

nameValueDataSetWithId.join(descriptionDataSetId ,"id").show();

}

}

This prints the below. Hope this helps

Ramachandran.A.G

- 4,788

- 1

- 12

- 24

-

Tnaks for your reply. But i have an issue. "The method withColumn(String, Column) in the type Dataset

is not applicable for the arguments (String, MonotonicallyIncreasingID)". withColumn function not supporting MonotonicallyIncreasingID

– Seena V P Nov 07 '16 at 12:32 -

@SVP added a java sample. I am not 100% sure on performance or running this on a cluster. Works on my standalone node – Ramachandran.A.G Nov 08 '16 at 10:16