I don't understand what is going wrong here.

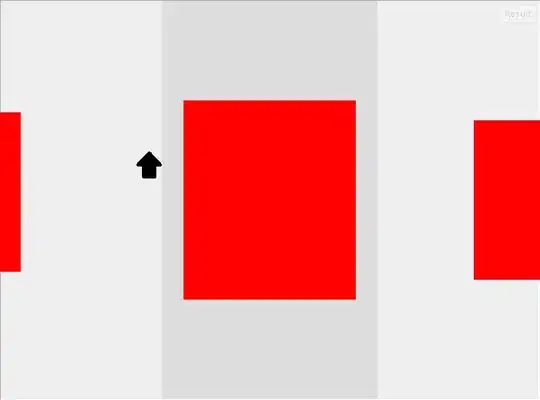

The problem is that the AND (`&&`) operator does not seem to work: every row is being directed to the else branch. Only when I remove the `&&` condition do some of the if conditions work. Please look at the `age` and `ageGroup` columns in the screenshot and compare them with the UDF declaration. How are ages 6 and 7 adults while 20 is a kid?

Here is my code:

All Spark imports and initializations

import org.apache.spark.{ SparkConf, SparkContext }

import org.apache.spark.sql.types._

import org.apache.spark.sql._

import org.apache.spark.sql.functions._

import org.apache.spark.sql.functions.udf

case class Person(name: String, address: String, state: String, age: Int, phone: Int, order: String)

val df = Seq(

("adnan", "migi way", "texas", 10, 333, "AX-1"),

("dim", "gigi way", "utah", 6,222, "AX-2"),

("alvee", "sigi way", "utah", 9,222, "AX-2"),

("john", "higi way", "georgia", 20,111, "AX- 3")).toDF("name","address","state","age","phone", "order")

val df1 = datafile.map(_.split("\\|")).map(attr => Person(attr(0).toString, attr(1).toString, attr(2).toString, attr(3).toInt, attr(4).toInt, attr(5).toString)).toDF()

UDF Code below

def ageFilter = udf((age: Int) => {

if (age >= 2 && age <= 9) "bacha"

if (age >= 10 ) "kiddo"

else "adult"

})

Calling the UDF

val one_hh_ages = df1.withColumn("ageGroup", ageFilter($"age"))
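The output in the screenshot is consistent with the two `if` statements in the UDF being independent rather than chained, which would mean `&&` itself is working. The sketch below reproduces the UDF body as plain Scala, without Spark, next to a chained version. The names `AgeGroups`, `ageGroupAsWritten`, and `ageGroupChained` are made up for illustration, and the thresholds are copied from the UDF as written; whether "kiddo" should also have an upper bound is not stated in the question, so none is assumed here.

```scala
object AgeGroups {
  // Same body as the UDF above: the first `if` has no `else`, so its
  // result is computed and then thrown away. Only the second if/else
  // decides the return value.
  def ageGroupAsWritten(age: Int): String = {
    if (age >= 2 && age <= 9) "bacha" // value discarded
    if (age >= 10) "kiddo"
    else "adult"
  }

  // Chained version: `else if` makes the three branches one expression,
  // so the first matching condition determines the result.
  def ageGroupChained(age: Int): String = {
    if (age >= 2 && age <= 9) "bacha"
    else if (age >= 10) "kiddo"
    else "adult"
  }

  def main(args: Array[String]): Unit = {
    // Print both results for the ages used in the sample data.
    for (age <- Seq(6, 9, 10, 20)) {
      println(s"$age: asWritten=${ageGroupAsWritten(age)}, chained=${ageGroupChained(age)}")
    }
  }
}
```

With the body as written, any age below 10 falls through to "adult" and any age of 10 or more becomes "kiddo", which matches 6 and 7 showing as adults and 20 as a kid. If this is indeed the cause, changing the second `if` in the UDF to `else if` should be enough.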

This is the question I used as a reference: Apache Spark, add an "CASE WHEN ... ELSE ..." calculated column to an existing DataFrame
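For comparison, the same grouping can probably be written without a UDF by using Spark's built-in `when` / `otherwise` column functions, which behave like a SQL `CASE WHEN ... ELSE`. This is only a sketch under assumptions: the object name `AgeGroupColumn`, the helper `withAgeGroup`, and the local `SparkSession` setup are hypothetical, the sample rows are reduced to `name` and `age`, and the thresholds are again taken from the UDF as written.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, when}

object AgeGroupColumn {
  // Adds an "ageGroup" column; conditions are checked in order and the
  // first match wins, like CASE WHEN ... WHEN ... ELSE in SQL.
  def withAgeGroup(df: DataFrame): DataFrame =
    df.withColumn(
      "ageGroup",
      when(col("age").between(2, 9), "bacha") // between is inclusive on both ends
        .when(col("age") >= 10, "kiddo")
        .otherwise("adult")
    )

  def main(args: Array[String]): Unit = {
    // Assumed local session for illustration only.
    val spark = SparkSession.builder()
      .master("local[*]")
      .appName("age-groups")
      .getOrCreate()
    import spark.implicits._

    // Subset of the sample rows from the question.
    val people = Seq(("adnan", 10), ("dim", 6), ("alvee", 9), ("john", 20))
      .toDF("name", "age")

    withAgeGroup(people).show()
    spark.stop()
  }
}
```

Because `when` chains are a single column expression, there is no way to accidentally drop a branch the way a standalone `if` statement can, and Spark can optimize the expression instead of treating it as an opaque function.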