I have a Hadoop cluster. Now I want to install Pig and Hive on another machine as a client. The client machine will not be part of the cluster. Is that possible? If so, how do I connect the client machine to the cluster?


1 Answer


First of all, if you have a Hadoop cluster, then you must have a master node (NameNode) and slave nodes (DataNodes).

The other piece is the client node.

The Hadoop cluster works as follows:

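Here the NameNode and DataNodes form the Hadoop cluster. The client submits jobs to the cluster (to the JobTracker, or to the ResourceManager on YARN) and contacts the NameNode to access HDFS.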

To achieve this, the client should have the same Hadoop distribution and configuration that is present on the NameNode. Only then will the client know on which node the JobTracker is running and the address of the NameNode for accessing HDFS data.
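In practice you would normally copy the configuration directory from the cluster to the client rather than write it by hand. Purely as an illustration of what the client needs to know, here is a minimal sketch in Python that renders the two key entries as Hadoop-style site XML. The host names and ports are hypothetical placeholders, and `build_site_xml` is a helper made up for this example. `fs.defaultFS` is the Hadoop 2+ property name (older releases use `fs.default.name`), and `mapred.job.tracker` applies to clusters that run a JobTracker.

```python
import xml.etree.ElementTree as ET


def build_site_xml(properties):
    """Render a dict of Hadoop properties as a *-site.xml document string."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


# Hypothetical host names and ports; use the values from your own cluster.
core_site = build_site_xml({"fs.defaultFS": "hdfs://namenode.example.com:8020"})
mapred_site = build_site_xml({"mapred.job.tracker": "jobtracker.example.com:8021"})

print(core_site)    # content for core-site.xml on the client
print(mapred_site)  # content for mapred-site.xml on the client
```

The point is only that the client configuration must name the same NameNode and JobTracker as the cluster configuration does; every other setting should also match the cluster.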

Go to Link1 Link2 for client configuration.

According to your question

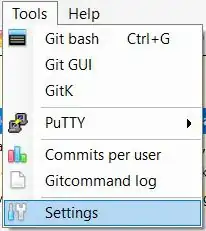

Once the Hadoop cluster is fully configured (master + slaves + client), follow these steps:

- Install Hive and Pig on the master node.

- Install Hive and Pig on the client node.

- Start writing Pig and Hive scripts on the client node.
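Before writing scripts, it can help to confirm that the client actually has the tools installed. The following is a small sketch, assuming the standard launcher names `hadoop`, `pig` and `hive` are expected on the `PATH` and that the configuration directory is exposed through the `HADOOP_CONF_DIR` environment variable. `missing_tools` is a hypothetical helper written for this example.

```python
import os
import shutil


def missing_tools(tools, which=shutil.which):
    """Return the tools from `tools` that cannot be found on PATH."""
    return [tool for tool in tools if which(tool) is None]


# Check the launchers the client node is expected to provide.
missing = missing_tools(["hadoop", "pig", "hive"])
print("Missing from PATH:", missing or "none")

# The Hadoop launchers read the cluster configuration from this directory.
print("HADOOP_CONF_DIR:", os.environ.get("HADOOP_CONF_DIR", "(not set)"))
```

If anything is reported as missing, install it or fix the `PATH` on the client before trying to submit a job.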

Feel free to comment if you have any doubts.


Ankur Singh


- Thank you, Mr. Ankur. I have another doubt: if I want to use the R tool with the Hadoop cluster, what do I have to do? – Abhishek Vaidya Nov 11 '16 at 15:57

- If this answers your question above, please mark it as the correct answer. – Ankur Singh Nov 11 '16 at 16:02

- You need to install RHadoop on your cluster to connect R with the Hadoop cluster. – Ankur Singh Nov 11 '16 at 16:03

- I will give you a more detailed answer if you ask a separate question about connecting R with Hadoop. – Ankur Singh Nov 11 '16 at 16:04

- Sure, Mr. Ankur. Right now I am focusing on learning Hadoop as well as R programming. When it comes to the actual implementation, I will definitely ask you about it, as I think I will have to struggle a lot. – Abhishek Vaidya Nov 13 '16 at 07:39

- OK, I will help you. – Ankur Singh Nov 13 '16 at 07:51