As the title states, how does one initialize the variables created under variable_scope in TensorFlow? I was not aware that this was necessary, since I thought they were constants. However, when I run the session on Android to predict the output, I get the error:

    Error during inference: Failed precondition: Attempting to use uninitialized value weights0
         [[Node: weights0/read = Identity[T=DT_FLOAT, _class=["loc:@weights0"], _device="/job:localhost/replica:0/task:0/cpu:0"](weights0)]]
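
For reference, a minimal sketch of the condition the error describes, assuming TensorFlow's `tf.compat.v1` graph/session API rather than the exact setup above. The variable name matches the one in the error, but the shape and initializer are placeholders. It only shows that a variable made with `get_variable` holds no value until its initializer op is run (or it is restored from a checkpoint).

```python
import tensorflow as tf

tf1 = tf.compat.v1  # TF 1.x-style graph/session API

graph = tf.Graph()
with graph.as_default():
    # Hypothetical stand-in for "weights0"; shape and initializer are arbitrary.
    w = tf1.get_variable("weights0", shape=[2, 3],
                         initializer=tf1.zeros_initializer())
    # Op that lists the names of variables that currently have no value.
    uninitialized = tf1.report_uninitialized_variables()
    # Op that runs the initializer of every global variable in this graph.
    init_op = tf1.global_variables_initializer()

with tf1.Session(graph=graph) as sess:
    before = sess.run(uninitialized)  # weights0 is reported here
    sess.run(init_op)
    after = sess.run(uninitialized)   # nothing left to report
    value = sess.run(w)               # reading the variable now succeeds

print(before, after, value.shape)
```

Restoring from a checkpoint with a saver would give the variable a value in the same way; the initializer op is used here only because it keeps the sketch self-contained.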

I tried wrapping the variables in tf.Variable (i.e. `'h1': tf.Variable(vs.get_variable("weights0", [n_input, n_hidden_1], initializer=tf.contrib.layers.xavier_initializer()))`), but then I get the error `tensor name 'Variable' not found in checkpoint files` when attempting to generate the protobuf file.
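
A small sketch of what the wrapping does to variable names, again assuming the `tf.compat.v1` API and a placeholder shape. Passing an existing variable to `tf.Variable` creates a second variable under an auto-generated name, which would be consistent with a name-based checkpoint lookup failing on `Variable`.

```python
import tensorflow as tf

tf1 = tf.compat.v1  # TF 1.x-style graph API

graph = tf.Graph()
with graph.as_default():
    # Hypothetical stand-in for the first weight matrix.
    inner = tf1.get_variable("weights0", shape=[2, 3],
                             initializer=tf1.zeros_initializer())
    # Wrapping does not rename `inner`; it adds a new, separately named variable.
    wrapped = tf1.Variable(inner)
    # Strip the ":0" output suffix to get the names a saver would look up.
    names = sorted(v.name.split(":")[0] for v in tf1.global_variables())

print(names)
```

The graph now holds both `weights0` and a new variable with the default name `Variable`, and only the former would exist in a checkpoint written before the wrapping was added.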

Snippet

    import tensorflow as tf
    # Assumed sources of the `vs` and `init_ops` aliases used below.
    from tensorflow.python.ops import init_ops
    from tensorflow.python.ops import variable_scope as vs

    def reg_perceptron(t, weights, biases):
        # Two hidden layers (ReLU, then sigmoid) followed by a linear output.
        t = tf.nn.relu(tf.add(tf.matmul(t, weights['h1']), biases['b1']), name="layer_1")
        t = tf.nn.sigmoid(tf.add(tf.matmul(t, weights['h2']), biases['b2']), name="layer_2")
        t = tf.add(tf.matmul(t, weights['hOut'], name="LOut_MatMul"), biases['bOut'], name="LOut_Add")
        # Flatten the [batch, 1] output to a 1-D tensor.
        return tf.reshape(t, [-1], name="Y_GroundTruth")

    # First graph
    g = tf.Graph()
    with g.as_default():
        ...
        rg_weights = {
            'h1': vs.get_variable("weights0", [n_input, n_hidden_1], initializer=tf.contrib.layers.xavier_initializer()),
            'h2': vs.get_variable("weights1", [n_hidden_1, n_hidden_2], initializer=tf.contrib.layers.xavier_initializer()),
            'hOut': vs.get_variable("weightsOut", [n_hidden_2, 1], initializer=tf.contrib.layers.xavier_initializer())
        }
        rg_biases = {
            'b1': vs.get_variable("bias0", [n_hidden_1], initializer=init_ops.constant_initializer(bias_start)),
            'b2': vs.get_variable("bias1", [n_hidden_2], initializer=init_ops.constant_initializer(bias_start)),
            'bOut': vs.get_variable("biasOut", [1], initializer=init_ops.constant_initializer(bias_start))
        }
        pred = reg_perceptron(_x, rg_weights, rg_biases)
        ...

    ...

    # Second graph, built with the same variable names
    g_2 = tf.Graph()
    with g_2.as_default():
        ...
        rg_weights_2 = {
            'h1': vs.get_variable("weights0", [n_input, n_hidden_1], initializer=tf.contrib.layers.xavier_initializer()),
            'h2': vs.get_variable("weights1", [n_hidden_1, n_hidden_2], initializer=tf.contrib.layers.xavier_initializer()),
            'hOut': vs.get_variable("weightsOut", [n_hidden_2, 1], initializer=tf.contrib.layers.xavier_initializer())
        }
        rg_biases_2 = {
            'b1': vs.get_variable("bias0", [n_hidden_1], initializer=init_ops.constant_initializer(bias_start)),
            'b2': vs.get_variable("bias1", [n_hidden_2], initializer=init_ops.constant_initializer(bias_start)),
            'bOut': vs.get_variable("biasOut", [1], initializer=init_ops.constant_initializer(bias_start))
        }
        pred_2 = reg_perceptron(_x_2, rg_weights_2, rg_biases_2)
        ...

EDIT

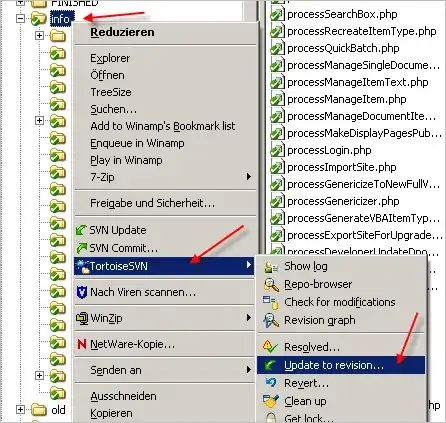

Could I be creating the protobuf file the wrong way? The code that I use for .PB generation which can be found here returns

(Here the blue line represents the target values whereas the green line shows the predicted values.)

instead (from http://pastebin.com/RUFa9NkN) despite both codes using the same inputs and model.
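
For comparison, a minimal sketch of one common way to produce a frozen graph, assuming the `tf.compat.v1` API. This is not the linked script: the toy model, the node names `x` and `pred`, and the ones initializer are all placeholders chosen so the result is easy to check. The idea is that the variables must hold their trained values in the session at the moment they are converted to constants, otherwise whatever values they hold at that moment (for example fresh initial values) are what end up in the .pb.

```python
import tensorflow as tf

tf1 = tf.compat.v1  # TF 1.x-style graph/session API

graph = tf.Graph()
with graph.as_default():
    # Hypothetical one-layer model standing in for the real network.
    x = tf1.placeholder(tf.float32, shape=[None, 2], name="x")
    w = tf1.get_variable("weights0", shape=[2, 1],
                         initializer=tf1.ones_initializer())
    pred = tf.matmul(x, w, name="pred")
    init_op = tf1.global_variables_initializer()

with tf1.Session(graph=graph) as sess:
    # In a real export, restore trained values from a checkpoint here instead.
    sess.run(init_op)
    # Replace every variable with a constant holding its current value.
    frozen = tf1.graph_util.convert_variables_to_constants(
        sess, graph.as_graph_def(), ["pred"])

# These bytes are what would be written to the .pb file.
pb_bytes = frozen.SerializeToString()

# Load the frozen graph into a fresh graph and run it with no initialization.
with tf.Graph().as_default() as frozen_graph:
    tf1.import_graph_def(frozen, name="")
with tf1.Session(graph=frozen_graph) as sess:
    out = sess.run("pred:0", feed_dict={"x:0": [[1.0, 2.0]]})

print(out)
```

If predictions from the frozen graph differ from those of the training script on the same inputs, comparing a few weight values before and after freezing is one way to check whether the trained values actually made it into the file.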