Edit 2 - To further elaborate on the "lack of performance penalty" part due to popular demand:

...I would've expected there to be a considerable decline in computing

speed as more threads were added, but the results don't seem to show

that.

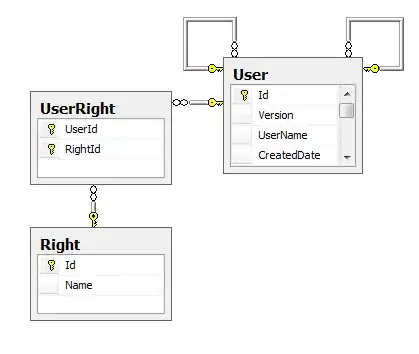

I made this giant chart in order to better illustrate the scaling.

To explain the results:

The blue bar illustrates the total time to do all the tosses. Although that time decreases all the way up to 256 threads, the gains from doubling the thread count gets smaller and smaller. The CPU I ran this test had 4 physical and 8 logical cores. Scaling is pretty good all the way to 4 cores and decent to 8 cores, and then it plummets. Pipeline saturation allows to get minor gains all the way to 256 but it is simply not worth it.

The red bar illustrates the time per toss. It is nearly identical for 1 and 2 threads, as the CPU pipeline hasn't reached full saturation yet. It gets a minor hit at 4 threads, it still runs fine but now the pipeline is saturated, and at 8 threads it really shows that logical threads are not the same thing as physical, that gets progressively worse pushing above 8 threads.

The green bar illustrates the overhead, or how much lower actual performance is relative to the expected double boost from doubling the threads. Pushing above the available logical cores causes the overhead to skyrocket. Note that this is mostly thread synchronization, the actual thread scheduling overhead is probably constant after a given point, there is a minimal window of activity time a thread must receive, which explains why the thread switching doesn't come to the point of overwhelming the work throughput. In fact there is no severe performance drop all the way to 4k threads, which is expected as modern systems have to be able and often run over thousand threads in parallel. And again, most of that drop is due to thread synchronization, not thread switching.

The black outline bar illustrates the time difference relative to the lowest time. At 8 threads we only lose ~14% of absolute performance from not having the pipeline oversaturated, which is a good thing because it is most cases not really worth stressing the entire system over so little. It also shows that 1 thread is only ~6 times slower than the maximum the CPU can pull off. Which gives a figure of how good logical cores are compared to physical cores, 100% extra logical cores give a 50% boost in performance, in this use case a logical thread is ~50% as good as a physical thread, which also correlates to the ~47% boost we see going from 4 to 8. Note that this is a very simply workload though, in more demanding cases it is close to 20-25% for this particular CPU, and in some edge cases there is actually a performance hit.

Edit 1 - I foolishly forgot to isolate the computational workload from the thread synchronization workload.

Running the test with little to no work reveals that for high thread counts the thread management part takes the bulk of the time. Thus the thread switching penalty is indeed very small and possibly after a certain point a constant.

And it would make a lot of sense if you put yourself in the shoes of a thread scheduler maker. The scheduler can easily be protected from being choked by an unreasonably high switching to working ratio, so there is likely a minimal window of time the scheduler will give to a thread before switching to another, while the rest are put on hold. This ensures that the the switching to working ratio will never exceed the limits of what is reasonable. It would be much better to stall other threads than go crazy with thread switching, as the CPU will mostly be switching and doing very little actual work.

The optimal thread count is the available amount of logical CPU cores. This achieves optimal pipeline saturation.

If you use more you will suffer performance degradation due to the cost of thread context switching. The more threads, the more penalty.

If you use less, you will not be utilizing the full hardware potential.

There is also the problem of workload graining, which is very important when you utilize synchronization such as a mutex. If your concurrency is too finely grained you can experience performance drops even when going from 1 to 2 threads on a 8 thread machine. You'd want to reduce synchronization as much as possible, doing as much work as possible in between synchronizations, otherwise you can experience huge performance drops.

Note the difference between physical and logical CPU core. Processors with hyper-threading can have more than one logical core per physical core. "Secondary" logical cores do not have the same computational power as the "primary" as they are merely used to utilize vacancies in the processor pipeline usage.

For example, if you have a 4 core 8 thread CPU, in the case of a perfectly scaling workload you will see 4 times increase of performance going from 1 to 4 threads, but a lot less going from 4 to 8 threads, as evident from vu1p3n0x's answer.

You can look here for ways to determine the number of available CPU cores.