While learning a bit about TensorFlow, I have been building a variational autoencoder (VAE). It works, but I noticed that, after training, I get different results from two decoders that share the same variables.

I created two decoders: the first is trained against my dataset, and the second will eventually be fed new Z encodings in order to produce new values.

My check is that I should be able to send the Z values generated by the encoding process to both decoders and get identical results.

I have two decoders, D and D_new. D_new reuses the variables in D's variable scope.

Before training, I can feed values into the encoder (E) and get both the decoder output and the Z values the encoder generated (Z_gen).

If I use Z_gen as input to D_new before training, its output is identical to the output of D, which is expected.

After a few iterations of training, however, the output of D and the output of D_new begin to diverge (although they remain quite similar).
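
The expectation described above can be illustrated outside TensorFlow. The following is a minimal NumPy sketch, not the graph from this post: `decode`, `w_hidden`, and `w_output` are hypothetical stand-ins for a bias-free decoder and the variables in its scope. When the same weights and the same Z values are used, the two outputs should be identical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def decode(z, w_hidden, w_output):
    """Bias-free two-layer decoder: tanh hidden layer, sigmoid output."""
    return sigmoid(np.tanh(z @ w_hidden) @ w_output)

rng = np.random.RandomState(0)
n_z, n_hidden, n_outputs = 2, 12, 100

# One set of weights, standing in for the variables in the shared scope.
w_hidden = rng.normal(size=(n_z, n_hidden)).astype(np.float32)
w_output = rng.normal(size=(n_hidden, n_outputs)).astype(np.float32)

# Hypothetical Z values, standing in for Z_gen.
z_vals = rng.normal(size=(3, n_z)).astype(np.float32)

out_d = decode(z_vals, w_hidden, w_output)      # plays the role of D
out_d_new = decode(z_vals, w_hidden, w_output)  # plays the role of D_new

# Same weights and same inputs, so the outputs are compared for exact equality.
outputs_match = np.array_equal(out_d, out_d_new)
print(outputs_match)
```

Any difference between the two outputs therefore has to come from a difference in the weights or in the Z values used at the moment each output was computed.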

I have pared this down to a simpler version of my code that still reproduces the problem. I'm wondering whether others have run into this and how I might correct it.

The code below can be run in a Jupyter notebook. I'm using TensorFlow r0.11 and Python 3.5.0.

    import numpy as np
    import tensorflow as tf
    import matplotlib
    import matplotlib.pyplot as plt
    import os
    import pylab as pl

    mgc = get_ipython().magic
    mgc(u'matplotlib inline')
    pl.rcParams['figure.figsize'] = (8.0, 5.0)

    ##-- Helper function Just for visualizing the data
    def plot_values(values, file=None):
        t = np.linspace(1.0,len(values[0]),len(values[0]))
        for i in range(len(values)):
            plt.plot(t,values[i])
        if file is None:
            plt.show()
        else:
            plt.savefig(file)
        plt.close()

    def encoder(input, n_hidden, n_z):
        with tf.variable_scope("ENCODER"):
            with tf.name_scope("Hidden"):
                n_layer_inputs = input.get_shape()[1].value
                n_layer_outputs = n_hidden
                with tf.name_scope("Weights"):
                    w = tf.get_variable(name="E_Hidden", shape=[n_layer_inputs, n_layer_outputs], dtype=tf.float32)
                with tf.name_scope("Activation"):
                    a = tf.tanh(tf.matmul(input,w))
                prevLayer = a

            with tf.name_scope("Z"):
                n_layer_inputs = prevLayer.get_shape()[1].value
                n_layer_outputs = n_z
                with tf.name_scope("Weights"):
                    w = tf.get_variable(name="E_Z", shape=[n_layer_inputs, n_layer_outputs], dtype=tf.float32)
                with tf.name_scope("Activation"):
                    Z_gen = tf.matmul(prevLayer,w)
        return Z_gen

    def decoder(input, n_hidden, n_outputs, reuse=False):
        with tf.variable_scope("DECODER", reuse=reuse):
            with tf.name_scope("Hidden"):
                n_layer_inputs = input.get_shape()[1].value
                n_layer_outputs = n_hidden
                with tf.name_scope("Weights"):
                    w = tf.get_variable(name="D_Hidden", shape=[n_layer_inputs, n_layer_outputs], dtype=tf.float32)
                with tf.name_scope("Activation"):
                    a = tf.tanh(tf.matmul(input,w))
                prevLayer = a

            with tf.name_scope("OUTPUT"):
                n_layer_inputs = prevLayer.get_shape()[1].value
                n_layer_outputs = n_outputs
                with tf.name_scope("Weights"):
                    w = tf.get_variable(name="D_Output", shape=[n_layer_inputs, n_layer_outputs], dtype=tf.float32)
                with tf.name_scope("Activation"):
                    out = tf.sigmoid(tf.matmul(prevLayer,w))
        return out

Here is where the TensorFlow graph is set up:

    batch_size = 3
    n_inputs = 100
    n_hidden_nodes = 12
    n_z = 2

    with tf.variable_scope("INPUT_VARS"):
        with tf.name_scope("X"):
            X = tf.placeholder(tf.float32, shape=(None, n_inputs))
        with tf.name_scope("Z"):
            Z = tf.placeholder(tf.float32, shape=(None, n_z))

    Z_gen = encoder(X,n_hidden_nodes,n_z)
    D = decoder(Z_gen, n_hidden_nodes, n_inputs)
    D_new = decoder(Z, n_hidden_nodes, n_inputs, reuse=True)

    with tf.name_scope("COST"):
        loss = -tf.reduce_mean(X * tf.log(1e-6 + D) + (1-X) * tf.log(1e-6 + 1 - D))
        train_step = tf.train.AdamOptimizer(0.001, beta1=0.5).minimize(loss)
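
For reference, the `loss` expression above can be written out as an equation. This is only a transcription of the code, with notation chosen here for illustration: $x_{bi}$ is an element of `X`, $d_{bi}$ the matching element of `D`, $B$ the number of rows fed in, $N$ equal to `n_inputs`, and $\epsilon$ the `1e-6` constant that keeps the logarithms finite.

```latex
\mathcal{L} = -\frac{1}{B N} \sum_{b=1}^{B} \sum_{i=1}^{N}
  \Big[ x_{bi} \log(\epsilon + d_{bi})
      + (1 - x_{bi}) \log(\epsilon + 1 - d_{bi}) \Big],
\qquad \epsilon = 10^{-6}
```

The $1/(BN)$ factor reflects `tf.reduce_mean` being called without an axis argument, which averages over every element of the batch.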

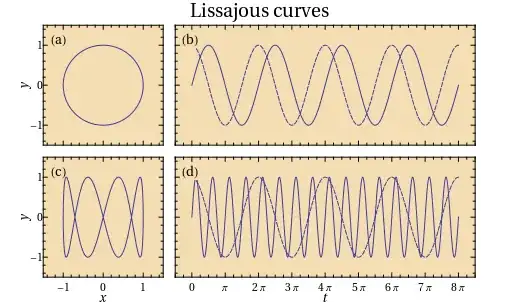

I generate a training set of 3 samples of normally distributed noise, each with 100 data points, and then sort each sample so it is easier to visualize:

    train_data = (np.random.normal(0,1,(batch_size,n_inputs)) + 3) / 6.0
    train_data.sort()
    plot_values(train_data)

Start up the session:

    sess = tf.InteractiveSession()
    sess.run(tf.group(tf.initialize_all_variables(), tf.initialize_local_variables()))

Let's just look at what the network initially generates before training...

    resultA, Z_vals = sess.run([D, Z_gen], feed_dict={X:train_data})
    plot_values(resultA)

Pull the generated Z values and feed them to D_new, which reuses the variables from D:

    resultB = sess.run(D_new, feed_dict={Z:Z_vals})
    plot_values(resultB)

Just for sanity I'll plot the difference between the two to be sure they're the same...
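
A numeric version of this sanity check could complement the plot (which would presumably be `plot_values(resultA - resultB)`, as in the later comparison). The sketch below uses only NumPy; `max_abs_diff` is a hypothetical helper, and `result_a`, `result_b`, and `result_c` are small stand-in arrays rather than real network outputs.

```python
import numpy as np

def max_abs_diff(a, b):
    """Largest element-wise absolute difference between two arrays."""
    return float(np.max(np.abs(np.asarray(a) - np.asarray(b))))

# Stand-in arrays; in the notebook these would be resultA and resultB.
result_a = np.array([[0.25, 0.50], [0.75, 1.00]], dtype=np.float32)
result_b = result_a.copy()

# Identical arrays give a maximum difference of exactly zero.
diff_same = max_abs_diff(result_a, result_b)
print(diff_same)

# Perturb one element to show what a mismatch looks like.
result_c = result_b.copy()
result_c[0, 0] += 0.125
diff_changed = max_abs_diff(result_a, result_c)
print(diff_changed)
```

A single number like this makes it easier to tell an exact match from a difference that is merely too small to see in a plot.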

Now run 1000 training epochs and plot the result...

    for i in range(1000):
        _, resultA, Z_vals = sess.run([train_step, D, Z_gen], feed_dict={X:train_data})

    plot_values(resultA)

Now let's feed those same Z values to D_new and plot those results...

    resultB = sess.run(D_new, feed_dict={Z:Z_vals})
    plot_values(resultB)

They look pretty similar. But (I think) they should be exactly the same. Let's look at the difference...

    plot_values(resultA - resultB)

You can see there is some variation now. This becomes much more dramatic with a larger network on more complex data, but still shows up in this simple example. Any clues as to what's going on?
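
One possibility that may be worth ruling out (an assumption about the cause, not something confirmed above): in the training loop, `D` and `Z_gen` are fetched in the same `sess.run` call as `train_step`, so the fetched values may have been computed from the weights as they were before that step's update, while the later `D_new` run uses the weights after it. The NumPy-only sketch below imitates that situation. `decode`, the weight arrays, and the fixed 0.01 nudge standing in for an optimizer update are all hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def decode(z, w_hidden, w_output):
    """Bias-free two-layer decoder: tanh hidden layer, sigmoid output."""
    return sigmoid(np.tanh(z @ w_hidden) @ w_output)

rng = np.random.RandomState(0)
w_hidden = rng.normal(size=(2, 12))
w_output = rng.normal(size=(12, 100))
z_vals = rng.normal(size=(3, 2))

# Output "fetched" in the same step as the update, assumed here to be
# computed from the weights as they were before the update.
fetched_with_step = decode(z_vals, w_hidden, w_output)

# Hypothetical stand-in for one optimizer update (a fixed nudge, not Adam).
w_hidden = w_hidden + 0.01
w_output = w_output - 0.01

# Output computed afterwards from the same Z values, as with D_new.
computed_after_step = decode(z_vals, w_hidden, w_output)

# Evaluating the first decoder again after the update, with no further step.
refetched_after_step = decode(z_vals, w_hidden, w_output)

stale_gap = float(np.max(np.abs(fetched_with_step - computed_after_step)))
fresh_gap = float(np.max(np.abs(refetched_after_step - computed_after_step)))
print(stale_gap, fresh_gap)
```

In the notebook, the analogous check would be one more `sess.run([D, Z_gen], feed_dict={X:train_data})` after the loop, without `train_step` in the fetch list, and then comparing that output against `D_new` fed with the freshly fetched Z values.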