I have personally found some errors in when using unix_timestamp based date converstions from dd-MMM-yyyy format to yyyy-mm-dd, using spark 1.6, but this may extend into recent versions. Below I explain a way to solve the problem using java.time that should work in all versions of spark:

I've seen errors when doing:

from_unixtime(unix_timestamp(StockMarketClosingDate, 'dd-MMM-yyyy'), 'yyyy-MM-dd') as FormattedDate

Below is code to illustrate the error, and my solution to fix it.

First I read in stock market data, in a common standard file format:

import sys.process._

import org.apache.spark.sql.SQLContext

import org.apache.spark.sql.functions.udf

import org.apache.spark.sql.types.{StructType, StructField, StringType, IntegerType, DateType}

import sqlContext.implicits._

val EODSchema = StructType(Array(

StructField("Symbol" , StringType, true), //$1

StructField("Date" , StringType, true), //$2

StructField("Open" , StringType, true), //$3

StructField("High" , StringType, true), //$4

StructField("Low" , StringType, true), //$5

StructField("Close" , StringType, true), //$6

StructField("Volume" , StringType, true) //$7

))

val textFileName = "/user/feeds/eoddata/INDEX/INDEX_19*.csv"

// below is code to read using later versions of spark

//val eoddata = spark.read.format("csv").option("sep", ",").schema(EODSchema).option("header", "true").load(textFileName)

// here is code to read using 1.6, via, "com.databricks:spark-csv_2.10:1.2.0"

val eoddata = sqlContext.read

.format("com.databricks.spark.csv")

.option("header", "true") // Use first line of all files as header

.option("delimiter", ",") //.option("dateFormat", "dd-MMM-yyyy") failed to work

.schema(EODSchema)

.load(textFileName)

eoddata.registerTempTable("eoddata")

And here is the date conversions having issues:

%sql

-- notice there are errors around the turn of the year

Select

e.Date as StringDate

, cast(from_unixtime(unix_timestamp(e.Date, "dd-MMM-yyyy"), 'YYYY-MM-dd') as Date) as ProperDate

, e.Close

from eoddata e

where e.Symbol = 'SPX.IDX'

order by cast(from_unixtime(unix_timestamp(e.Date, "dd-MMM-yyyy"), 'YYYY-MM-dd') as Date)

limit 1000

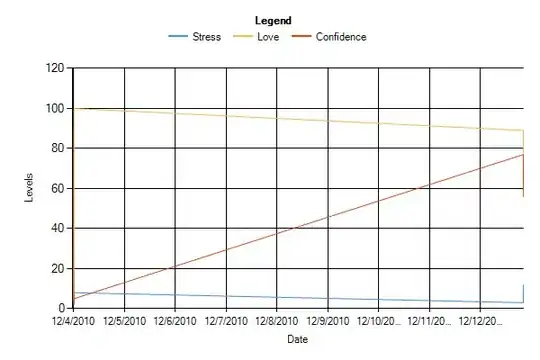

A chart made in zeppelin shows spikes, which are errors.

and here is the check that shows the date conversion errors:

// shows the unix_timestamp conversion approach can create errors

val result = sqlContext.sql("""

Select errors.* from

(

Select

t.*

, substring(t.OriginalStringDate, 8, 11) as String_Year_yyyy

, substring(t.ConvertedCloseDate, 0, 4) as Converted_Date_Year_yyyy

from

( Select

Symbol

, cast(from_unixtime(unix_timestamp(e.Date, "dd-MMM-yyyy"), 'YYYY-MM-dd') as Date) as ConvertedCloseDate

, e.Date as OriginalStringDate

, Close

from eoddata e

where e.Symbol = 'SPX.IDX'

) t

) errors

where String_Year_yyyy <> Converted_Date_Year_yyyy

""")

//df.withColumn("tx_date", to_date(unix_timestamp($"date", "M/dd/yyyy").cast("timestamp")))

result.registerTempTable("SPX")

result.cache()

result.show(100)

result: org.apache.spark.sql.DataFrame = [Symbol: string, ConvertedCloseDate: date, OriginalStringDate: string, Close: string, String_Year_yyyy: string, Converted_Date_Year_yyyy: string]

res53: result.type = [Symbol: string, ConvertedCloseDate: date, OriginalStringDate: string, Close: string, String_Year_yyyy: string, Converted_Date_Year_yyyy: string]

+-------+------------------+------------------+-------+----------------+------------------------+

| Symbol|ConvertedCloseDate|OriginalStringDate| Close|String_Year_yyyy|Converted_Date_Year_yyyy|

+-------+------------------+------------------+-------+----------------+------------------------+

|SPX.IDX| 1997-12-30| 30-Dec-1996| 753.85| 1996| 1997|

|SPX.IDX| 1997-12-31| 31-Dec-1996| 740.74| 1996| 1997|

|SPX.IDX| 1998-12-29| 29-Dec-1997| 953.36| 1997| 1998|

|SPX.IDX| 1998-12-30| 30-Dec-1997| 970.84| 1997| 1998|

|SPX.IDX| 1998-12-31| 31-Dec-1997| 970.43| 1997| 1998|

|SPX.IDX| 1998-01-01| 01-Jan-1999|1229.23| 1999| 1998|

+-------+------------------+------------------+-------+----------------+------------------------+

FINISHED

After this result, I switched to java.time conversions with a UDF like this, which worked for me:

// now we will create a UDF that uses the very nice java.time library to properly convert the silly stockmarket dates

// start by importing the specific java.time libraries that superceded the joda.time ones

import java.time.LocalDate

import java.time.format.DateTimeFormatter

// now define a specific data conversion function we want

def fromEODDate (YourStringDate: String): String = {

val formatter = DateTimeFormatter.ofPattern("dd-MMM-yyyy")

var retDate = LocalDate.parse(YourStringDate, formatter)

// this should return a proper yyyy-MM-dd date from the silly dd-MMM-yyyy formats

// now we format this true local date with a formatter to the desired yyyy-MM-dd format

val retStringDate = retDate.format(DateTimeFormatter.ISO_LOCAL_DATE)

return(retStringDate)

}

Now I register it as a function for use in sql:

sqlContext.udf.register("fromEODDate", fromEODDate(_:String))

and check the results, and rerun test:

val results = sqlContext.sql("""

Select

e.Symbol as Symbol

, e.Date as OrigStringDate

, Cast(fromEODDate(e.Date) as Date) as ConvertedDate

, e.Open

, e.High

, e.Low

, e.Close

from eoddata e

order by Cast(fromEODDate(e.Date) as Date)

""")

results.printSchema()

results.cache()

results.registerTempTable("results")

results.show(10)

results: org.apache.spark.sql.DataFrame = [Symbol: string, OrigStringDate: string, ConvertedDate: date, Open: string, High: string, Low: string, Close: string]

root

|-- Symbol: string (nullable = true)

|-- OrigStringDate: string (nullable = true)

|-- ConvertedDate: date (nullable = true)

|-- Open: string (nullable = true)

|-- High: string (nullable = true)

|-- Low: string (nullable = true)

|-- Close: string (nullable = true)

res79: results.type = [Symbol: string, OrigStringDate: string, ConvertedDate: date, Open: string, High: string, Low: string, Close: string]

+--------+--------------+-------------+-------+-------+-------+-------+

| Symbol|OrigStringDate|ConvertedDate| Open| High| Low| Close|

+--------+--------------+-------------+-------+-------+-------+-------+

|ADVA.IDX| 01-Jan-1996| 1996-01-01| 364| 364| 364| 364|

|ADVN.IDX| 01-Jan-1996| 1996-01-01| 1527| 1527| 1527| 1527|

|ADVQ.IDX| 01-Jan-1996| 1996-01-01| 1283| 1283| 1283| 1283|

|BANK.IDX| 01-Jan-1996| 1996-01-01|1009.41|1009.41|1009.41|1009.41|

| BKX.IDX| 01-Jan-1996| 1996-01-01| 39.39| 39.39| 39.39| 39.39|

|COMP.IDX| 01-Jan-1996| 1996-01-01|1052.13|1052.13|1052.13|1052.13|

| CPR.IDX| 01-Jan-1996| 1996-01-01| 1.261| 1.261| 1.261| 1.261|

|DECA.IDX| 01-Jan-1996| 1996-01-01| 205| 205| 205| 205|

|DECN.IDX| 01-Jan-1996| 1996-01-01| 825| 825| 825| 825|

|DECQ.IDX| 01-Jan-1996| 1996-01-01| 754| 754| 754| 754|

+--------+--------------+-------------+-------+-------+-------+-------+

only showing top 10 rows

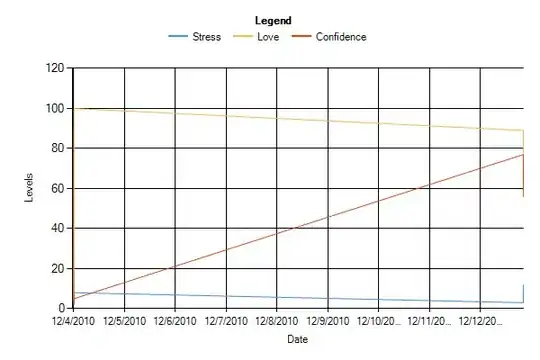

which looks ok, and I rerun my chart, to see if there are errors/spikes:

As you can see, no more spikes or errors. I now use a UDF as I've shown to apply my date format transformations to a standard yyyy-MM-dd format, and have not had spurious errors since. :-)