Is the calculated entropy from the gray-scale image (directly) the same as the entropy feature extracted from the GLCM (a texture feature)?

No, these two entropies are rather different:

- `skimage.filters.rank.entropy(grayImg, disk(5))` returns an array of the same size as `grayImg` containing the local entropy across the image. Each value is computed over a circular disk of radius 5 pixels centered at the corresponding pixel. See the Wikipedia article *Entropy (information theory)* for how entropy is calculated. The values in this array are useful for segmentation, for example for entropy-based object detection. If your goal is to describe the entropy of the image through a single (scalar) value, you can use `skimage.measure.shannon_entropy(grayImg)`. This function basically applies the following formula to the full image:

  $$H = -\sum_{k=0}^{M-1} p_k \log_b(p_k)$$

  where $M$ is the number of gray levels (256 for 8-bit images), $p_k$ is the probability of a pixel having gray level $k$, and $b$ is the base of the logarithm function. When $b$ is set to 2, the returned value is measured in bits.

- A gray level co-occurrence matrix (GLCM) is a histogram of co-occurring grayscale values at a given offset over an image. To describe the texture of an image, it is common to extract features such as entropy, energy, contrast and correlation from several co-occurrence matrices computed for different offsets. In this case the entropy is defined as follows:

  $$H = -\sum_{i=0}^{M-1}\sum_{j=0}^{M-1} p_{ij} \log_b(p_{ij})$$

  where $M$ and $b$ are again the number of gray levels and the base of the logarithm function, respectively, and $p_{ij}$ stands for the probability of two pixels separated by the specified offset having intensities $i$ and $j$. Unfortunately, entropy is not one of the GLCM properties that you can calculate through scikit-image*. If you wish to compute this feature, you need to pass the GLCM to `skimage.measure.shannon_entropy`.

*At the time this post was last edited, the latest version of scikit-image was 0.13.1.
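To make the difference between the two entropies concrete, here is a minimal NumPy-only sketch (not scikit-image's implementation) that computes both quantities on two hypothetical 4×4 two-level images. The helper names `image_entropy`, `glcm` and `glcm_entropy` are made up for illustration. The GLCM here uses a single offset given as a (row, column) displacement and is not symmetrized by default.

```python
import numpy as np


def image_entropy(img, levels, base=2):
    """Global Shannon entropy -sum_k p_k log_b(p_k) of the gray-level histogram."""
    counts = np.bincount(img.ravel(), minlength=levels)
    p = counts / counts.sum()
    p = p[p > 0]  # 0 * log(0) is taken as 0
    return -np.sum(p * np.log(p)) / np.log(base)


def glcm(img, offset, levels, symmetric=False):
    """Normalized co-occurrence matrix for one (row, col) offset (hypothetical helper)."""
    dr, dc = offset
    rows, cols = img.shape
    P = np.zeros((levels, levels), dtype=float)
    # Visit every reference pixel whose neighbour at (r + dr, c + dc) lies inside the image
    for r in range(max(0, -dr), min(rows, rows - dr)):
        for c in range(max(0, -dc), min(cols, cols - dc)):
            P[img[r, c], img[r + dr, c + dc]] += 1
    if symmetric:
        P = P + P.T  # count each pair in both directions
    return P / P.sum()


def glcm_entropy(P, base=2):
    """GLCM entropy -sum_ij p_ij log_b(p_ij)."""
    p = P[P > 0]
    return -np.sum(p * np.log(p)) / np.log(base)


# Left half dark (0), right half bright (1)
halves = np.array([[0, 0, 1, 1]] * 4)
# Same number of dark and bright pixels, arranged as a checkerboard
checker = np.indices((4, 4)).sum(axis=0) % 2

for name, img in [("halves", halves), ("checker", checker)]:
    P = glcm(img, offset=(0, 1), levels=2)  # horizontal neighbour at distance 1
    print(name, image_entropy(img, levels=2), glcm_entropy(P))
```

Both toy images contain eight dark and eight bright pixels, so their image entropy is 1 bit. Their horizontal GLCM entropies differ ($\log_2 3 \approx 1.585$ bits for the halves, 1 bit for the checkerboard), because the GLCM depends on how the gray levels are arranged, not only on how often they occur.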

If not, what is the right way to extract all the texture features from an image?

There is a wide variety of features to describe the texture of an image, for example local binary patterns, Gabor filters, wavelets, Laws' masks and many others. Haralick's GLCM is one of the most popular texture descriptors. One possible approach to describing the texture of an image through GLCM features consists of computing the GLCM for different offsets (each offset is defined through a distance and an angle) and extracting different properties from each GLCM.
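The approach of one GLCM per offset and several properties per GLCM can be sketched with NumPy alone, which may help clarify what `greycomatrix` and `greycoprops` compute. This is a hypothetical illustration, not scikit-image's code. The helper names `glcm_stack`, `energy` and `homogeneity` are made up, and mapping an angle $\theta$ and distance $d$ to the (row, column) displacement $(\mathrm{round}(d\sin\theta), \mathrm{round}(d\cos\theta))$ is an assumed convention. The toy image is random. Energy is taken as $\sqrt{\sum_{i,j} p_{ij}^2}$ and homogeneity as $\sum_{i,j} p_{ij}/(1+(i-j)^2)$, which to my knowledge match the definitions documented for `greycoprops`.

```python
import numpy as np


def glcm_stack(img, distances, angles, levels, symmetric=True):
    """Normalized GLCMs with shape (levels, levels, len(distances), len(angles))."""
    rows, cols = img.shape
    out = np.zeros((levels, levels, len(distances), len(angles)))
    for d_idx, d in enumerate(distances):
        for a_idx, theta in enumerate(angles):
            # Assumed convention: (distance, angle) -> (row, col) displacement
            dr = int(round(d * np.sin(theta)))
            dc = int(round(d * np.cos(theta)))
            # Reference pixels whose neighbour at (r + dr, c + dc) is inside the image
            r0, r1 = max(0, -dr), min(rows, rows - dr)
            c0, c1 = max(0, -dc), min(cols, cols - dc)
            ref = img[r0:r1, c0:c1].ravel()
            nbr = img[r0 + dr:r1 + dr, c0 + dc:c1 + dc].ravel()
            P = np.zeros((levels, levels))
            np.add.at(P, (ref, nbr), 1)  # accumulate pair counts
            if symmetric:
                P = P + P.T
            out[:, :, d_idx, a_idx] = P / P.sum()
    return out


def energy(P):
    """Square root of the angular second moment, one value per offset."""
    return np.sqrt(np.sum(P ** 2, axis=(0, 1)))


def homogeneity(P):
    """sum_ij p_ij / (1 + (i - j)^2), one value per offset."""
    i, j = np.indices(P.shape[:2])
    weights = 1.0 / (1.0 + (i - j) ** 2)
    return np.sum(P * weights[:, :, None, None], axis=(0, 1))


rng = np.random.default_rng(0)
img = rng.integers(0, 8, size=(32, 32))  # toy image with 8 gray levels
P = glcm_stack(img, distances=[1, 2],
               angles=[0, np.pi/4, np.pi/2, 3*np.pi/4], levels=8)
feats = np.hstack([prop(P).ravel() for prop in (energy, homogeneity)])
print(P.shape, feats.shape)  # (8, 8, 2, 4) (16,)
```

Storing the matrices as a `(levels, levels, n_distances, n_angles)` array lets each property be computed for all offsets at once by summing over the first two axes. Ravelling the resulting `(n_distances, n_angles)` arrays gives a distance-major ordering, like the `greycoprops` loop in the code that follows.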

Let us consider, for example, three distances (1, 2 and 3 pixels), four angles (0, 45, 90 and 135 degrees) and two properties (energy and homogeneity). This results in $3 \times 4 = 12$ offsets (and hence 12 GLCMs) and a feature vector of dimension $3 \times 4 \times 2 = 24$. Here's the code:

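```python
import numpy as np
from skimage import io, color, img_as_ubyte
# scikit-image >= 0.19 spells these graycomatrix / graycoprops
from skimage.feature import greycomatrix, greycoprops
# Entropy of a label array, computed with the natural logarithm
from sklearn.metrics.cluster import entropy

# Load the sample image and convert it to 8-bit grayscale
rgbImg = io.imread('https://i.stack.imgur.com/1xDvJ.jpg')
grayImg = img_as_ubyte(color.rgb2gray(rgbImg))

# 3 distances x 4 angles = 12 offsets
distances = [1, 2, 3]
angles = [0, np.pi/4, np.pi/2, 3*np.pi/4]
properties = ['energy', 'homogeneity']

# One symmetric, normalized GLCM per offset,
# shape (levels, levels, len(distances), len(angles))
glcm = greycomatrix(grayImg,
                    distances=distances,
                    angles=angles,
                    symmetric=True,
                    normed=True)

# Each greycoprops call returns a (3, 4) array; flatten and concatenate them
feats = np.hstack([greycoprops(glcm, prop).ravel() for prop in properties])
```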
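Since `greycoprops` does not offer entropy in scikit-image 0.13.1, one option is to evaluate the GLCM entropy $-\sum_i\sum_j p_{ij}\log_b(p_{ij})$ directly on the normalized `glcm` array. The sketch below uses a hypothetical helper, `glcm_entropy` (not a scikit-image function), that returns one value per offset in the same `(n_distances, n_angles)` layout as `greycoprops`. It works from the formula rather than through `skimage.measure.shannon_entropy`. To my knowledge, recent scikit-image releases compute `shannon_entropy` from the counts of distinct values in its input, so passing a GLCM to it there may not give the GLCM entropy. The uniform array below is a stand-in for the real `glcm`.

```python
import numpy as np


def glcm_entropy(glcm, base=2):
    """Entropy -sum_ij p_ij log_b(p_ij) of each normalized GLCM.

    `glcm` is assumed to have shape (levels, levels, n_distances, n_angles),
    with each [:, :, d, a] slice summing to 1.
    Returns an array of shape (n_distances, n_angles).
    """
    p = np.asarray(glcm, dtype=float)
    # Use log(1) = 0 where p == 0 so that 0 * log(0) contributes 0
    logp = np.log(np.where(p > 0, p, 1.0)) / np.log(base)
    return -np.sum(p * logp, axis=(0, 1))


# Stand-in for the GLCM array: 4 gray levels, 3 distances, 4 angles,
# every slice uniform over its 16 cells
levels = 4
glcm = np.full((levels, levels, 3, 4), 1.0 / levels**2)
ent = glcm_entropy(glcm)
print(ent.shape)  # (3, 4); every entry equals log2(16) = 4 bits
```

With the real `glcm` from the code above, `np.hstack([feats, glcm_entropy(glcm).ravel()])` would extend the 24-dimensional feature vector with 12 entropy values, giving 36 features.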

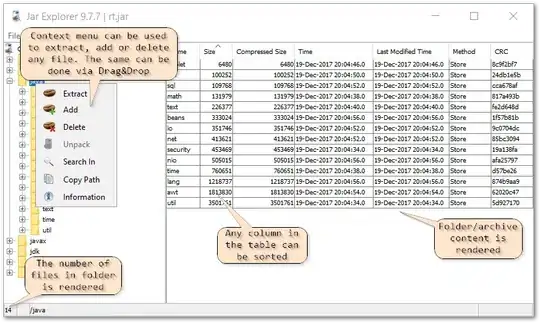

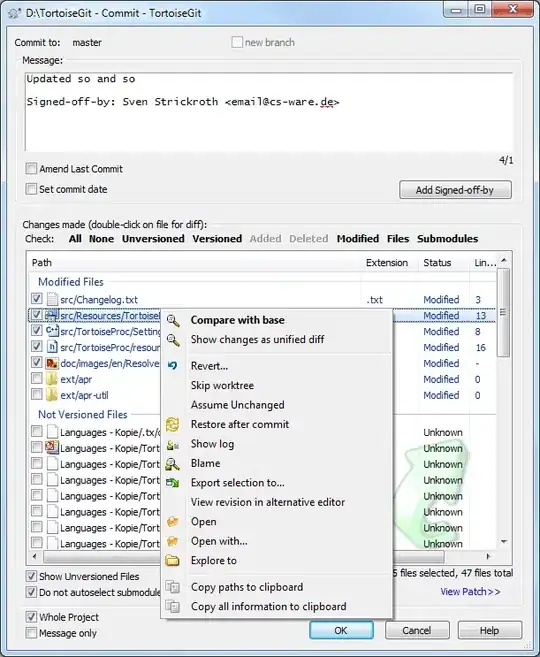

Results obtained using this image:


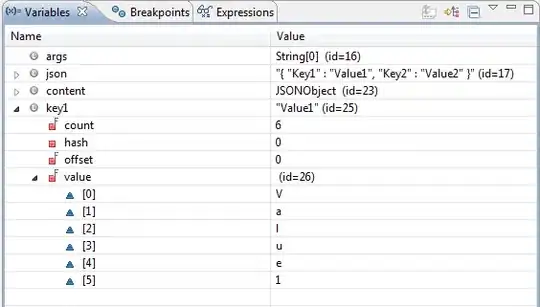

```
In [56]: entropy(grayImg)
Out[56]: 5.3864158185167534

In [57]: np.set_printoptions(precision=4)

In [58]: print(feats)
[ 0.026 0.0207 0.0237 0.0206 0.0201 0.0207 0.018 0.0206 0.0173
 0.016 0.0157 0.016 0.3185 0.2433 0.2977 0.2389 0.2219 0.2433
 0.1926 0.2389 0.1751 0.1598 0.1491 0.1565]
```
