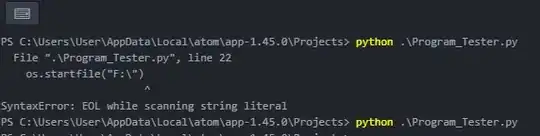

in the middle of project i am getting bellow error after invoking a function in my spark sql query

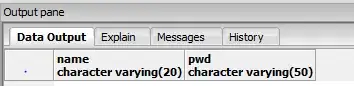

i have written a user define function which will take two string and concat them after concatenation it will take right most substring length of 5 depend on total string length(alternate method of right(string,integer) of sql server )

from pyspark.sql.types import*

def concatstring(xstring, ystring):

newvalstring = xstring+ystring

print newvalstring

if(len(newvalstring)==6):

stringvalue=newvalstring[1:6]

return stringvalue

if(len(newvalstring)==7):

stringvalue1=newvalstring[2:7]

return stringvalue1

else:

return '99999'

spark.udf.register ('rightconcat', lambda x,y:concatstring(x,y), StringType())

it works fine individually. now when i pass it in my spark sql query as column this exception occured the query is

the written query is

spark.sql("select d.BldgID,d.LeaseID,d.SuiteID,coalesce(BLDG.BLDGNAME,('select EmptyDefault from EmptyDefault')) as LeaseBldgName,coalesce(l.OCCPNAME,('select EmptyDefault from EmptyDefault'))as LeaseOccupantName, coalesce(l.DBA, ('select EmptyDefault from EmptyDefault')) as LeaseDBA, coalesce(l.CONTNAME, ('select EmptyDefault from EmptyDefault')) as LeaseContact,coalesce(l.PHONENO1, '')as LeasePhone1,coalesce(l.PHONENO2, '')as LeasePhone2,coalesce(l.NAME, '') as LeaseName,coalesce(l.ADDRESS, '') as LeaseAddress1,coalesce(l.ADDRESS2,'') as LeaseAddress2,coalesce(l.CITY, '')as LeaseCity, coalesce(l.STATE, ('select EmptyDefault from EmptyDefault'))as LeaseState,coalesce(l.ZIPCODE, '')as LeaseZip, coalesce(l.ATTENT, '') as LeaseAttention,coalesce(l.TTYPID, ('select EmptyDefault from EmptyDefault'))as LeaseTenantType,coalesce(TTYP.TTYPNAME, ('select EmptyDefault from EmptyDefault'))as LeaseTenantTypeName,l.OCCPSTAT as LeaseCurrentOccupancyStatus,l.EXECDATE as LeaseExecDate, l.RENTSTRT as LeaseRentStartDate,l.OCCUPNCY as LeaseOccupancyDate,l.BEGINDATE as LeaseBeginDate,l.EXPIR as LeaseExpiryDate,l.VACATE as LeaseVacateDate,coalesce(l.STORECAT, (select EmptyDefault from EmptyDefault)) as LeaseStoreCategory ,rightconcat('00000',cast(coalesce(SCAT.SORTSEQ,99999) as string)) as LeaseStoreCategorySortID from Dim_CMLease_primer d join LEAS l on l.BLDGID=d.BldgID and l.LEASID=d.LeaseID left outer join SUIT on SUIT.BLDGID=l.BLDGID and SUIT.SUITID=l.SUITID left outer join BLDG on BLDG.BLDGID= l.BLDGID left outer join SCAT on SCAT.STORCAT=l.STORECAT left outer join TTYP on TTYP.TTYPID = l.TTYPID").show()

i have uploaded the the query and after query state here. how could i solve this problem. Kindly guide me