I'm working with Scrapy and dataset (https://dataset.readthedocs.io/en/latest/quickstart.html#storing-data), which is a layer on top of SQLAlchemy, and I'm trying to load data into a SQLite table. This is a follow-up to "Sqlalchemy : Dynamically create table from Scrapy item".

Using the dataset package, I have:

    class DynamicSQLlitePipeline(object):

        def __init__(self, table_name):
            db_path = "sqlite:///" + settings.SETTINGS_PATH + "\\data.db"
            db = dataset.connect(db_path)
            self.table = db[table_name].table

        def process_item(self, item, spider):
            try:
                print('TEST DATASET..')
                self.table.insert(dict(name='John Doe', age=46, country='China'))
                print('INSERTED')
            except IntegrityError:
                print('THIS IS A DUP')
            return item

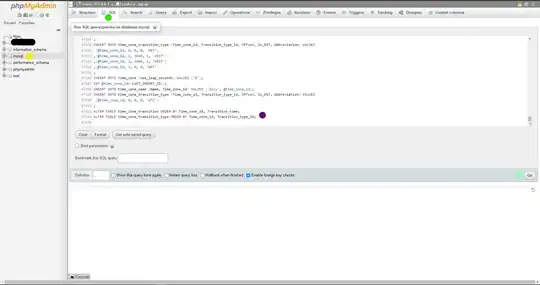

After running my spider, I see the print statements from the try/except block printed out, with no errors. But once the run completes and I look in the table, I see what is shown in the screenshot: no data is in the table. What am I doing wrong?
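To rule out a display problem in the database viewer, the row count can also be checked directly. The following is only a minimal sketch using the standard-library `sqlite3` module; the helper name `count_rows` and the example path and table name in the usage note are assumptions for illustration and are not part of the pipeline above.

```python
import sqlite3


def count_rows(db_path, table_name):
    """Return the number of rows in table_name, or None if the table does not exist.

    count_rows is a hypothetical helper, not part of dataset or Scrapy.
    """
    conn = sqlite3.connect(db_path)
    try:
        # Look the table up in sqlite_master so that a missing table is
        # reported separately from an empty one.
        exists = conn.execute(
            "SELECT 1 FROM sqlite_master WHERE type = 'table' AND name = ?",
            (table_name,),
        ).fetchone()
        if exists is None:
            return None
        # Identifiers cannot be bound as parameters, so quote the name instead.
        quoted = '"' + table_name.replace('"', '""') + '"'
        return conn.execute(f"SELECT COUNT(*) FROM {quoted}").fetchone()[0]
    finally:
        conn.close()
```

For example, `count_rows("data.db", "my_table")` (both names are placeholders) returning `0` would confirm that the table exists but is empty, while `None` would mean the table was never created in that file.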