Starting with some "lookup" data, there are two approaches:

Method #1 -- using a lookup DataFrame

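    // use a DataFrame (via a join)
    // outside spark-shell, first import org.apache.spark.sql.functions.{expr, udf}
    // and the implicits that provide toDF
    val lookupDF = sc.parallelize(Seq(
      ("banana", "yellow"),
      ("apple", "red"),
      ("grape", "purple"),
      ("blueberry", "blue")
    )).toDF("SomeKeys", "SomeValues")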

Method #2 -- using a map in a UDF

    // turn the above DataFrame into a map which a UDF uses
    // (collect once so each key stays paired with its own value)
    val KeyValueMap = lookupDF.collect().map(row => row.getString(0) -> row.getString(1)).toMap

    def ThingToColor(key: String): String = {
      if (key == null) return ""
      val firstword = key.split(" ")(0) // fragile: assumes the key is the first space-separated word
      KeyValueMap.getOrElse(firstword, "not found!")
    }

    val ThingToColorUDF = udf(ThingToColor(_: String): String)
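
The lookup logic itself does not depend on Spark, so it can be checked in a plain Scala session before it is wrapped in a UDF. The following is a minimal sketch under that assumption; `colorMap` and `thingToColor` are illustrative names, and the whitespace trimming is an addition that the original function does not have.

```scala
// Plain-Scala sketch of the first-word lookup; no Spark required.
// colorMap and thingToColor are illustrative names, not from the original answer.
val colorMap: Map[String, String] = Map(
  "banana"    -> "yellow",
  "apple"     -> "red",
  "grape"     -> "purple",
  "blueberry" -> "blue"
)

def thingToColor(key: String): String = {
  // Option(null) is None, so null input returns "" like the original function.
  Option(key) match {
    case None => ""
    case Some(text) =>
      // Assumption: trim and split on any whitespace run, which is slightly
      // more forgiving than split(" ") but still only looks at the first word.
      val firstWord = text.trim.split("\\s+")(0)
      colorMap.getOrElse(firstWord, "not found!")
  }
}
```

With this version, `thingToColor("  grape nuts")` resolves to `"purple"`, whereas splitting on a single space would see an empty first word and miss the key.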

Take a sample data frame of things that will be looked up:

    val thingsDF = sc.parallelize(Seq(
      "blueberry muffin",
      "grape nuts",
      "apple pie",
      "rutabaga pudding"
    )).toDF("SomeThings")

Method #1 is to join on the lookup DataFrame

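Here, rlike does the matching: each SomeKeys value is treated as a regular expression and searched for anywhere in SomeThings. Rows with no match get null in the lookup columns, and both columns of the lookup DataFrame are added to the result.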

    val result_1_DF = thingsDF.join(lookupDF, expr("SomeThings rlike SomeKeys"), "left_outer")

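If Method #1 should end up with a single extra column, like the UDF approach, the join result can be trimmed afterwards. The sketch below assumes Spark 2.x or later and a local `SparkSession`; `joinedDF`, `tidyDF` and the `"not found!"` placeholder are illustrative choices, and `coalesce` and `lit` come from `org.apache.spark.sql.functions`.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.{coalesce, expr, lit}

// Assumption: a local session for illustration; spark-shell already provides `spark`.
val spark = SparkSession.builder().master("local[*]").appName("lookup-join-sketch").getOrCreate()
import spark.implicits._

val lookupDF = Seq(
  ("banana", "yellow"),
  ("apple", "red"),
  ("grape", "purple"),
  ("blueberry", "blue")
).toDF("SomeKeys", "SomeValues")

val thingsDF = Seq("blueberry muffin", "grape nuts", "apple pie", "rutabaga pudding").toDF("SomeThings")

// Left outer join on a regex match: unmatched rows carry null in SomeKeys and SomeValues.
val joinedDF = thingsDF.join(lookupDF, expr("SomeThings rlike SomeKeys"), "left_outer")

// Keep one output column and replace null with a placeholder.
val tidyDF = joinedDF.select(
  $"SomeThings",
  coalesce($"SomeValues", lit("not found!")).as("AddValues")
)

tidyDF.show()
```

Because the match is a join, a thing that contains more than one key would produce one row per matching key, which the UDF approach never does.
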
Method #2 is to add a column using the UDF

Here, only one column is added, and the UDF can return a non-null value when there is no match. However, the map has to be serialized and sent to the workers in the cluster, so if the lookup data is very large this step may fail.

    val result_2_DF = thingsDF.withColumn("AddValues", ThingToColorUDF($"SomeThings"))
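
One common way to reduce how often the map is shipped is a Spark broadcast variable, which sends it to each executor once instead of with every task. The sketch below assumes Spark 2.x or later and a local `SparkSession`; `broadcastMap` and `thingToColorUDF` are illustrative names. It does not lift the size limit: the map still has to fit in memory on the driver and on every executor, so for very large lookup data the join remains the safer route.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.udf

// Assumption: a local session for illustration; spark-shell already provides `spark`.
val spark = SparkSession.builder().master("local[*]").appName("lookup-udf-sketch").getOrCreate()
import spark.implicits._

val keyValueMap = Map(
  "banana" -> "yellow",
  "apple" -> "red",
  "grape" -> "purple",
  "blueberry" -> "blue"
)

// Ship the map to each executor once rather than inside every task closure.
val broadcastMap = spark.sparkContext.broadcast(keyValueMap)

// Same first-word lookup as the original UDF, reading from the broadcast value.
val thingToColorUDF = udf { (key: String) =>
  if (key == null) ""
  else broadcastMap.value.getOrElse(key.split(" ")(0), "not found!")
}

val thingsDF = Seq("blueberry muffin", "grape nuts", "apple pie", "rutabaga pudding").toDF("SomeThings")

val resultDF = thingsDF.withColumn("AddValues", thingToColorUDF($"SomeThings"))
resultDF.show()
```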

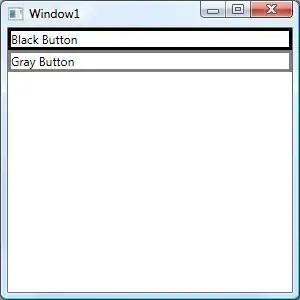

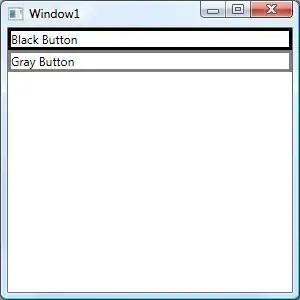

Which gives you:

In my case the lookup data had over 1 million values, so Method #1 was my only choice.