Assume a system with multiple concurrent producers, each of which strives to persist some graph of objects that includes the following common entities, uniquely identifiable by their names:

    CREATE TABLE CommonEntityGroup(
        Id INT NOT NULL IDENTITY(1, 1) PRIMARY KEY,
        Name NVARCHAR(100) NOT NULL
    );
    GO

    CREATE UNIQUE INDEX IX_CommonEntityGroup_Name
        ON CommonEntityGroup(Name)
    GO

    CREATE TABLE CommonEntity(
        Id INT NOT NULL IDENTITY(1, 1) PRIMARY KEY,
        Name NVARCHAR(100) NOT NULL,
        CommonEntityGroupId INT NOT NULL,
        CONSTRAINT FK_CommonEntity_CommonEntityGroup FOREIGN KEY(CommonEntityGroupId)
            REFERENCES CommonEntityGroup(Id)
    );
    GO

    CREATE UNIQUE INDEX IX_CommonEntity_CommonEntityGroupId_Name
        ON CommonEntity(CommonEntityGroupId, Name)
    GO

For example, producer A saves some CommonEntityMeetings, while producer B saves CommonEntitySets. Each of them has to persist the CommonEntity objects related to its particular items.

Basically, the key points are:

- There are independent producers.

- They operate concurrently.

- Theoretically (though that may change and is not yet exactly true now) they will operate through the same web service (ASP.NET Web API), just with their respective endpoints/"resources". So ideally the proposed solution should not rely on that.

- They strive to persist different graphs of objects that contain possibly not yet existing CommonEntity/CommonEntityGroup objects.

- CommonEntity/CommonEntityGroup are immutable once created and will never be modified or removed thereafter.

- CommonEntity/CommonEntityGroup are unique according to some of their properties (Name and related common entity if any (e.g. CommonEntity is unique by CommonEntity.Name + CommonEntityGroup.Name)).

- Producers do not know/care about IDs of those CommonEntities - they usually just pass DTOs with Names (unique) of those CommonEntities and related information. So any Common(Group)Entity has to be found/created by Name.

- There is a certain possibility that producers will try to create the same CommonEntity/CommonEntityGroup at the same time.

- Though it is much more likely that such CommonEntity/CommonEntityGroup objects will already exist in db.

So, with Entity Framework (database first, though it probably doesn't matter) as the DAL and SQL Server as storage, what is an efficient and reliable way to ensure that all those producers successfully persist their intersecting object graphs at the same time?

Taking into account that the UNIQUE INDEX already ensures that there won't be duplicate CommonEntities (the Name, GroupName pair is unique), I can see the following solutions:

- Ensure that each CommonEntity/CommonEntityGroup is found or created and saved (SaveChanges()) before building the rest of the object graph.

In such a case, when SaveChanges is called for the related entities, there won't be any index violations due to other producers creating the same entities a moment before.

To achieve this I will have something like:

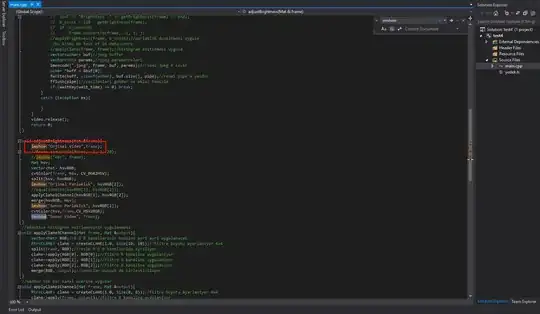

    public class CommonEntityGroupRepository // sort of
    {
        public CommonEntityGroupRepository(EntitiesDbContext db) ...

        // CommonEntityRepository will use this class/method internally to create the parent CommonEntityGroup.
        public CommonEntityGroup FindOrCreateAndSave(String groupName)
        {
            return
                this.TryFind(groupName) ?? // db.FirstOrDefault(...)
                this.CreateAndSave(groupName);
        }

        private CommonEntityGroup CreateAndSave(String groupName)
        {
            var group = this.Db.CommonEntityGroups.Create();
            group.Name = groupName;
            this.Db.CommonEntityGroups.Add(group);

            try
            {
                this.Db.SaveChanges();
                return group;
            }
            catch (DbUpdateException dbExc)
            {
                // Check that it was a Name index violation (perhaps make indices IGNORE_DUP_KEY).
                // Detach the rejected entity so that later SaveChanges calls do not try to insert it again.
                this.Db.Entry(group).State = EntityState.Detached;
                return this.Find(groupName); // Like TryFind, but throws an exception if the group is not found.
            }
        }
    }

With this approach there will be multiple calls to SaveChanges and each CommonEntity will have its own sort of a Repository, though it seems to be the most reliable solution.
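The "check that it was a Name index violation" step in the catch block could be sketched roughly as follows. This assumes EF6 on top of System.Data.SqlClient, where the DbUpdateException wraps a SqlException somewhere in its InnerException chain; the class and method names (SqlErrors, IsUniqueViolation) are made up for illustration. SQL Server reports error 2601 for a duplicate key in a unique index and 2627 for a unique/primary key constraint violation.

```csharp
using System;
using System.Data.SqlClient;

// Hypothetical helper, not part of EF or of the code above.
public static class SqlErrors
{
    // 2601: cannot insert duplicate key row in an object with a unique index.
    private const int DuplicateKeyInUniqueIndex = 2601;
    // 2627: violation of a UNIQUE or PRIMARY KEY constraint.
    private const int UniqueConstraintViolation = 2627;

    // Walks the InnerException chain looking for a SqlException
    // that carries one of the duplicate-key error numbers.
    public static bool IsUniqueViolation(Exception exception)
    {
        for (var current = exception; current != null; current = current.InnerException)
        {
            var sqlException = current as SqlException;
            if (sqlException == null)
            {
                continue;
            }

            foreach (SqlError error in sqlException.Errors)
            {
                if (error.Number == DuplicateKeyInUniqueIndex ||
                    error.Number == UniqueConstraintViolation)
                {
                    return true;
                }
            }
        }

        return false;
    }
}
```

In the catch block this would be used as `if (!SqlErrors.IsUniqueViolation(dbExc)) throw;` before falling back to Find, so that unrelated update failures are not swallowed.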

- Just create the entire graph and rebuild it from scratch if Index violations occur

A bit ugly and inefficient (with 10 CommonEntities we may have to retry it 10 times), but simple and more or less reliable.
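A minimal sketch of that rebuild-and-retry loop, assuming the caller supplies a delegate that creates a fresh context, rebuilds the whole graph and calls SaveChanges on every attempt, plus a predicate that recognises an index violation. GraphRetry, buildAndSave and isConflict are hypothetical names, not EF API.

```csharp
using System;

// Hypothetical helper illustrating "rebuild from scratch on index violation".
public static class GraphRetry
{
    // buildAndSave: rebuilds the entire graph (ideally on a new DbContext) and saves it.
    // isConflict:   returns true when the exception is a unique index violation.
    // maxAttempts:  total number of tries, including the first one.
    public static T Execute<T>(Func<T> buildAndSave, Func<Exception, bool> isConflict, int maxAttempts)
    {
        if (buildAndSave == null) throw new ArgumentNullException(nameof(buildAndSave));
        if (isConflict == null) throw new ArgumentNullException(nameof(isConflict));
        if (maxAttempts < 1) throw new ArgumentOutOfRangeException(nameof(maxAttempts));

        for (var attempt = 1; ; attempt++)
        {
            try
            {
                return buildAndSave();
            }
            catch (Exception ex) when (attempt < maxAttempts && isConflict(ex))
            {
                // Another producer created the same entity first.
                // Loop again: the next attempt should find the existing row.
            }
        }
    }
}
```

On the last attempt, or for any exception that is not a conflict, the exception propagates to the caller. With up to 10 CommonEntities in a graph, maxAttempts would have to be at least 11 to cover the worst case described above.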

- Just create the entire graph and replace duplicate entries if Index violations occur

Not sure that there is an easy and reliable way to replace duplicate entries in more or less complex object graphs, though both a case-specific and a more generic reflection-based solution can be implemented.

Still, like the previous solution, it may require multiple retries.

- Try to move this logic to database (SP)

I doubt that it will be any easier to handle inside a stored procedure. It will be the same optimistic or pessimistic approaches, just implemented on the database side.

Though it may provide better performance (not an issue in this case) and put the insertion logic into one common place.

- Using the SERIALIZABLE isolation level or TABLOCKX+SERIALIZABLE table hints in a stored procedure - it should definitely work, but I'd prefer not to lock the tables exclusively more than is actually necessary, because an actual race is quite rare. And as already mentioned in the title, I'd like to find some optimistic concurrency approach.
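For comparison, the isolation-level part of that pessimistic option can also be expressed from the client side. The sketch below assumes that the find-or-create logic is passed in as a delegate and that the SQL connection it opens enlists in the ambient transaction (the default behaviour of SqlClient, as far as I know). SerializableScope and findOrCreate are hypothetical names, and the TABLOCKX hint has no equivalent here.

```csharp
using System;
using System.Transactions;

// Hypothetical wrapper: runs find-or-create inside a SERIALIZABLE transaction.
public static class SerializableScope
{
    public static T Run<T>(Func<T> findOrCreate)
    {
        var options = new TransactionOptions
        {
            // Range locks taken by the lookup keep other sessions from
            // inserting the same key until this transaction completes.
            IsolationLevel = IsolationLevel.Serializable,
            Timeout = TimeSpan.FromSeconds(30)
        };

        using (var scope = new TransactionScope(TransactionScopeOption.Required, options))
        {
            T result = findOrCreate();
            scope.Complete(); // Commit; without this call the scope rolls back on Dispose.
            return result;
        }
    }
}
```

Two producers that both look up the same missing name under SERIALIZABLE may still collide when they insert, with one of them possibly rolled back as a deadlock victim, so some retry handling would probably be needed here as well.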

I would probably try the first solution, but perhaps there are better alternatives or some potential pitfalls.