I'm trying to implement the gradient descent algorithm from scratch on a toy problem. My code always returns a vector of NaN's:

from sklearn.linear_model import LinearRegression

import numpy as np

import matplotlib.pyplot as plt

np.random.seed(45)

x = np.linspace(0, 1000, num=1000)

y = 3*x + 2 + np.random.randn(len(x))

# sklearn output - This works (returns intercept = 1.6, coef = 3)

lm = LinearRegression()

lm.fit(x.reshape(-1, 1), y.reshape(-1, 1))

print("Intercept = {:.2f}, Coef = {:.2f}".format(lm.coef_[0][0], lm.intercept_[0]))

# BGD output

theta = np.array((0, 0)).reshape(-1, 1)

X = np.hstack([np.ones_like(x.reshape(-1, 1)), x.reshape(-1, 1)]) # [1, x]

Y = y.reshape(-1, 1) # Column vector

alpha = 0.05

for i in range(100):

# Update: theta <- theta - alpha * [X.T][X][theta] - [X.T][Y]

h = np.dot(X, theta) # Hypothesis

loss = h - Y

theta = theta - alpha*np.dot(X.T, loss)

theta

The sklearn part runs fine, so I must be doing something wrong in the for loop. I've tried various different alpha values and none of them converge.

The problem is theta keeps getting bigger and bigger throughout the loop, and eventually becomes too big for python to store.

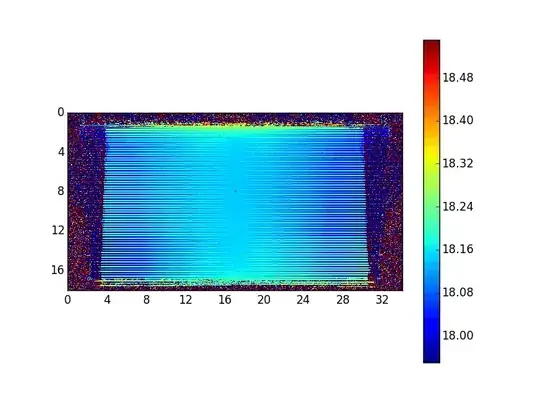

Here's a contour plot of the cost function:

J = np.dot((np.dot(X, theta) - y).T, (np.dot(X, theta) - y))

plt.contour(J)

Clearly there's no minimum here. Where have I gone wrong?

Thanks