I am totally new to OpenCV and have just started to dive into it, so I need a little help.

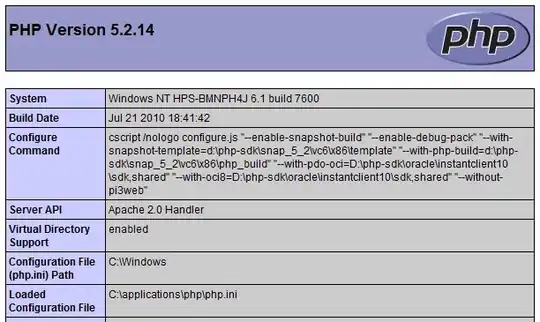

So I want to combine these 2 images:

I would like the 2 images to match along their edges (ignoring the far right part of the image for now).

Can anyone please point me in the right direction? I have tried using the findTransformECC function. Here's my implementation:

    cv::Mat im1 = [imageArray[1] CVMat3];
    cv::Mat im2 = [imageArray[0] CVMat3];

    // Convert images to grayscale
    cv::Mat im1_gray, im2_gray;
    cvtColor(im1, im1_gray, CV_BGR2GRAY);
    cvtColor(im2, im2_gray, CV_BGR2GRAY);

    // Define the motion model
    const int warp_mode = cv::MOTION_AFFINE;

    // Set a 2x3 or 3x3 warp matrix depending on the motion model.
    cv::Mat warp_matrix;

    // Initialize the matrix to identity
    if ( warp_mode == cv::MOTION_HOMOGRAPHY )
        warp_matrix = cv::Mat::eye(3, 3, CV_32F);
    else
        warp_matrix = cv::Mat::eye(2, 3, CV_32F);

    // Specify the number of iterations.
    int number_of_iterations = 50;

    // Specify the threshold of the increment
    // in the correlation coefficient between two iterations
    double termination_eps = 1e-10;

    // Define termination criteria
    cv::TermCriteria criteria (cv::TermCriteria::COUNT+cv::TermCriteria::EPS, number_of_iterations, termination_eps);

    // Run the ECC algorithm. The results are stored in warp_matrix.
    findTransformECC(
        im1_gray,
        im2_gray,
        warp_matrix,
        warp_mode,
        criteria
    );

    // Storage for warped image.
    cv::Mat im2_aligned;

    if (warp_mode != cv::MOTION_HOMOGRAPHY)
        // Use warpAffine for Translation, Euclidean and Affine
        warpAffine(im2, im2_aligned, warp_matrix, im1.size(), cv::INTER_LINEAR + cv::WARP_INVERSE_MAP);
    else
        // Use warpPerspective for Homography
        warpPerspective (im2, im2_aligned, warp_matrix, im1.size(),cv::INTER_LINEAR + cv::WARP_INVERSE_MAP);

    UIImage* result = [UIImage imageWithCVMat:im2_aligned];
    return result;

I have tried increasing and decreasing termination_eps and number_of_iterations, but that didn't make much of a difference.

So here's the result:

What can I do to improve my result?

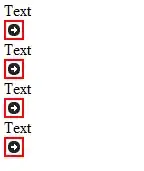

EDIT: I have marked the problematic edges with red circles. The goal is to warp the bottom image so that its lines match the lines of the image above:

I did a little bit of research and I'm afraid the findTransformECC function won't give me the result I'd like to have :-(

Something important to add:

I actually have an array of those image "stripes", 8 in this case. They all look similar to the images shown here, and they all need to be processed so that their lines match. I have tried experimenting with the stitch function of OpenCV, but the results were horrible.

EDIT:

Here are the 3 source images:

The result should be something like this:

I transformed every image along the lines that should match. Lines that are too far apart can be ignored (the shadow and the piece of road in the right portion of the image).