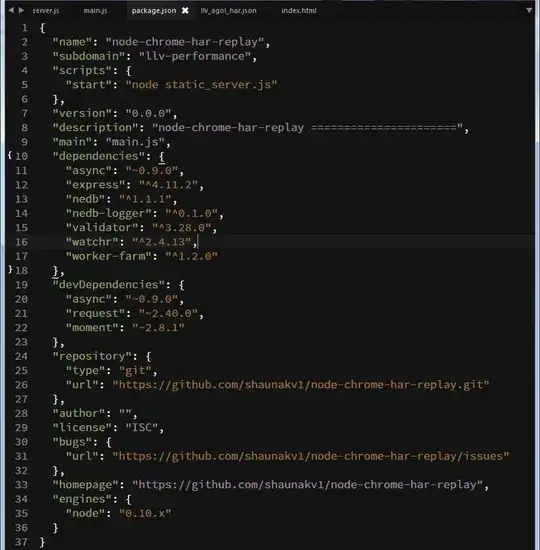

I am training the voc-fcn32s model on my own data, which has an imbalanced number of samples per class. This is the learning curve for 18,000 iterations:

As you can see, the training curve decreases at some points and then fluctuates. Some online recommendations suggest reducing the learning rate or changing the bias value in the fillers of the convolution layers. So I changed train_val.prototxt as follows for these two layers:

    ....
    layer {
      name: "score_fr"
      type: "Convolution"
      bottom: "fc7"
      top: "score_fr"
      param {
        lr_mult: 1
        decay_mult: 1
      }
      param {
        lr_mult: 2
        decay_mult: 0
      }
      convolution_param {
        num_output: 5 # the number of classes
        pad: 0
        kernel_size: 1
        weight_filler {
          type: "xavier"
        }
        bias_filler {
          type: "constant"
          value: 0.5 #+
        }
      }
    }
    layer {
      name: "upscore"
      type: "Deconvolution"
      bottom: "score_fr"
      top: "upscore"
      param {
        lr_mult: 0
      }
      convolution_param {
        num_output: 5 # the number of classes
        bias_term: true #false
        kernel_size: 64
        stride: 32
        group: 5 #2
        weight_filler: {
          type: "bilinear"
          value: 0.5 #+
        }
      }
    }
    ....

Not much seems to have changed in the behavior of the model.
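
For context on the `value` entry added to the bilinear filler: as far as I can tell from Caffe's `filler.hpp`, the "bilinear" filler derives its weights from the kernel size alone, so an extra `value` would likely have no effect. The sketch below reproduces that formula in plain Python; the function name `bilinear_kernel` is my own and is not part of Caffe.

```python
import math

def bilinear_kernel(kernel_size):
    """Return a kernel_size x kernel_size bilinear interpolation kernel.

    Follows the formula that (to my understanding) Caffe's "bilinear"
    filler applies to each 2-D slice of the weight blob.
    """
    f = int(math.ceil(kernel_size / 2.0))      # upsampling factor
    c = (2 * f - 1 - f % 2) / (2.0 * f)        # centre of the kernel
    row = [1 - abs(x / float(f) - c) for x in range(kernel_size)]
    # The 2-D kernel is the outer product of the 1-D triangle with itself.
    return [[wy * wx for wx in row] for wy in row]

kernel = bilinear_kernel(64)                   # kernel_size of "upscore"
mean_weight = sum(sum(r) for r in kernel) / (64 * 64)
print(mean_weight)                             # 0.25
```

The mean weight of 0.25 agrees with the `upscore, param blob 0 data: 0.25` line in the debug log further down, which suggests the filler produced the standard bilinear kernel regardless of `value: 0.5`.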

1) Am I adding these values to weight_filler the right way?

2) Should I change the learning policy in the solver from fixed to step, reducing the learning rate by a factor of 10 at each step? Will that help with this issue?
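
To make the second question concrete, here is a minimal sketch of what the `step` policy computes, based on the formula in Caffe's solver documentation (`base_lr * gamma ^ floor(iter / stepsize)`). The numbers in the usage lines are hypothetical and are not taken from my solver.

```python
def step_lr(base_lr, gamma, stepsize, iteration):
    """Learning rate under Caffe's "step" policy.

    The rate is multiplied by gamma once every `stepsize` iterations.
    With lr_policy "fixed", the rate would stay at base_lr throughout.
    """
    return base_lr * gamma ** (iteration // stepsize)

# Hypothetical settings: drop the rate by a factor of 10 every 5000 iterations.
for it in (0, 4999, 5000, 10000, 17999):
    print(it, step_lr(base_lr=1e-10, gamma=0.1, stepsize=5000, iteration=it))
```

Whether such a schedule helps would depend on whether the fluctuation is really caused by a learning rate that is too high late in training.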

I am worried that I am doing something wrong and that my model cannot converge. Does anyone have any suggestions? What important things should I consider while training the model? What changes can I make to the solver and train_val so that the model converges?

I really appreciate your help.

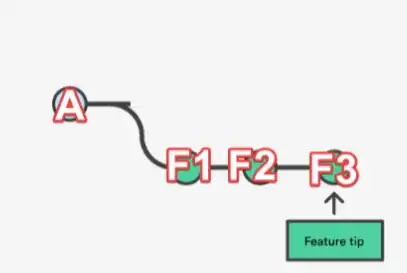

More details after adding BatchNorm layer

Thanks to @Shai and @Jonathan for suggesting that I add BatchNorm layers.

I added BatchNorm layers before the ReLU layers. Here is one sample:

    layer {
      name: "conv1_1"
      type: "Convolution"
      bottom: "data"
      top: "conv1_1"
      param {
        lr_mult: 1
        decay_mult: 1
      }
      param {
        lr_mult: 2
        decay_mult: 0
      }
      convolution_param {
        num_output: 64
        pad: 100
        kernel_size: 3
        stride: 1
      }
    }
    layer {
      name: "bn1_1"
      type: "BatchNorm"
      bottom: "conv1_1"
      top: "bn1_1"
      batch_norm_param {
        use_global_stats: false
      }
      param {
        lr_mult: 0
      }
      include {
        phase: TRAIN
      }
    }
    layer {
      name: "bn1_1"
      type: "BatchNorm"
      bottom: "conv1_1"
      top: "bn1_1"
      batch_norm_param {
        use_global_stats: true
      }
      param {
        lr_mult: 0
      }
      include {
        phase: TEST
      }
    }
    layer {
      name: "scale1_1"
      type: "Scale"
      bottom: "bn1_1"
      top: "bn1_1"
      scale_param {
        bias_term: true
      }
    }
    layer {
      name: "relu1_1"
      type: "ReLU"
      bottom: "bn1_1"
      top: "bn1_1"
    }
    layer {
      name: "conv1_2"
      type: "Convolution"
      bottom: "bn1_1"
      top: "conv1_2"
      param {
        lr_mult: 1
        decay_mult: 1
      }
      param {
        lr_mult: 2
        decay_mult: 0
      }
      convolution_param {
        num_output: 64
        pad: 1
        kernel_size: 3
        stride: 1
      }
    }

As far as I understand from the docs, I can add only one parameter to the BatchNorm layer instead of three, since I have single-channel images. Is my understanding correct? That is:

    param {
      lr_mult: 0
    }
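
To frame this question: as I now understand Caffe's `batch_norm_layer.cpp`, the three `param` entries correspond to three internal blobs (accumulated mean, accumulated variance, and a moving-average factor), which is also why the debug log below lists `param blob 0`, `1` and `2` for each BatchNorm layer. They would therefore not depend on the number of image channels. The following is a rough sketch of how the stored statistics appear to be used when `use_global_stats: true`; the function name and the sample values are made up for illustration.

```python
import math

def batchnorm_global(x, mean_blob, var_blob, factor_blob, eps=1e-5):
    """Normalize the values of one channel using stored statistics.

    mean_blob, var_blob: accumulated statistics (param blobs 0 and 1)
    factor_blob: accumulated moving-average factor (param blob 2)
    The stored sums are divided by the factor before use.
    """
    scale = 0.0 if factor_blob == 0 else 1.0 / factor_blob
    mean = mean_blob * scale
    var = var_blob * scale
    return [(v - mean) / math.sqrt(var + eps) for v in x]

# Illustrative values only: stored sums 4.0 and 2.0 with factor 2.0
# correspond to mean 2.0 and variance 1.0.
print(batchnorm_global([1.0, 3.0], mean_blob=4.0, var_blob=2.0, factor_blob=2.0))
```

None of these three blobs is updated by gradient descent, which would explain why the docs set `lr_mult: 0` for each of them.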

Should I add more parameters to the Scale layer, as the documentation mentions? What do these parameters of the Scale layer mean? For example:

    layer { bottom: 'layerx-bn' top: 'layerx-bn' name: 'layerx-bn-scale' type: 'Scale',
      scale_param {
        bias_term: true
        axis: 1      # scale separately for each channel
        num_axes: 1  # ... but not spatially (default)
        filler { type: 'constant' value: 1 }           # initialize scaling to 1
        bias_filler { type: 'constant' value: 0.001 }  # initialize bias
    }}
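
My current reading of these parameters is that, with `axis: 1` and `num_axes: 1`, the Scale layer learns one multiplier and one bias per channel and applies them at every spatial position. A small sketch of that reading, using nested lists in N x C x H x W order (the function name `scale_layer` and the sample numbers are only for illustration):

```python
def scale_layer(blob, gamma, beta):
    """Apply a per-channel scale and bias to a blob in N x C x H x W layout.

    gamma and beta each hold one value per channel (length C),
    matching axis: 1, num_axes: 1 and bias_term: true.
    """
    return [
        [
            [[gamma[c] * v + beta[c] for v in row] for row in channel]
            for c, channel in enumerate(sample)
        ]
        for sample in blob
    ]

# One sample, two channels, 1 x 2 spatial size.
blob = [[[[1.0, 2.0]], [[3.0, 4.0]]]]
print(scale_layer(blob, gamma=[1.0, 2.0], beta=[0.0, 0.5]))
# [[[[1.0, 2.0]], [[6.5, 8.5]]]]
```

Under this reading, `filler` and `bias_filler` only set the starting values of the multiplier and the bias.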

and this is  of the network. I am not sure how much I am wrong/right. Have I added correctly?

The other question is about debug_info. What do these lines of the log file mean after enabling debug_info? What do diff and data mean? And why are the values 0? Is my net working correctly?

    I0123 23:17:49.498327 15230 solver.cpp:228] Iteration 50, loss = 105465
    I0123 23:17:49.498337 15230 solver.cpp:244] Train net output #0: accuracy = 0.643982
    I0123 23:17:49.498349 15230 solver.cpp:244] Train net output #1: loss = 105446 (* 1 = 105446 loss)
    I0123 23:17:49.498359 15230 sgd_solver.cpp:106] Iteration 50, lr = 1e-11
    I0123 23:19:12.680325 15230 net.cpp:608] [Forward] Layer data, top blob data data: 34.8386
    I0123 23:19:12.680615 15230 net.cpp:608] [Forward] Layer data_data_0_split, top blob data_data_0_split_0 data: 34.8386
    I0123 23:19:12.680670 15230 net.cpp:608] [Forward] Layer data_data_0_split, top blob data_data_0_split_1 data: 34.8386
    I0123 23:19:12.680778 15230 net.cpp:608] [Forward] Layer label, top blob label data: 0
    I0123 23:19:12.680829 15230 net.cpp:608] [Forward] Layer label_label_0_split, top blob label_label_0_split_0 data: 0
    I0123 23:19:12.680896 15230 net.cpp:608] [Forward] Layer label_label_0_split, top blob label_label_0_split_1 data: 0
    I0123 23:19:12.688591 15230 net.cpp:608] [Forward] Layer conv1_1, top blob conv1_1 data: 0
    I0123 23:19:12.688695 15230 net.cpp:620] [Forward] Layer conv1_1, param blob 0 data: 0
    I0123 23:19:12.688742 15230 net.cpp:620] [Forward] Layer conv1_1, param blob 1 data: 0
    I0123 23:19:12.721791 15230 net.cpp:608] [Forward] Layer bn1_1, top blob bn1_1 data: 0
    I0123 23:19:12.721853 15230 net.cpp:620] [Forward] Layer bn1_1, param blob 0 data: 0
    I0123 23:19:12.721890 15230 net.cpp:620] [Forward] Layer bn1_1, param blob 1 data: 0
    I0123 23:19:12.721901 15230 net.cpp:620] [Forward] Layer bn1_1, param blob 2 data: 96.1127
    I0123 23:19:12.996196 15230 net.cpp:620] [Forward] Layer scale4_1, param blob 0 data: 1
    I0123 23:19:12.996237 15230 net.cpp:620] [Forward] Layer scale4_1, param blob 1 data: 0
    I0123 23:19:12.996939 15230 net.cpp:608] [Forward] Layer relu4_1, top blob bn4_1 data: 0
    I0123 23:19:13.012020 15230 net.cpp:608] [Forward] Layer conv4_2, top blob conv4_2 data: 0
    I0123 23:19:13.012403 15230 net.cpp:620] [Forward] Layer conv4_2, param blob 0 data: 0
    I0123 23:19:13.012446 15230 net.cpp:620] [Forward] Layer conv4_2, param blob 1 data: 0
    I0123 23:19:13.015959 15230 net.cpp:608] [Forward] Layer bn4_2, top blob bn4_2 data: 0
    I0123 23:19:13.016005 15230 net.cpp:620] [Forward] Layer bn4_2, param blob 0 data: 0
    I0123 23:19:13.016046 15230 net.cpp:620] [Forward] Layer bn4_2, param blob 1 data: 0
    I0123 23:19:13.016054 15230 net.cpp:620] [Forward] Layer bn4_2, param blob 2 data: 96.1127
    I0123 23:19:13.017211 15230 net.cpp:608] [Forward] Layer scale4_2, top blob bn4_2 data: 0
    I0123 23:19:13.017251 15230 net.cpp:620] [Forward] Layer scale4_2, param blob 0 data: 1
    I0123 23:19:13.017292 15230 net.cpp:620] [Forward] Layer scale4_2, param blob 1 data: 0
    I0123 23:19:13.017980 15230 net.cpp:608] [Forward] Layer relu4_2, top blob bn4_2 data: 0
    I0123 23:19:13.032080 15230 net.cpp:608] [Forward] Layer conv4_3, top blob conv4_3 data: 0
    I0123 23:19:13.032452 15230 net.cpp:620] [Forward] Layer conv4_3, param blob 0 data: 0
    I0123 23:19:13.032493 15230 net.cpp:620] [Forward] Layer conv4_3, param blob 1 data: 0
    I0123 23:19:13.036018 15230 net.cpp:608] [Forward] Layer bn4_3, top blob bn4_3 data: 0
    I0123 23:19:13.036064 15230 net.cpp:620] [Forward] Layer bn4_3, param blob 0 data: 0
    I0123 23:19:13.036105 15230 net.cpp:620] [Forward] Layer bn4_3, param blob 1 data: 0
    I0123 23:19:13.036114 15230 net.cpp:620] [Forward] Layer bn4_3, param blob 2 data: 96.1127
    I0123 23:19:13.038148 15230 net.cpp:608] [Forward] Layer scale4_3, top blob bn4_3 data: 0
    I0123 23:19:13.038189 15230 net.cpp:620] [Forward] Layer scale4_3, param blob 0 data: 1
    I0123 23:19:13.038230 15230 net.cpp:620] [Forward] Layer scale4_3, param blob 1 data: 0
    I0123 23:19:13.038969 15230 net.cpp:608] [Forward] Layer relu4_3, top blob bn4_3 data: 0
    I0123 23:19:13.039417 15230 net.cpp:608] [Forward] Layer pool4, top blob pool4 data: 0
    I0123 23:19:13.043354 15230 net.cpp:608] [Forward] Layer conv5_1, top blob conv5_1 data: 0
    I0123 23:19:13.128515 15230 net.cpp:608] [Forward] Layer score_fr, top blob score_fr data: 0.000975524
    I0123 23:19:13.128569 15230 net.cpp:620] [Forward] Layer score_fr, param blob 0 data: 0.0135222
    I0123 23:19:13.128607 15230 net.cpp:620] [Forward] Layer score_fr, param blob 1 data: 0.000975524
    I0123 23:19:13.129696 15230 net.cpp:608] [Forward] Layer upscore, top blob upscore data: 0.000790174
    I0123 23:19:13.129734 15230 net.cpp:620] [Forward] Layer upscore, param blob 0 data: 0.25
    I0123 23:19:13.130656 15230 net.cpp:608] [Forward] Layer score, top blob score data: 0.000955503
    I0123 23:19:13.130709 15230 net.cpp:608] [Forward] Layer score_score_0_split, top blob score_score_0_split_0 data: 0.000955503
    I0123 23:19:13.130754 15230 net.cpp:608] [Forward] Layer score_score_0_split, top blob score_score_0_split_1 data: 0.000955503
    I0123 23:19:13.146767 15230 net.cpp:608] [Forward] Layer accuracy, top blob accuracy data: 1
    I0123 23:19:13.148967 15230 net.cpp:608] [Forward] Layer loss, top blob loss data: 105320
    I0123 23:19:13.149173 15230 net.cpp:636] [Backward] Layer loss, bottom blob score_score_0_split_1 diff: 0.319809
    I0123 23:19:13.149323 15230 net.cpp:636] [Backward] Layer score_score_0_split, bottom blob score diff: 0.319809
    I0123 23:19:13.150310 15230 net.cpp:636] [Backward] Layer score, bottom blob upscore diff: 0.204677
    I0123 23:19:13.152452 15230 net.cpp:636] [Backward] Layer upscore, bottom blob score_fr diff: 253.442
    I0123 23:19:13.153218 15230 net.cpp:636] [Backward] Layer score_fr, bottom blob bn7 diff: 9.20469
    I0123 23:19:13.153254 15230 net.cpp:647] [Backward] Layer score_fr, param blob 0 diff: 0
    I0123 23:19:13.153291 15230 net.cpp:647] [Backward] Layer score_fr, param blob 1 diff: 20528.8
    I0123 23:19:13.153420 15230 net.cpp:636] [Backward] Layer drop7, bottom blob bn7 diff: 9.21666
    I0123 23:19:13.153554 15230 net.cpp:636] [Backward] Layer relu7, bottom blob bn7 diff: 0
    I0123 23:19:13.153856 15230 net.cpp:636] [Backward] Layer scale7, bottom blob bn7 diff: 0
    E0123 23:19:14.382714 15230 net.cpp:736] [Backward] All net params (data, diff): L1 norm = (19254.6, 102644); L2 norm = (391.485, 57379.6)
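
To make a log like this easier to scan, here is a small parsing sketch that lists the blobs whose reported value is exactly 0. It assumes, based on my reading of Caffe's `net.cpp`, that each `data:` or `diff:` number is the mean absolute value of the blob's activations/weights or gradients respectively. The regular expression and helper names are my own, written against the line format shown above.

```python
import re

# Matches lines such as:
# "... [Forward] Layer conv1_1, top blob conv1_1 data: 0"
DEBUG_LINE = re.compile(
    r"\[(Forward|Backward)\] Layer ([^,\s]+), "
    r"(top blob|bottom blob|param blob) (\S+) (data|diff): (\S+)"
)

def parse_debug_lines(lines):
    """Return (direction, layer, kind, blob, field, value) tuples."""
    records = []
    for line in lines:
        match = DEBUG_LINE.search(line)
        if match:
            direction, layer, kind, blob, field, value = match.groups()
            records.append((direction, layer, kind, blob, field, float(value)))
    return records

def zero_blobs(records):
    """Keep only the records whose reported value is exactly zero."""
    return [r for r in records if r[5] == 0.0]

sample = [
    "I0123 23:19:12.688591 15230 net.cpp:608] [Forward] Layer conv1_1, top blob conv1_1 data: 0",
    "I0123 23:19:13.128515 15230 net.cpp:608] [Forward] Layer score_fr, top blob score_fr data: 0.000975524",
    "I0123 23:19:13.153291 15230 net.cpp:647] [Backward] Layer score_fr, param blob 1 diff: 20528.8",
]
for record in zero_blobs(parse_debug_lines(sample)):
    print(record)
```

Under that assumption, a `data: 0` for both weight blobs of a convolution layer (as for `conv1_1` here) would mean all of its weights are zero, which may be worth checking against how the layer is initialized.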

I would really appreciate it if anyone who knows could share ideas, links, or resources here. Thanks again.