For context, I wrote this algorithm to get the number of unique substrings of any string. It builds the suffix tree for the string counting the nodes it contains and returns that as the answer. The problem I wanted to solve required a O(n) algorithm so this question is only about how this code behaves and not about how bad it is at what it does.

struct node{

char value = ' ';

vector<node*> children;

~node()

{

for (node* child: children)

{

delete child;

}

}

};

int numberOfUniqueSubstrings(string aString, node*& root)

{

root = new node();

int substrings = 0;

for (int i = 0; i < aString.size(); ++i)

{

string tmp = aString.substr(i, aString.size());

node* currentNode = root;

char indexToNext = 0;

for (int j = 0; j < currentNode->children.size(); ++j)

{

if (currentNode->children[j]->value == tmp[indexToNext])

{

currentNode = currentNode->children[j];

j = -1;

indexToNext++;

}

}

for (int j = indexToNext; j < tmp.size(); ++j)

{

node* theNewNode = new node;

theNewNode->value = tmp[j];

currentNode->children.push_back(theNewNode);

currentNode = theNewNode;

substrings++;

}

}

return substrings;

}

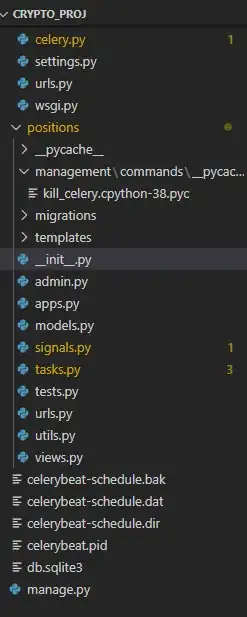

I decided to benchmark this algorithm for which I simply looped over a big string taking a bigger substring each iteration, calling numberOfUniqueSusbstrings measuring how long it took to end.

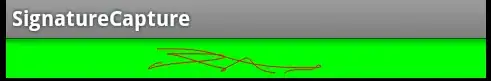

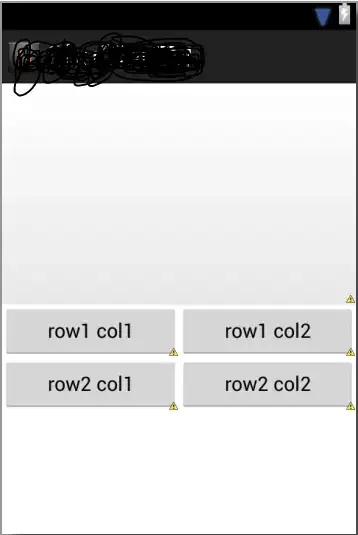

I plotted it in octave and this is what I got (x is string size and y is time in microseconds)

I first thought the problem lied in the input string but it's just an alphanumeric string I got from a book (any other text behaves just as strangely).

Also tried averaging many calls to the function with the same parameter and the result is pretty much the same.

This is compiling with g++ problem.cpp -std=c++14 -O3 but seems to do the same on -O2 and -O0.

Edit: After @interjay 's answer I've tried doing just that which leaves the function as:

int numberOfUniqueSubstrings(string aString, node*& root)

{

root = new node();

int substrings = 0;

for (int i = 0; i < aString.size(); ++i)

{

node* currentNode = root;

char indexToNext = i;

for (int j = 0; j < currentNode->children.size(); ++j)

{

if (currentNode->children[j]->value == aString[indexToNext])

{

currentNode = currentNode->children[j];

j = -1;

indexToNext++;

}

}

for (int j = indexToNext; j < aString.size(); ++j)

{

node* theNewNode = new node;

theNewNode->value = aString[j];

currentNode->children.push_back(theNewNode);

currentNode = theNewNode;

substrings++;

}

}

return substrings;

}

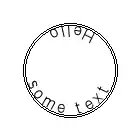

And it does indeed make it a bit faster. But not less strange for I plotted this:

Something is happening at x = 1000 and I have no clue what it could be.

Another plot for good measure:

I've now run gprof for a string of size 999:

Flat profile:

Each sample counts as 0.01 seconds.

% cumulative self self total

time seconds seconds calls us/call us/call name

100.15 0.02 0.02 974 20.56 20.56 node::~node()

0.00 0.02 0.00 498688 0.00 0.00 void std::vector<node*, std::allocator<node*> >::_M_emplace_back_aux<node* const&>(node* const&)

0.00 0.02 0.00 1 0.00 0.00 _GLOBAL__sub_I__Z7imprimePK4node

0.00 0.02 0.00 1 0.00 0.00 numberOfUniqueSubstrings(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, node*&)

^L

Call graph

granularity: each sample hit covers 2 byte(s) for 49.93% of 0.02 seconds

index % time self children called name

54285 node::~node() [1]

0.02 0.00 974/974 test(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >) [2]

[1] 100.0 0.02 0.00 974+54285 node::~node() [1]

54285 node::~node() [1]

-----------------------------------------------

<spontaneous>

[2] 100.0 0.00 0.02 test(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >) [2]

0.02 0.00 974/974 node::~node() [1]

0.00 0.00 1/1 numberOfUniqueSubstrings(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, node*&) [12]

-----------------------------------------------

0.00 0.00 498688/498688 numberOfUniqueSubstrings(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, node*&) [12]

[10] 0.0 0.00 0.00 498688 void std::vector<node*, std::allocator<node*> >::_M_emplace_back_aux<node* const&>(node* const&) [10]

-----------------------------------------------

0.00 0.00 1/1 __libc_csu_init [21]

[11] 0.0 0.00 0.00 1 _GLOBAL__sub_I__Z7imprimePK4node [11]

-----------------------------------------------

0.00 0.00 1/1 test(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >) [2]

[12] 0.0 0.00 0.00 1 numberOfUniqueSubstrings(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, node*&) [12]

0.00 0.00 498688/498688 void std::vector<node*, std::allocator<node*> >::_M_emplace_back_aux<node* const&>(node* const&) [10]

-----------------------------------------------

And for a string of size 1001:

Flat profile:

Each sample counts as 0.01 seconds.

% cumulative self self total

time seconds seconds calls us/call us/call name

100.15 0.02 0.02 974 20.56 20.56 node::~node()

0.00 0.02 0.00 498688 0.00 0.00 void std::vector<node*, std::allocator<node*> >::_M_emplace_back_aux<node* const&>(node* const&)

0.00 0.02 0.00 1 0.00 0.00 _GLOBAL__sub_I__Z7imprimePK4node

0.00 0.02 0.00 1 0.00 0.00 numberOfUniqueSubstrings(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, node*&)

Call graph

granularity: each sample hit covers 2 byte(s) for 49.93% of 0.02 seconds

index % time self children called name

54285 node::~node() [1]

0.02 0.00 974/974 test(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >) [2]

[1] 100.0 0.02 0.00 974+54285 node::~node() [1]

54285 node::~node() [1]

-----------------------------------------------

<spontaneous>

[2] 100.0 0.00 0.02 test(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >) [2]

0.02 0.00 974/974 node::~node() [1]

0.00 0.00 1/1 numberOfUniqueSubstrings(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, node*&) [12]

-----------------------------------------------

0.00 0.00 498688/498688 numberOfUniqueSubstrings(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, node*&) [12]

[10] 0.0 0.00 0.00 498688 void std::vector<node*, std::allocator<node*> >::_M_emplace_back_aux<node* const&>(node* const&) [10]

-----------------------------------------------

0.00 0.00 1/1 __libc_csu_init [21]

[11] 0.0 0.00 0.00 1 _GLOBAL__sub_I__Z7imprimePK4node [11]

-----------------------------------------------

0.00 0.00 1/1 test(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >) [2]

[12] 0.0 0.00 0.00 1 numberOfUniqueSubstrings(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, node*&) [12]

0.00 0.00 498688/498688 void std::vector<node*, std::allocator<node*> >::_M_emplace_back_aux<node* const&>(node* const&) [10]

-----------------------------------------------

Index by function name

[11] _GLOBAL__sub_I__Z7imprimePK4node [1] node::~node()

[12] numberOfUniqueSubstrings(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, node*&) [10] void std::vector<node*, std::allocator<node*> >::_M_emplace_back_aux<node* const&>(node* const&)

However it seems that running the profiler removes the effect and the times are pretty much the same in both cases.