I have the following data:

```r
attributes <- c("apple-water-orange", "apple-water", "apple-orange", "coffee",
                "coffee-croissant", "green-red-yellow", "green-red-blue",
                "green-red", "black-white", "black-white-purple")
df <- data.frame(attributes, stringsAsFactors = FALSE)
```

```
           attributes
1  apple-water-orange
2         apple-water
3        apple-orange
4              coffee
5    coffee-croissant
6    green-red-yellow
7      green-red-blue
8           green-red
9         black-white
10 black-white-purple
```

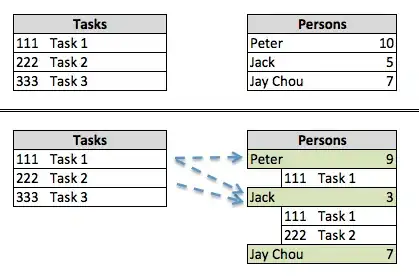

What I want is another column that assigns a category to each row based on how similar the observations are:

```r
category <- c(1, 1, 1, 2, 2, 3, 3, 3, 4, 4)
df$category <- category
```

```
           attributes category
1  apple-water-orange        1
2         apple-water        1
3        apple-orange        1
4              coffee        2
5    coffee-croissant        2
6    green-red-yellow        3
7      green-red-blue        3
8           green-red        3
9         black-white        4
10 black-white-purple        4
```

It is clustering in the broader sense, but I think most clustering methods work on numeric data only, and one-hot encoding has a lot of disadvantages (that's what I read on the internet).
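Hierarchical clustering does not strictly need numeric features; it only needs a pairwise dissimilarity between observations. A minimal sketch, assuming each attribute can be treated as an unordered set of words separated by `-`: the Jaccard distance between two such sets, computed directly on the strings without any one-hot encoding. The helper name `jaccard_dist` is hypothetical and not part of any package.

```r
# Hypothetical helper: Jaccard distance between two hyphen-separated strings,
# treating each string as an unordered set of words (assumption: order is irrelevant).
jaccard_dist <- function(s1, s2) {
  a <- strsplit(s1, "-", fixed = TRUE)[[1]]
  b <- strsplit(s2, "-", fixed = TRUE)[[1]]
  # 0 = identical word sets, 1 = no word in common
  1 - length(intersect(a, b)) / length(union(a, b))
}

jaccard_dist("apple-water-orange", "apple-water")  # 2 shared of 3 words -> 1/3
jaccard_dist("green-red-yellow", "green-red-blue") # 2 shared of 4 words -> 0.5
jaccard_dist("coffee", "black-white")              # nothing shared -> 1
```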

Does anyone have an idea how to do this? Maybe some word-matching approach?
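As a very simple word-matching baseline, a sketch assuming that the first word of each string is what defines its group (true for the sample data, but probably not in general): take everything before the first `-` and number the distinct first words in order of appearance.

```r
attributes <- c("apple-water-orange", "apple-water", "apple-orange", "coffee",
                "coffee-croissant", "green-red-yellow", "green-red-blue",
                "green-red", "black-white", "black-white-purple")

# Keep everything before the first "-" (the whole string if there is no "-")
first_word <- sub("-.*$", "", attributes)

# Number the groups in order of first appearance
category <- match(first_word, unique(first_word))
category
# [1] 1 1 1 2 2 3 3 3 4 4
```

This has no similarity parameter and breaks as soon as two related strings start with different words.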

It would also be great if I could adjust the degree of similarity (rough vs. fine-grained "clustering") with a parameter.
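One way to get an adjustable degree of similarity, sketched under the same set-of-words assumption: compute pairwise Jaccard distances between the word sets, build an average-linkage tree with base R's `hclust()`, and cut it at a height `h` with `cutree()`. The function name `cluster_attributes` and the default `h = 0.9` are hypothetical choices for illustration.

```r
attributes <- c("apple-water-orange", "apple-water", "apple-orange", "coffee",
                "coffee-croissant", "green-red-yellow", "green-red-blue",
                "green-red", "black-white", "black-white-purple")

# Hypothetical helper: group hyphen-separated strings by word overlap.
# h is the maximum average Jaccard distance (0..1) at which groups are merged:
# small h = strict, fine-grained groups; h close to 1 = rough groups.
cluster_attributes <- function(x, h = 0.9) {
  tokens <- strsplit(x, "-", fixed = TRUE)
  jaccard <- function(a, b) 1 - length(intersect(a, b)) / length(union(a, b))
  # n x n matrix of pairwise Jaccard distances
  d <- sapply(tokens, function(a) sapply(tokens, function(b) jaccard(a, b)))
  hc <- hclust(as.dist(d), method = "average")
  cutree(hc, h = h)
}

df <- data.frame(attributes, stringsAsFactors = FALSE)
df$category <- cluster_attributes(df$attributes, h = 0.9)
df
```

On the sample data, strings from different groups share no words (distance 1), so `h = 0.9` should reproduce the four groups above, while a stricter `h = 0.4` splits some of them further. Average linkage is only one option; if the number of categories is known in advance, `cutree(hc, k = ...)` can be used instead of a height.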

Thanks in advance for any ideas!