In addition to the prior answers:

System.Random should NEVER be used in simulations or numerical solvers for science and engineering, where there are material negative consequences of inaccurate simulation results or convergence failure. This is because Microsoftʼs implementation is deeply flawed in several respects, and they cannot (or will not) easily fix it due to compatibility issues. See this post.

So:

If there is an adversary who shouldnʼt know the generated sequence, then use RNGCryptoServiceProvider (or System.Security.Cryptography.RandomNumberGenerator, which supersedes it in newer .NET versions) or another carefully designed, implemented and validated cryptographically strong RNG, and ideally use hardware randomness where possible. Otherwise;

If it is an application such as a simulation that requires good statistical properties, then use a carefully designed and implemented non‑crypto PRNG such as the Mersenne Twister. (A crypto RNG would also be correct in these cases, but often too slow and unwieldy.) Otherwise;

ONLY if the use of the numbers is completely trivial, such as deciding which picture to show next in a randomized slideshow, then use System.Random.
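As a rough illustration of the first and last cases above, here is a minimal sketch. The class and method names (`RngChoice`, `NewSecretToken`, `NextSlideIndex`) are made up for this example. The middle case is left out because the base class library does not ship a Mersenne Twister, so a third-party implementation would be needed there.

```csharp
using System;
using System.Security.Cryptography;

// Hypothetical helper class, for illustration only.
public static class RngChoice
{
    // Case 1: an adversary must not be able to predict the values,
    // so the bytes come from the framework's cryptographic RNG.
    public static byte[] NewSecretToken(int byteCount)
    {
        var token = new byte[byteCount];
        using (var rng = RandomNumberGenerator.Create())
        {
            rng.GetBytes(token);
        }
        return token;
    }

    // Case 3: a completely trivial use, such as picking the next
    // picture in a randomized slideshow. System.Random is acceptable here.
    public static int NextSlideIndex(Random random, int slideCount)
    {
        return random.Next(slideCount); // 0 <= result < slideCount
    }
}
```

`RandomNumberGenerator.Create()` is available on both .NET Framework and newer .NET versions, which is why the sketch uses it rather than a specific provider class.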

I recently encountered this issue very tangibly when working on a Monte Carlo simulation intended to test the effects of different usage patterns for medical devices. The simulation produced results that went mildly in the opposite direction of what would have been reasonably expected.

Sometimes when you canʼt explain something, thereʼs a reason behind it, and that reason could be very serious!

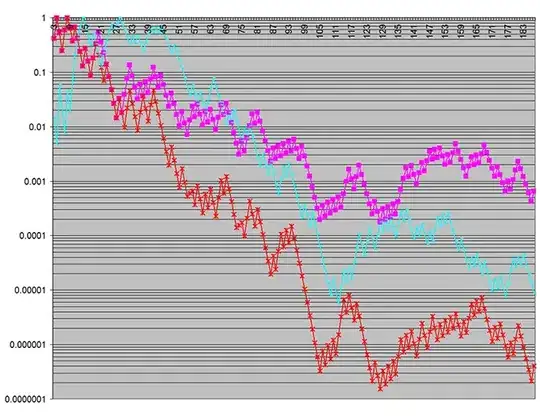

Hereʼs a plot of the p‑values that were obtained over an increasing number of simulation batches:

The red and magenta plots show the statistical significance of differences between the two usage models in two output metrics under study.

The cyan plot is a particularly shocking result, because it represents p‑values for a characteristic of the random input to the simulation. (This was being plotted just to confirm the input wasnʼt faulty.) The input was, of course, by design the same between the two usage models under study, so there should not have been any statistically significant difference between the input to the two models. Yet here I was seeing better than 99.97% confidence that there was such a difference!!

Initially I thought there was something wrong in my code, but everything checked out. (In particular I confirmed that threads were not sharing System.Random instances.) When repeated testing found this unexpected result to be highly consistent, I started suspecting System.Random.
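For reference, one common way to make sure threads do not share a System.Random instance is to give each thread its own. The following is a minimal sketch of that pattern, not the code used in the simulation; `PerThreadRandom` and its seeding scheme are assumptions made for this example.

```csharp
using System;
using System.Threading;

// Hypothetical helper, for illustration only.
public static class PerThreadRandom
{
    // Starting point for seeds; incremented atomically so that
    // no two threads are handed the same seed.
    private static int seedCounter = Environment.TickCount;

    // Each thread lazily creates its own Random on first access.
    private static readonly ThreadLocal<Random> local =
        new ThreadLocal<Random>(
            () => new Random(Interlocked.Increment(ref seedCounter)));

    // The Random instance belonging to the calling thread.
    public static Random Instance => local.Value;
}
```

A worker would then call `PerThreadRandom.Instance.Next(...)` instead of touching a shared field. This only rules out the sharing problem; it does nothing about the statistical quality of System.Random itself.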

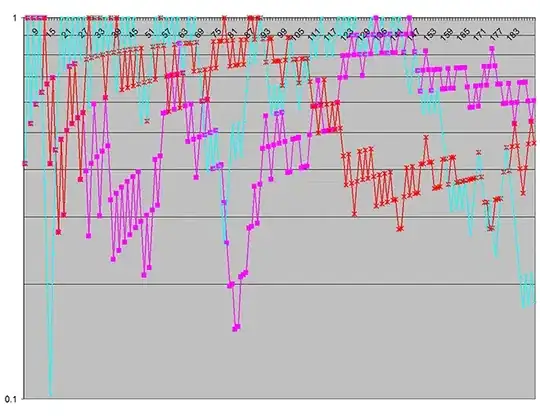

I replaced System.Random with a Mersenne Twister implementation — no other changes, — and immediately the output became drastically different, as shown here:

This chart shows no statistically significant difference between the two usage models for the parameters used in this particular test set, which was the expected result.

Note that in the first chart, the vertical log scale (on the p‑value) covers seven decades, whereas there is only a single decade in the second, which demonstrates just how pronounced the statistical significance of the spurious discrepancies was! (The vertical scale indicates the probability the discrepancies could have arisen by chance.)

I suspect what was happening was that System.Random has some correlations over some fairly short generator cycle, and different patterns of internal randomness sampling between the two models under test (which had substantially different numbers of calls to Random.Next) caused these to affect the two models in distinct ways.

It so happens that the simulation input draws from the same RNG streams as the models use for internal decisions, and this apparently caused these sampling discrepancies to impact the input. (This was actually a lucky thing, because otherwise I might not have realized that the unexpected result was a software fault and not some real property of the devices being simulated!)
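To make the stream-sharing point concrete, here is a small sketch of the alternative arrangement, in which the input and the internal decisions draw from separate generators. It is not the simulation described above: `StreamDemo`, `DrawInputs` and the toy "model" are invented for illustration, and System.Random appears only to keep the example self-contained (a better PRNG would take its place in real work).

```csharp
using System;

// Hypothetical demo class, for illustration only.
public static class StreamDemo
{
    // Runs a toy model for `steps` steps. Each step reads one input
    // value and then makes `decisionsPerStep` internal random draws.
    // Returns the input values the model saw.
    public static int[] DrawInputs(
        int inputSeed, int decisionSeed, int steps, int decisionsPerStep)
    {
        var input = new Random(inputSeed);        // input stream
        var decisions = new Random(decisionSeed); // internal-decision stream
        var inputs = new int[steps];

        for (int i = 0; i < steps; i++)
        {
            inputs[i] = input.Next(1000);

            // Internal draws advance only the decision stream,
            // so they cannot shift what the input stream produces.
            for (int d = 0; d < decisionsPerStep; d++)
            {
                decisions.Next();
            }
        }
        return inputs;
    }
}
```

With this layout, `DrawInputs(42, 1, 5, 3)` and `DrawInputs(42, 2, 5, 10)` return the same input values even though the two toy models make different numbers of internal draws. The trade-off is the one noted above: with separate streams, a faulty generator would no longer show up as a discrepancy in the input, so the symptom would have been harder to spot.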