My sample code for reading a text file is:

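import org.apache.hadoop.io.{LongWritable, Text}
import org.apache.hadoop.mapred.{FileSplit, TextInputFormat}
import org.apache.spark.rdd.HadoopRDD

val text = sc.hadoopFile(path, classOf[TextInputFormat], classOf[LongWritable], classOf[Text], sc.defaultMinPartitions)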

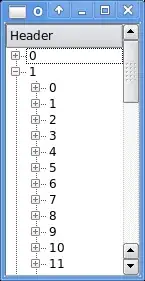

val rddwithPath = text.asInstanceOf[HadoopRDD[LongWritable, Text]].mapPartitionsWithInputSplit { (inputSplit, iterator) =>

val file = inputSplit.asInstanceOf[FileSplit]

iterator.map { tpl => (file.getPath.toString, tpl._2.toString) }

}.reduceByKey((a,b) => a)

How can I read PDF and XML files in the same way?