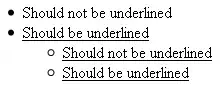

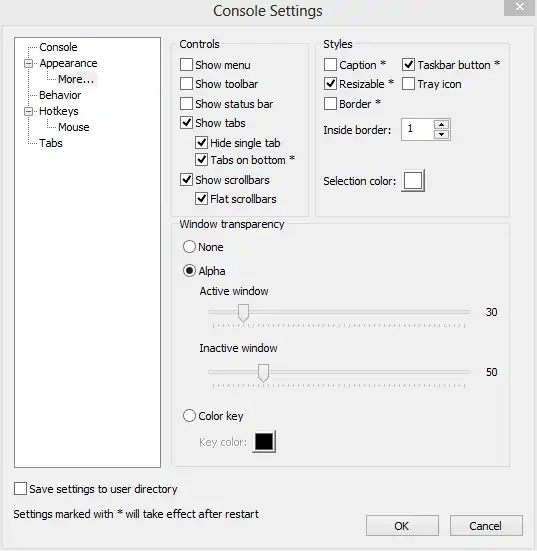

I am trying to find the corners of the box in an image. Below are example images and their threshold results; to the right of each arrow is the result I need. You may have seen these images before on Slack, since I use them for my example questions there.

The following code gets me as far as the middle image.

    import cv2
    import numpy as np

    img_file = 'C:/Users/box.jpg'
    img = cv2.imread(img_file, cv2.IMREAD_COLOR)
    img = cv2.blur(img, (5, 5))  # smooth noise before thresholding

    # Split into hue, saturation and value channels
    hsv = cv2.cvtColor(img, cv2.COLOR_BGR2HSV)
    h, s, v = cv2.split(hsv)

    # Adaptive threshold on saturation (thresh0) and value (thresh1, thresh2)
    thresh0 = cv2.adaptiveThreshold(s, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY_INV, 11, 2)
    thresh1 = cv2.adaptiveThreshold(v, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY_INV, 11, 2)
    # Note: thresh2 uses the same channel and parameters as thresh1
    thresh2 = cv2.adaptiveThreshold(v, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY_INV, 11, 2)

    # Combine the saturation and value masks
    thresh = cv2.bitwise_or(thresh0, thresh1)

    # Show each mask in turn; press any key to advance
    cv2.imshow('Image-thresh0', thresh0)
    cv2.waitKey(0)
    cv2.imshow('Image-thresh1', thresh1)
    cv2.waitKey(0)
    cv2.imshow('Image-thresh2', thresh2)
    cv2.waitKey(0)
    cv2.destroyAllWindows()

Is there a method in OpenCV that can do this for me? I tried dilation with cv2.dilate() and erosion with cv2.erode(), but they don't work in my cases. If not, what alternative approaches are there?

Thanks

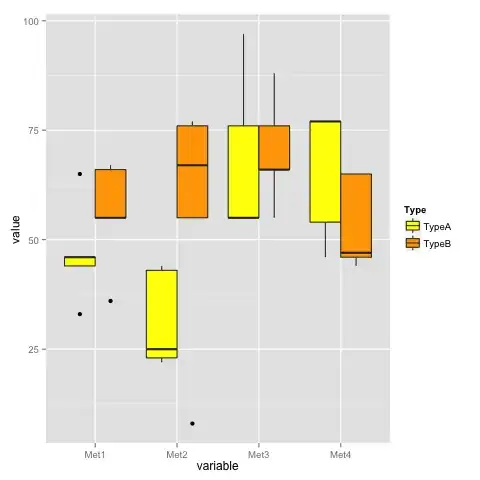

Canny version of the image: low threshold on the left, high threshold on the right.