If you are working with Keras library and want to use tensorboard to print your graphs of accuracy and other variables, Then below are the steps to follow.

step 1: Initialize the keras callback library to import tensorboard by using below command

from keras.callbacks import TensorBoard

step 2: Include the below command in your program just before "model.fit()" command.

tensor_board = TensorBoard(log_dir='./Graph', histogram_freq=0, write_graph=True, write_images=True)

Note: Use "./graph". It will generate the graph folder in your current working directory, avoid using "/graph".

step 3: Include Tensorboard callback in "model.fit()".The sample is given below.

model.fit(X_train,y_train, batch_size=batch_size, epochs=nb_epoch, verbose=1, validation_split=0.2,callbacks=[tensor_board])

step 4 : Run your code and check whether your graph folder is there in your working directory. if the above codes work correctly you will have "Graph"

folder in your working directory.

step 5 : Open Terminal in your working directory and type the command below.

tensorboard --logdir ./Graph

step 6: Now open your web browser and enter the address below.

http://localhost:6006

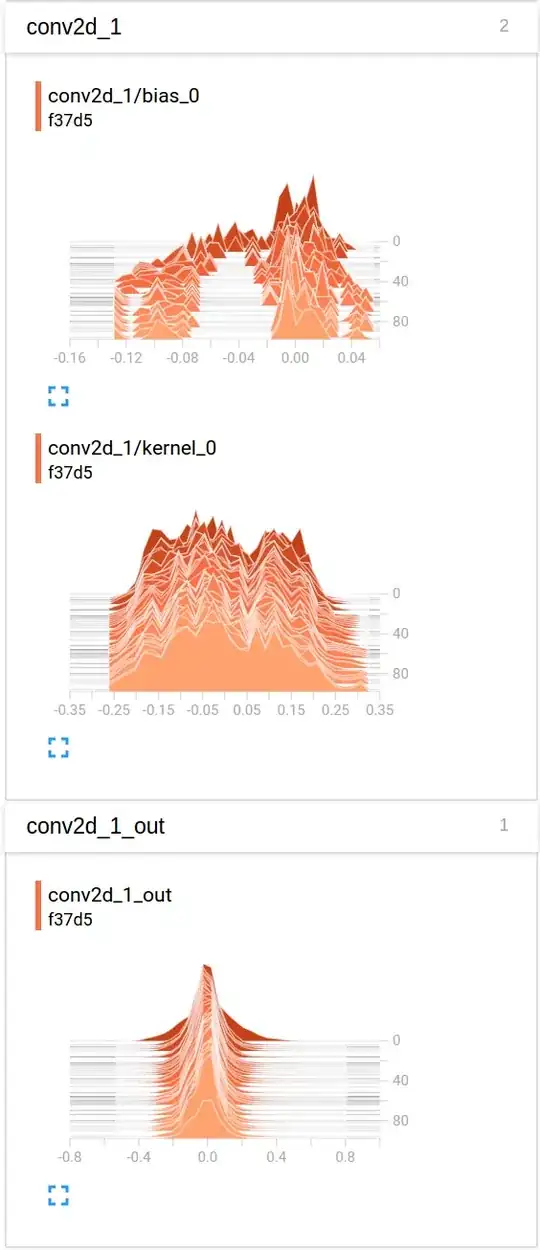

After entering, the Tensorbaord page will open where you can see your graphs of different variables.