My idea would be to look for features that do not occur in normal text, such as black, vertical elements spanning multiple lines. My tool of choice is ImageMagick, which is installed on most Linux distros and is available for macOS and Windows. I would just run it in the Terminal at the command prompt.

So, I would use this command. Note that in the result images below I have placed the original page on the left and the processed page on the right, and put a red border around them, just for illustration; the command itself only produces the processed page:

magick page-28.png -alpha off +dither -colors 2 -colorspace gray -normalize -statistic median 1x200 result.png

And I get this:

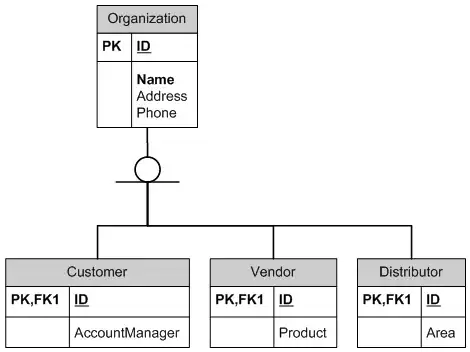

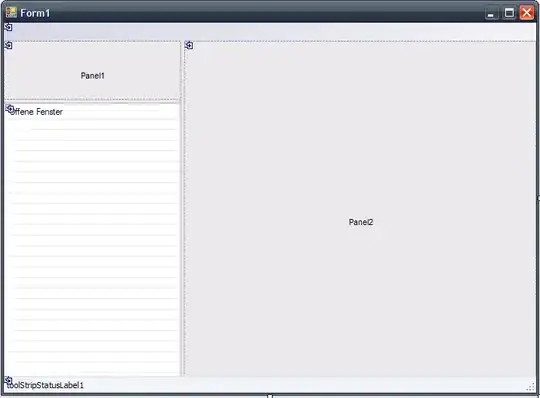

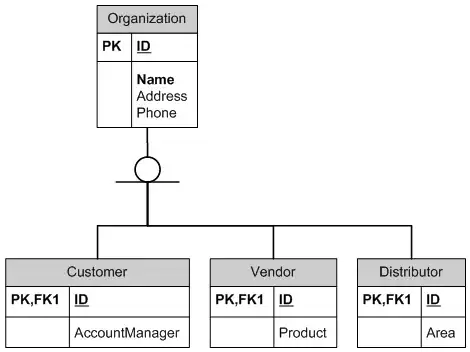

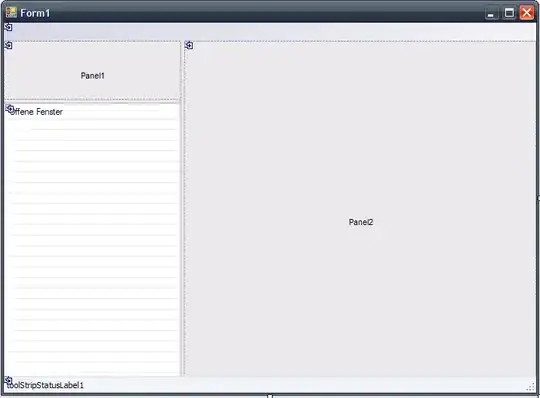

In order, the four images above are page-25.png, page-26.png, page-27.png and page-28.png.

Explanation of command above...

In the above command, rather than thresholding, I am doing a colour reduction to 2 colours, followed by a conversion to greyscale and then normalisation. That should choose black and the background colour as the two colours, and they become black and white once converted to greyscale and normalised.

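I am then applying a median filter with a structuring element that is 1 pixel wide and 200 pixels tall, which is taller than a few lines of text. A pixel only stays black if roughly more than half of the 200 pixels above and below it are black, so ordinary text is wiped out and only tall features, i.e. vertical lines, remain.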
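To see why a tall median removes text but keeps rules, here is a toy one-dimensional sketch. It is not what ImageMagick runs internally: it treats a single pixel column as a string of # (black) and . (white) and applies a majority vote, which is what a median amounts to on two-valued data. The name column_median, the window of 5 and the way pixels off the page are simply ignored are all assumptions made for illustration.

```shell
#!/bin/sh
# Toy 1-D vertical median on one pixel column.
# $1 = column as a string of '#' (black) and '.' (white)
# $2 = window height (use an odd number so the window is centred)
column_median() {
    awk -v col="$1" -v win="$2" 'BEGIN {
        n = length(col); half = int(win / 2); out = ""
        for (i = 1; i <= n; i++) {
            black = 0; total = 0
            for (j = i - half; j <= i + half; j++) {
                if (j < 1 || j > n) continue        # ignore pixels off the page
                total++
                black += (substr(col, j, 1) == "#")
            }
            # on two-valued data the median is black only with a black majority
            out = out ((2 * black > total) ? "#" : ".")
        }
        print out
    }'
}

column_median '...##.....' 5    # a short stroke, like part of a letter
column_median '.########.' 5    # a long run, like a vertical rule
```

The first call prints only dots because the two black pixels never form a majority in any window, while the second prints only hashes. The real command does the same thing in every column with a window of 200.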

Explanation over

Carrying on...

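So, if I invert the image so that black becomes white and white becomes black, the vertical features are the only white pixels. The mean is then the fraction of pixels that are white, so multiplying it by the total number of pixels in the image tells me how many pixels are part of vertical features (convert is the ImageMagick 6 name of the command; with version 7 use magick instead):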

convert page-28.png -alpha off +dither -colors 2 -colorspace gray -normalize -statistic median 1x200 -negate -format "%[fx:mean*w*h]" info:

90224

convert page-27.png -alpha off +dither -colors 2 -colorspace gray -normalize -statistic median 1x200 -negate -format "%[fx:mean*w*h]" info:

0

So page 28 is not pure text and page 27 is.
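If you want to run that check over a whole set of pages, it could be wrapped in a loop along these lines. This is only a sketch: the function names are mine, the page-*.png naming is assumed, and the threshold of 1000 pixels is a made-up tolerance for stray specks that you would need to tune on your own books.

```shell
#!/bin/sh
# Count the pixels that survive the 1x200 vertical median (same command as above).
vertical_pixels() {
    convert "$1" -alpha off +dither -colors 2 -colorspace gray -normalize \
        -statistic median 1x200 -negate -format "%[fx:mean*w*h]" info:
}

# THRESHOLD is an assumed tolerance, not a value from the measurements above.
THRESHOLD=${THRESHOLD:-1000}

# Print a label for one page based on its vertical-feature pixel count.
classify_page() {
    n=$(vertical_pixels "$1")
    # compare in awk because fx may print a non-integer value
    if awk -v n="$n" -v t="$THRESHOLD" 'BEGIN { exit !(n > t) }'; then
        echo "$1: non-text ($n)"
    else
        echo "$1: text ($n)"
    fi
}

# Classify every extracted page in the current directory.
for f in page-*.png; do
    [ -e "$f" ] || continue    # the glob matched nothing
    classify_page "$f"
done
```

A threshold above zero is just a precaution; with the two pages measured above, any value between 0 and 90224 gives the same answer.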

Here are some tips...

Tip

You can see how many pages there are in a PDF like this (it prints one line per page, so you can count the lines, for example by piping into wc -l), though there are probably faster methods:

convert -density 18 book.pdf info:

Tip

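You can extract a page of a PDF like this. Note that the index in square brackets counts from zero, so [25] is the 26th page, and that the quotes stop the shell from treating the brackets as a glob pattern:

convert -density 288 "book.pdf[25]" page-25.png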
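To pull out a run of pages rather than one, the same command can go in a small loop. A sketch only: extract_pages is a name I made up, and the indices are the zero-based ones ImageMagick uses, so 25 means the 26th page.

```shell
#!/bin/sh
# Extract pages FIRST..LAST (zero-based indices) of a PDF to page-N.png.
# $1 = PDF file, $2 = first index, $3 = last index
extract_pages() {
    pdf=$1
    i=$2
    while [ "$i" -le "$3" ]; do
        # quote the argument so the shell does not glob the brackets
        convert -density 288 "${pdf}[$i]" "page-$i.png"
        i=$((i + 1))
    done
}

# Usage:
#   extract_pages book.pdf 25 28
```

Rendering at 288 dpi is slow on large books, so it may be worth extracting only the range you actually need.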

Tip

If you are doing multiple books, you will probably want to normalise the images so that they are all, say, 1000 pixels tall. Then the size of the structuring element (for calculating the median) should be fairly consistent from book to book.
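One way that normalisation might look is to add a resize to a fixed height before the colour reduction. This is a sketch under assumptions: the function name is hypothetical, and the element height is left as a parameter because the 200 pixels used earlier was chosen for pages rendered at 288 dpi and will almost certainly be too tall for a page only 1000 pixels high.

```shell
#!/bin/sh
# Same measurement as before, but the page is first scaled to 1000 pixels tall.
# $1 = image file
# $2 = structuring element height in pixels (to be tuned, no default assumed)
vertical_pixels_normalised() {
    convert "$1" -alpha off -resize x1000 +dither -colors 2 -colorspace gray \
        -normalize -statistic median "1x$2" -negate -format "%[fx:mean*w*h]" info:
}

# Usage (60 is only a placeholder height):
#   vertical_pixels_normalised page-28.png 60
```

The geometry x1000 fixes the height and lets the width follow the aspect ratio, so the pixel counts from different books become comparable.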