Thanks for taking the time to read this. I am modifying my OpenGL application to use GLSL shaders. Its purpose is to visualize 3D atomistic models, including tetrahedra (and other polyhedra) drawn with GL_TRIANGLES.

Note: in the examples below, colors and alpha channels are identical.

Before I had:

    void triangle (GLfloat * va, GLfloat * vb, GLfloat * vc)
    {
      glVertex3fv (va);
      glVertex3fv (vb);
      glVertex3fv (vc);
    }

    void tetra (GLfloat ** xyz)
    {
      // xyz contains the coordinates of the triangles,
      // i.e. the 4 vertices of the tetrahedron.
      // 'get_normal', used below, computes the normal of each face.
      glBegin (GL_TRIANGLES);
      glNormal3fv (get_normal(xyz[0], xyz[1], xyz[2]));
      triangle (xyz[0], xyz[1], xyz[2]);
      glNormal3fv (get_normal(xyz[1], xyz[2], xyz[3]));
      triangle (xyz[1], xyz[2], xyz[3]);
      glNormal3fv (get_normal(xyz[2], xyz[3], xyz[0]));
      triangle (xyz[2], xyz[3], xyz[0]);
      glNormal3fv (get_normal(xyz[0], xyz[3], xyz[1]));
      triangle (xyz[0], xyz[3], xyz[1]);
      glEnd ();
    }

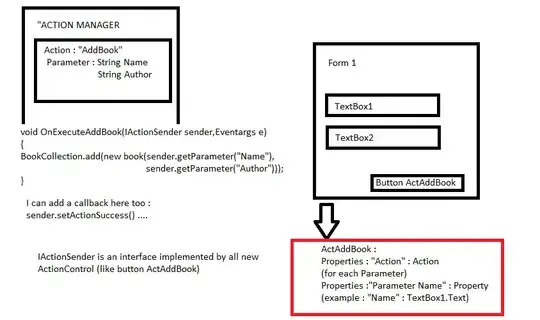

That displayed (the proper result):

Then I modified the code to use the following shaders:

    #define GLSL(src) "#version 130\n" #src

    const GLchar * vertex = GLSL(
      uniform mat4 viewMatrix;
      uniform mat4 projMatrix;
      in vec3 position;
      in float size;
      in vec4 color;
      out vec4 vert_color;
      void main()
      {
        vert_color = color;
        gl_Position = projMatrix * viewMatrix * vec4(position, 1.0);
      }
    );

    const GLchar * colors = GLSL(
      in vec4 vert_color;
      out vec4 vertex_color;
      void main()
      {
        vertex_color = vert_color;
      }
    );

And now I get:

So obviously something is wrong with the colors/lighting, and I wish I could understand how to correct it. I think that I have to use geometry shaders, but I do not know where to find the information on how to do that or what exactly to do. Thanks in advance for your help.