I am facing a mind-boggling (to me) issue while reading ORC files. By default, Hive ORC files are encoded in UTF-8, or at least they are supposed to be. I use `copyToLocal` to copy the ORC files to my machine and then read them in Java.
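As background: the ORC format stores string data as UTF-8 bytes, so garbled text on the Java side often means those bytes were decoded with some other charset along the way. A minimal JDK-only sketch of that symptom (the class and method names are illustrative only):

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

class MojibakeDemo {

    // Decode raw bytes with an explicitly chosen charset.
    static String decode(byte[] bytes, Charset charset) {
        return new String(bytes, charset);
    }

    public static void main(String[] args) {
        // "café" encoded as UTF-8, the way ORC stores string data
        byte[] utf8Bytes = "caf\u00e9".getBytes(StandardCharsets.UTF_8);

        // Right charset: the result equals the original "café"
        String right = decode(utf8Bytes, StandardCharsets.UTF_8);
        // Wrong charset: each byte of the two-byte "é" becomes its own
        // character, giving "cafÃ©" (U+00C3 U+00A9 instead of U+00E9)
        String wrong = decode(utf8Bytes, StandardCharsets.ISO_8859_1);

        System.out.println(right.length() + " chars vs " + wrong.length() + " chars");
    }
}
```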

I can read the file successfully, but the output contains some unwanted characters:

When I query the table in Hive, there are no unwanted characters:

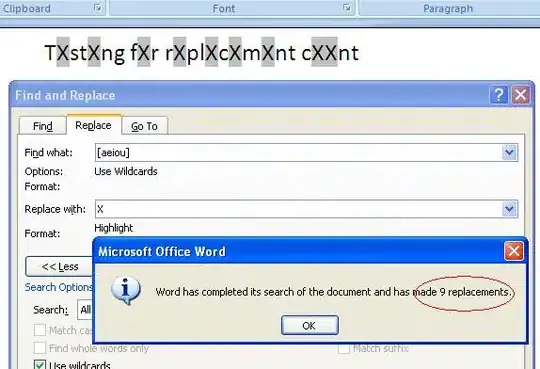
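To pin down what the unwanted characters actually are, it can help to print their code points instead of the characters themselves, since the console's own encoding can hide or alter them. A small sketch, assuming the suspects are control characters such as NUL or U+FFFD, the replacement character a decoder inserts for bytes it cannot decode; `SuspiciousChars.find` is a hypothetical helper:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

class SuspiciousChars {

    // Scans the string's UTF-16 code units and reports control characters
    // (other than tab, newline and carriage return) and U+FFFD.
    static List<String> find(String s) {
        List<String> hits = new ArrayList<>();
        for (int i = 0; i < s.length(); i++) {
            char c = s.charAt(i);
            boolean control = Character.isISOControl(c)
                    && c != '\t' && c != '\n' && c != '\r';
            if (control || c == '\uFFFD') {
                hits.add(String.format(Locale.ROOT, "index %d: U+%04X", i, (int) c));
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        // One NUL and one replacement character hidden in otherwise plain text
        String sample = "ab\u0000\tc\uFFFD";
        for (String hit : find(sample)) {
            System.out.println(hit);
        }
    }
}
```

Running it on a row string from the reader (instead of `sample`) would show which code points are involved.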

Can anyone please help? I have tried decoding and re-encoding between various charsets, e.g. ISO-8859-1 to UTF-8, UTF-8 to ISO-8859-1, and ISO-8859-1 to UTF-16.
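Re-encoding between charsets can only undo a wrong decode if that decode was lossless. The sketch below (the helper name `reinterpret` is hypothetical) reverses an ISO-8859-1 misread of UTF-8 bytes, then shows the same trick failing once a decoder has already substituted U+FFFD, because ISO-8859-1 cannot represent that character and `getBytes` replaces it with `?`:

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

class ReencodeDemo {

    // Turn the String back into bytes with the charset that was (wrongly)
    // used to decode it, then decode those bytes with the actual charset.
    static String reinterpret(String s, Charset wronglyUsed, Charset actual) {
        return new String(s.getBytes(wronglyUsed), actual);
    }

    public static void main(String[] args) {
        // UTF-8 bytes of "café" that were decoded as ISO-8859-1 ("cafÃ©"):
        // the misread was lossless, so reversing it restores "café".
        String repaired = reinterpret("caf\u00c3\u00a9",
                StandardCharsets.ISO_8859_1, StandardCharsets.UTF_8);
        System.out.println(repaired.equals("caf\u00e9"));

        // Once a decoder has produced U+FFFD, the original bytes are gone;
        // getBytes maps the unmappable U+FFFD to '?', giving "caf??".
        String lost = reinterpret("caf\uFFFD\uFFFD",
                StandardCharsets.ISO_8859_1, StandardCharsets.UTF_8);
        System.out.println(lost);
    }
}
```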

Edit:

Hi, I am using the Java code below to read the ORC file:

```java
import java.nio.ByteBuffer;
import java.nio.CharBuffer;
import java.nio.charset.Charset;
import java.util.List;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.hive.ql.io.orc.OrcFile;
import org.apache.hadoop.hive.ql.io.orc.Reader;
import org.apache.hadoop.hive.ql.io.orc.RecordReader;
import org.apache.hadoop.hive.serde2.objectinspector.StructField;
import org.apache.hadoop.hive.serde2.objectinspector.StructObjectInspector;

public class OrcFormat {

    public static void main(String[] argv) {
        // Show the JVM's default encoding
        System.out.println(System.getProperty("file.encoding"));
        System.out.println(Charset.defaultCharset().name());

        try {
            Configuration conf = new Configuration();
            // Utils is my own file helper class (not shown)
            Utils.createFile("C:/path/target", "opfile.txt", "UTF-8");

            Reader reader = OrcFile.createReader(new Path("C:/input/000000_0"), OrcFile.readerOptions(conf));
            StructObjectInspector inspector = (StructObjectInspector) reader.getObjectInspector();

            // Print the file's user metadata
            List<String> keys = reader.getMetadataKeys();
            for (int i = 0; i < keys.size(); i++) {
                System.out.println("Key:" + keys.get(i) + ",Value:" + reader.getMetadataValue(keys.get(i)));
            }

            RecordReader records = reader.rows();
            Object row = null;

            // Print the column types as a header line
            List<? extends StructField> fields = inspector.getAllStructFieldRefs();
            for (int i = 0; i < fields.size(); ++i) {
                System.out.print(fields.get(i).getFieldObjectInspector().getTypeName() + '\t');
            }
            System.out.println();

            int rCnt = 0;
            while (records.hasNext()) {
                row = records.next(row);
                List<Object> value_lst = inspector.getStructFieldsDataAsList(row);

                // Join the fields of one row with tabs (null fields become empty)
                String out = "";
                for (Object field : value_lst) {
                    if (field != null)
                        out += field;
                    out += "\t";
                }
                rCnt++;
                out = out + "\n";

                byte[] outA = convertEncoding(out, "UTF-8", "UTF-8");
                Utils.writeToFile(outA, "C:/path/target", "opfile.txt", "UTF-8");

                // Echo the first rows to the console, then stop
                if (rCnt < 10) {
                    System.out.println(out);
                    System.out.println(new String(outA));
                } else {
                    break;
                }
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }

    public static byte[] convertEncoding(String s, String inCharset, String outCharset) {
        Charset inC = Charset.forName(inCharset);
        Charset outC = Charset.forName(outCharset);
        // getBytes() without an argument encodes with the platform default charset
        ByteBuffer inpBuffer = ByteBuffer.wrap(s.getBytes());
        CharBuffer data = inC.decode(inpBuffer);
        ByteBuffer opBuffer = outC.encode(data);
        // array() returns the whole backing array, which can be longer than opBuffer.limit()
        byte[] opData = opBuffer.array();
        return opData;
    }
}
```
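Two details of `convertEncoding` are worth a closer look, sketched below with the JDK alone. `s.getBytes()` with no argument encodes with the platform default charset (often not UTF-8 on Windows before Java 18), and those bytes are then decoded as `inCharset`. Also, `opBuffer.array()` returns the buffer's entire backing array, which can be longer than the encoded data, so the zero-filled extra bytes can surface as NUL characters. A minimal alternative, assuming the goal is simply to turn each row `String` into UTF-8 bytes (`OutputEncoding.toBytes` is a hypothetical name):

```java
import java.nio.ByteBuffer;
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

class OutputEncoding {

    // A String is already decoded, so only the output charset matters.
    static byte[] toBytes(String s, Charset outCharset) {
        ByteBuffer buf = outCharset.encode(s);
        // Copy only the bytes between position and limit; buf.array()
        // may include unused capacity at the end.
        byte[] bytes = new byte[buf.remaining()];
        buf.get(bytes);
        return bytes;
    }

    public static void main(String[] args) {
        String line = "id\tcaf\u00e9\tcount\n";
        ByteBuffer encoded = StandardCharsets.UTF_8.encode(line);
        // Compare the backing array size with the number of valid bytes
        System.out.println("array().length = " + encoded.array().length
                + ", remaining() = " + encoded.remaining());

        byte[] exact = toBytes(line, StandardCharsets.UTF_8);
        // Decode with the same explicit charset instead of new String(bytes)
        System.out.println(new String(exact, StandardCharsets.UTF_8).equals(line));
    }
}
```

The same idea applies when printing or reading the bytes back: naming the charset explicitly, as in `new String(bytes, StandardCharsets.UTF_8)`, avoids depending on the platform default.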