In my application there are about 1 billion PNG images (each 1024*1024 and about 1MB). I need to combine all of them into one huge image and then produce a single 1024*1024 unitary thumbnail of it. Or perhaps we don't need to actually build the huge image, and some clever algorithm could produce the unitary thumbnail directly in memory? The process also needs to be as fast as possible: ideally seconds, or at most a few minutes. Does anyone have an idea?

-

US billion (`10**9`) or EU billion (`10**12`)? – alk Feb 14 '17 at 07:48

-

I mean a billion, i.e. a huge quantity. – Suge Feb 14 '17 at 07:50

-

What do you mean by *unitary thumbnail* and for what purpose? – user694733 Feb 14 '17 at 07:52

-

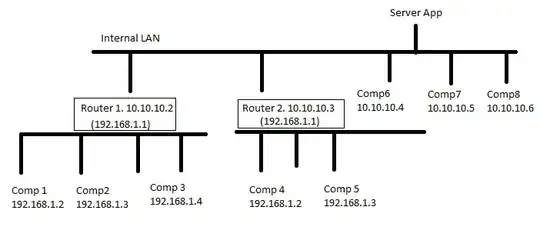

As in the image I attached to the post, the PNG images should be tiled into one huge image, and then I want a thumbnail of that huge image. – Suge Feb 14 '17 at 07:54

-

That is obvious, but you have not defined what you mean by thumbnail; there is a difference between a 16x16 image and a 99999999x999999999 image. You also have not defined what you mean by fast (milliseconds vs seconds vs minutes vs hours). The boundaries of your question are unclear, and they can have a huge impact on how to do things. – user694733 Feb 14 '17 at 08:01

-

@user694733, thank you for the reminder. I have added the missing information: the thumbnail should be 1024*1024, and the process should take less than a few minutes. – Suge Feb 14 '17 at 08:05

-

Note that the size of your thumbnail, 1024*1024, is roughly a *million* pixels. Using a *billion* images to produce this means that each original image will contribute about 1/1000th of a pixel in the thumbnail... The thumbnail is unlikely to visualise any meaningful information. Are you sure this is what you want? – Harald K Feb 14 '17 at 12:32

-

@haraldK, that's a fair point. If I wanted a thumbnail of size 1024000 * 1024000, is there a good way to produce it? – Suge Feb 14 '17 at 12:36

3 Answers

The idea of loading a billion images into a single montage process is ridiculous. Your question is unclear, but your approach should be to determine how many pixels each original image will amount to in your final image, then extract the necessary number of pixels from each image in parallel. Then assemble those pixels into a final image.

So, if each image will be represented by one pixel in your final image, you need to get the mean of each image which you can do like this:

convert image1.png image2.png ... -format "%[fx:mean.r],%[fx:mean.g],%[fx:mean.b]:%f\n" info:

Sample Output

0.423529,0.996078,0:image1.png

0.0262457,0,0:image2.png

You can then do that very quickly in parallel with GNU Parallel, using something like:

find . -name \*.png -print0 | parallel -0 convert {} -format "%[fx:mean.r],%[fx:mean.g],%[fx:mean.b]:%f\n" info:

Then you can make a final image and put the individual pixels in.
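As a minimal sketch of that last step, assuming the `r,g,b:filename` lines printed by the `convert ... info:` command are collected into one stream, the Python below places one pixel per image into a binary PPM. It assumes (hypothetically) that sorting the filenames gives the row-major order of the tiles in the huge image and that you choose the grid `width` yourself; the function names are illustrative only.

```python
import math


def parse_means(lines):
    """Parse 'r,g,b:filename' lines (ImageMagick fx:mean values in 0..1)."""
    entries = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        # The colour part never contains ':', so split on the first one only.
        rgb, name = line.split(":", 1)
        r, g, b = (float(v) for v in rgb.split(","))
        entries.append((name, (r, g, b)))
    return entries


def assemble_ppm(entries, width):
    """Build a binary PPM (P6) with one pixel per image, row-major.

    Assumption: sorted filenames correspond to the tile order of the montage.
    """
    entries = sorted(entries)
    height = math.ceil(len(entries) / width)
    pixels = bytearray(width * height * 3)  # cells without an image stay black
    for i, (_, rgb) in enumerate(entries):
        for c, v in enumerate(rgb):
            # Clamp to 0..1, then scale to an 8-bit channel value.
            pixels[i * 3 + c] = round(max(0.0, min(1.0, v)) * 255)
    header = f"P6 {width} {height} 255\n".encode("ascii")
    return header + bytes(pixels)
```

For example, you could redirect the `parallel` output to a file, read it with `open("means.txt")`, and write `assemble_ppm(parse_means(f), width)` to `thumb.ppm`; ImageMagick can then convert the PPM to PNG. PPM is used here only because it needs no third-party library.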

Scanning even 1,000,000 PNG files is likely to take many hours...

You don't say how big your images are, but if they are of the order of 1MB each and you have 1,000,000,000 of them, you need to do a petabyte of I/O just to read them, so even with a 500MB/s ultra-fast SSD you will be there for 23 days.
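Spelling out that estimate, taking 1 MB as $10^6$ bytes and assuming a sustained 500 MB/s read rate:

$$
10^{9}\ \text{images} \times 10^{6}\ \tfrac{\text{B}}{\text{image}} = 10^{15}\ \text{B} = 1\ \text{PB},
\qquad
\frac{10^{15}\ \text{B}}{5 \times 10^{8}\ \text{B/s}} = 2 \times 10^{6}\ \text{s} \approx 23.1\ \text{days}
$$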


-

If the tile images are uploaded from different clients, is it a good idea to compute the representative pixel on the client before uploading, and then composite the pixels into an image on the server? Would that be very fast? – Suge Feb 14 '17 at 10:50

-

The answer depends on your environment which, unfortunately, I cannot understand with the current description. If there are 1,000,000,000 clients each sending you an image, it would make sense for each one to send you the minimum necessary. If there are only 1024 clients each providing 1,000,000 images, it would make sense for each client to work out a whole block for you, but you can't do that if each client only sends one picture. In general, the more machines you can get working on the individual parts, the better. – Mark Setchell Feb 14 '17 at 10:54

-

May I respectfully suggest you edit your question and improve it so folk don't have to guess and waste their time covering cases that may not be relevant or working out answers based on false assumptions arising from a poor description... – Mark Setchell Feb 14 '17 at 10:56

-

ImageMagick can do that:

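montage *.png -geometry +0+0 tiled.png

(`-tile` expects a grid geometry, so `-tile *.png` would swallow the first filename as that argument. `-geometry +0+0` keeps each tile at its original size with no spacing, and `-tile COLSxROWS` can be added to fix the grid layout.)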

If you don't want to depend on an external tool for whatever reason, you can still use ImageMagick's source code.


-

In my testing it's very, very slow for a large number of images. Any suggestions? – Suge Feb 14 '17 at 09:35

-

So on a cluster of machines, montage (with -resize) would allow images to be grouped together. To achieve your goals, you would first create a set of nxn jobs and run those, then repeat on the generated montages until you are left with a single montage. – mksteve Mar 07 '17 at 08:17
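The repeated n×n grouping can be sketched in Python to show the reduction tree. This is a minimal single-process model under stated assumptions: tiles are square grayscale 2D lists, the tile grid's side is a power of `n`, and a simple box filter (`shrink`) stands in for `montage ... -resize`, whose default filter differs. In a real cluster each `reduce_grid` call would be split into independent jobs; all function names here are hypothetical.

```python
def montage(tiles, n):
    """Stitch an n x n block of T x T tiles (row-major list) into one nT x nT image."""
    t = len(tiles[0])
    out = []
    for br in range(n):
        for y in range(t):
            row = []
            for bc in range(n):
                row.extend(tiles[br * n + bc][y])
            out.append(row)
    return out


def shrink(img, n):
    """Box-filter downscale by integer factor n (a stand-in for -resize)."""
    size = len(img) // n
    return [
        [
            sum(img[y * n + dy][x * n + dx] for dy in range(n) for dx in range(n)) / (n * n)
            for x in range(size)
        ]
        for y in range(size)
    ]


def reduce_grid(grid, n):
    """One pass: every n x n group of tiles becomes a single tile of the same size."""
    g = len(grid)
    return [
        [
            shrink(montage([grid[r * n + i][c * n + j] for i in range(n) for j in range(n)], n), n)
            for c in range(g // n)
        ]
        for r in range(g // n)
    ]


def hierarchical_thumbnail(grid, n):
    """Repeat the n x n reduction until a single tile (the thumbnail) remains."""
    while len(grid) > 1:
        grid = reduce_grid(grid, n)
    return grid[0][0]
```

Each pass divides the number of tiles by n², so a billion tiles with n = 32 would need about six passes, and each pass only ever handles images of n·T pixels across.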

Randomized algorithms such as random sampling may be feasible.

Because the combined image is so large, even a linear-time algorithm may be too slow, let alone a method of higher complexity.

A quick calculation shows that each thumbnail pixel depends on about 1000 images, so the residual error of any single sample does not affect the result much.
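The "about 1000" comes from dividing the image count by the number of thumbnail pixels, assuming 10⁹ images and a 1024×1024 thumbnail:

$$
\frac{10^{9}}{1024 \times 1024} = \frac{10^{9}}{1\,048\,576} \approx 954 \ \text{images per thumbnail pixel}
$$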

The algorithm could be described as follows:

For each thumbnail pixel coordinate, randomly choose N of the images that fall on the corresponding location, sample M pixels from each of them, and average the sampled values. Do the same for every other thumbnail pixel.
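A minimal Python sketch of that sampling scheme, for grayscale values. The callbacks are assumptions, not part of the answer: `images_for_pixel(tx, ty)` is a hypothetical function returning the list of images covering a thumbnail pixel, and `read_pixel(image, x, y)` is a hypothetical accessor that reads one source pixel (in practice, e.g. via OpenCV or a decoder that avoids loading the whole file).

```python
import random


def sample_thumbnail_pixel(block_images, read_pixel, tile_size, n_images, m_pixels, rng):
    """Estimate one thumbnail pixel from N random images x M random pixels each."""
    chosen = rng.sample(block_images, min(n_images, len(block_images)))
    total = 0.0
    count = 0
    for img in chosen:
        for _ in range(m_pixels):
            x = rng.randrange(tile_size)
            y = rng.randrange(tile_size)
            total += read_pixel(img, x, y)
            count += 1
    return total / count


def sample_thumbnail(images_for_pixel, read_pixel, thumb_w, thumb_h,
                     tile_size, n_images, m_pixels, seed=0):
    """Build the whole thumbnail; images_for_pixel/read_pixel are hypothetical callbacks."""
    rng = random.Random(seed)
    return [
        [
            sample_thumbnail_pixel(images_for_pixel(tx, ty), read_pixel,
                                   tile_size, n_images, m_pixels, rng)
            for tx in range(thumb_w)
        ]
        for ty in range(thumb_h)
    ]
```

The cost is roughly `thumb_w * thumb_h * N * M` pixel reads, independent of the total number of images, which is what makes the approach attractive at this scale.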

However, if your images are combined randomly, the result will tend toward a uniform grayscale image with values around 0.5, because by the Central Limit Theorem the variance of each thumbnail pixel tends to zero. So you have to make sure the combined image itself has structure.
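A small simulation illustrates the effect, assuming (as the "randomly combined" case implies) that source pixel values are independent and uniform on [0, 1]. Each simulated thumbnail pixel averages `k` such values; the function name and trial count are illustrative only.

```python
import random
import statistics


def averaged_pixel_spread(k, trials=1000, seed=0):
    """Simulate thumbnail pixels that each average k independent uniform(0, 1) values.

    Returns (mean, population standard deviation) across the simulated pixels.
    """
    rng = random.Random(seed)
    pixels = [sum(rng.random() for _ in range(k)) / k for _ in range(trials)]
    return statistics.fmean(pixels), statistics.pstdev(pixels)
```

For uniform inputs the spread should shrink like 1/√(12k): about 0.29 for k = 1, but only about 0.009 for k = 1000, which is roughly how many images feed each thumbnail pixel here. Every pixel then lands very close to 0.5, giving the flat gray result described above.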

PS: OpenCV would be a good choice for this.
