As is well known, a WaveFront (AMD OpenCL) is very similar to a warp (CUDA): http://research.cs.wisc.edu/multifacet/papers/isca14-channels.pdf

GPGPU languages, like OpenCL™ and CUDA, are called SIMT because they map the programmer’s view of a thread to a SIMD lane. Threads executing on the same SIMD unit in lockstep are called a wavefront (warp in CUDA).

It is also known that AMD recommends performing a reduction (adding up numbers) through local memory and, to speed up the reduction, suggests using vector types: http://amd-dev.wpengine.netdna-cdn.com/wordpress/media/2013/01/AMD_OpenCL_Tutorial_SAAHPC2010.pdf

But are there any optimized register-to-register data-exchange instructions between work-items (threads) in a WaveFront, such as

`int __shfl_down(int var, unsigned int delta, int width=warpSize);`

for a warp in CUDA: https://devblogs.nvidia.com/parallelforall/faster-parallel-reductions-kepler/ or such as

`__m128i _mm_shuffle_epi8(__m128i a, __m128i b);`

for SIMD lanes on x86_64: https://software.intel.com/en-us/node/524215

Such a shuffle instruction can, for example, perform a reduction (add up the numbers) of 8 elements held by 8 threads/lanes in 3 steps (log2 8 = 3), without any synchronization and without using any cache, local, or shared memory (which has a latency of ~3 cycles per access).
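To make the step count concrete, here is a minimal host-side C sketch (not GPU code) that models a `__shfl_down`-style tree reduction. Each loop over `lane` stands in for one SIMD instruction executed by all lanes in lockstep. The names `shfl_down_model`, `shfl_reduce_sum`, and `MAX_LANES` are hypothetical, and the model says nothing about real cycle counts.

```c
#include <assert.h>
#include <string.h>

#define MAX_LANES 64 /* assumption: upper bound for the modelled warp/WaveFront size */

/* Model of CUDA __shfl_down(var, delta, width) with width == n:
   lane i receives the value held by lane i + delta; if that source lane
   is outside the width, lane i keeps its own value (upper lanes unchanged). */
void shfl_down_model(const int *in, int *out, int n, int delta) {
    for (int lane = 0; lane < n; ++lane) {
        int src = lane + delta;
        out[lane] = (src < n) ? in[src] : in[lane];
    }
}

/* Tree reduction over n lanes: log2(n) shuffle-and-add steps,
   after which lane 0 holds the total (3 steps for n = 8). */
int shfl_reduce_sum(const int *vals, int n) {
    assert(n > 0 && n <= MAX_LANES && (n & (n - 1)) == 0); /* power of two */
    int cur[MAX_LANES], recv[MAX_LANES];
    memcpy(cur, vals, (size_t)n * sizeof cur[0]);
    for (int delta = n / 2; delta > 0; delta /= 2) {
        shfl_down_model(cur, recv, n, delta); /* one "instruction" for all lanes */
        for (int lane = 0; lane < n; ++lane)
            cur[lane] += recv[lane];          /* each lane adds what it received */
    }
    return cur[0];
}
```

For example, calling `shfl_reduce_sum` on `{1, 2, 3, 4, 5, 6, 7, 8}` with `n = 8` runs the loop for `delta` = 4, 2, 1 and returns 36 from lane 0; no lane ever touches a shared array, which is the point of the register-to-register exchange.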

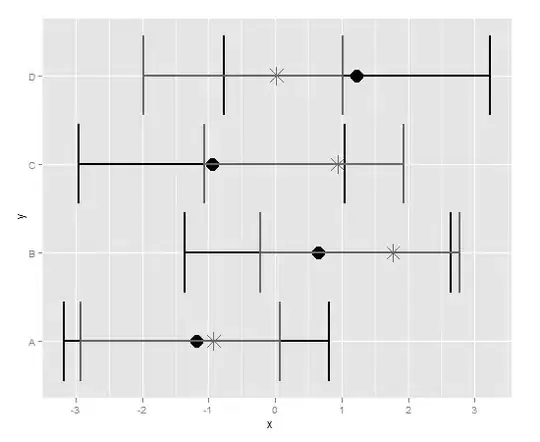

That is, threads send their values directly to the registers of other threads: https://devblogs.nvidia.com/parallelforall/faster-parallel-reductions-kepler/

Or can we, in OpenCL, only use the built-in `gentypen shuffle(gentypem x, ugentypen mask)`, which works only on vector types such as float16/uint16 within each work-item (thread), but not between work-items (threads) in a WaveFront: https://www.khronos.org/registry/OpenCL/sdk/1.1/docs/man/xhtml/shuffle.html
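For contrast, a minimal C sketch of what OpenCL's `shuffle()` does: it permutes the components of one vector owned by a single work-item, so it can speed up a horizontal sum inside that work-item (in the spirit of AMD's vector-type advice) but cannot move data between work-items. `uint4_m`, `shuffle4_model`, `add4`, and `hsum4_model` are hypothetical host-side stand-ins, not OpenCL types or built-ins.

```c
/* Hypothetical stand-in for OpenCL's uint4 (4 components of one work-item). */
typedef struct { unsigned s[4]; } uint4_m;

/* Model of OpenCL shuffle(x, mask) for n = m = 4: result.s[i] = x.s[mask.s[i] & 3].
   Only the low ilogb(2m - 1) = 2 bits of each mask element are used. */
uint4_m shuffle4_model(uint4_m x, uint4_m mask) {
    uint4_m r;
    for (int i = 0; i < 4; ++i)
        r.s[i] = x.s[mask.s[i] & 3u];
    return r;
}

/* Component-wise addition, like the + operator on OpenCL vectors. */
uint4_m add4(uint4_m a, uint4_m b) {
    uint4_m r;
    for (int i = 0; i < 4; ++i)
        r.s[i] = a.s[i] + b.s[i];
    return r;
}

/* Horizontal sum of the 4 components owned by ONE work-item:
   two shuffle + add steps; afterwards every component holds the total. */
unsigned hsum4_model(uint4_m x) {
    const uint4_m swap_halves = {{2, 3, 0, 1}};
    const uint4_m swap_pairs  = {{1, 0, 3, 2}};
    uint4_m t = add4(x, shuffle4_model(x, swap_halves)); /* {a+c, b+d, c+a, d+b} */
    t = add4(t, shuffle4_model(t, swap_pairs));          /* {a+b+c+d, ...} */
    return t.s[0];
}
```

With `x = {1, 2, 3, 4}`, `hsum4_model(x)` returns 10 after two shuffle-and-add steps. Every permutation stays inside one `uint4_m`, which mirrors why this built-in cannot replace an inter-work-item shuffle.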

Can we use something like shuffle() for register-to-register data exchange between work-items (threads) in a WaveFront that is faster than data exchange via local memory?

Are there AMD OpenCL instructions for intra-WaveFront register-to-register data exchange, like the intra-warp instructions `__any()`, `__all()`, `__ballot()`, `__shfl()` in CUDA: http://on-demand.gputechconf.com/gtc/2015/presentation/S5151-Elmar-Westphal.pdf

Warp vote functions:

- `__any(predicate)` returns non-zero if the predicate is non-zero for any thread in the warp
- `__all(predicate)` returns non-zero if the predicate is non-zero for all threads in the warp
- `__ballot(predicate)` returns a bit mask in which the bits of the threads whose predicate is non-zero are set
- `__shfl(value, thread)` returns `value` from the requested thread (but only if that thread also performed a `__shfl()` operation)
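The semantics listed above can be written down as a small host-side C model over the per-lane values of one 32-lane warp. This is a sketch for illustration only: `any_model`, `all_model`, `ballot_model`, `shfl_model`, and `WARP` are hypothetical names, the model assumes every lane participates, and a 64-lane GCN WaveFront would need 64 lanes and a 64-bit ballot mask.

```c
#include <stdint.h>

#define WARP 32 /* CUDA warp size used by this model */

/* Each function takes the per-lane inputs of one warp and returns
   what every lane would receive from the corresponding CUDA intrinsic. */

/* __any: non-zero if the predicate is non-zero on at least one lane. */
int any_model(const int pred[WARP]) {
    for (int i = 0; i < WARP; ++i)
        if (pred[i]) return 1;
    return 0;
}

/* __all: non-zero only if the predicate is non-zero on every lane. */
int all_model(const int pred[WARP]) {
    for (int i = 0; i < WARP; ++i)
        if (!pred[i]) return 0;
    return 1;
}

/* __ballot: bit i of the result is set iff lane i's predicate is non-zero. */
uint32_t ballot_model(const int pred[WARP]) {
    uint32_t mask = 0;
    for (int i = 0; i < WARP; ++i)
        if (pred[i]) mask |= (uint32_t)1 << i;
    return mask;
}

/* __shfl(value, srcLane): the calling lane reads `value` from lane
   srcLane modulo the warp width. */
int shfl_model(const int value[WARP], unsigned src_lane) {
    return value[src_lane % WARP];
}
```

A lane-level shuffle like `shfl_model` is the primitive the question is asking about; the vote functions only combine one bit per lane.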

CONCLUSION:

As is known, OpenCL 2.0 introduced sub-groups, with a SIMD execution model akin to WaveFronts (see the question "Does the official OpenCL 2.2 standard support the WaveFront?").

For sub-groups, the AMD OpenCL Programming User Guide lists the following functions (page 160): http://amd-dev.wpengine.netdna-cdn.com/wordpress/media/2013/12/AMD_OpenCL_Programming_User_Guide2.pdf

- `int sub_group_all(int predicate)`: the same as CUDA `__all(predicate)`
- `int sub_group_any(int predicate)`: the same as CUDA `__any(predicate)`

But OpenCL has no equivalents of these CUDA functions:

- `__ballot(predicate)`
- `__shfl(value, thread)`

There are only the Intel-specific built-in shuffle functions in OpenCL Extension #35 (Version 4, August 28, 2016, Final Draft): `intel_sub_group_shuffle`, `intel_sub_group_shuffle_down`, `intel_sub_group_shuffle_up`: https://www.khronos.org/registry/OpenCL/extensions/intel/cl_intel_subgroups.txt

OpenCL also has functions that are usually implemented with shuffle functions, but they do not cover everything that can be implemented with shuffle functions:

- `<gentype> sub_group_broadcast(<gentype> x, uint sub_group_local_id);`
- `<gentype> sub_group_reduce_<op>(<gentype> x);`
- `<gentype> sub_group_scan_exclusive_<op>(<gentype> x);`
- `<gentype> sub_group_scan_inclusive_<op>(<gentype> x);`
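To illustrate how these built-ins can be expressed with shuffles, here is a minimal host-side C sketch that builds an inclusive add-scan from log2(n) shuffle-up steps (a Hillis-Steele scan) and derives the exclusive scan, the reduction, and the broadcast from it. All names are hypothetical, `<op>` is fixed to addition, and real drivers may implement `sub_group_reduce_add` and the others differently.

```c
#include <assert.h>
#include <string.h>

#define MAX_LANES 64 /* assumption: upper bound on the modelled sub-group size */

/* Model of a shuffle-up: lane i receives the value of lane i - delta;
   the lowest delta lanes have no source and keep their own value. */
static void shfl_up_model(const int *in, int *out, int n, int delta) {
    for (int lane = 0; lane < n; ++lane)
        out[lane] = (lane >= delta) ? in[lane - delta] : in[lane];
}

/* Like sub_group_scan_inclusive_add: log2(n) shuffle-up + add steps. */
void scan_inclusive_add_model(const int *x, int *out, int n) {
    assert(n > 0 && n <= MAX_LANES);
    int recv[MAX_LANES];
    memcpy(out, x, (size_t)n * sizeof *out);
    for (int delta = 1; delta < n; delta *= 2) {
        shfl_up_model(out, recv, n, delta);
        for (int lane = delta; lane < n; ++lane) /* lanes < delta add nothing */
            out[lane] += recv[lane];
    }
}

/* Like sub_group_scan_exclusive_add: inclusive result shifted up by one
   lane (one more shuffle-up), with the identity 0 in lane 0. */
void scan_exclusive_add_model(const int *x, int *out, int n) {
    int incl[MAX_LANES];
    scan_inclusive_add_model(x, incl, n);
    out[0] = 0;
    for (int lane = 1; lane < n; ++lane)
        out[lane] = incl[lane - 1];
}

/* Like sub_group_reduce_add: the last lane of the inclusive scan,
   which would then be broadcast to all lanes. */
int reduce_add_model(const int *x, int n) {
    int incl[MAX_LANES];
    scan_inclusive_add_model(x, incl, n);
    return incl[n - 1];
}

/* Like sub_group_broadcast(x, id): a shuffle where every lane reads lane id. */
int broadcast_model(const int *x, int n, int id) {
    assert(id >= 0 && id < n);
    return x[id];
}
```

For `x = {3, 1, 4, 1, 5}` the inclusive scan is `{3, 4, 8, 9, 14}`, the exclusive scan is `{0, 3, 4, 8, 9}`, and the reduction is 14. The fixed patterns here (shift up, read one lane) are exactly what the sub-group built-ins expose, whereas a general shuffle lets each lane pick an arbitrary source.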

Summary:

Shuffle functions remain more flexible and ensure the fastest possible communication between threads through direct register-to-register data exchange. But the functions `sub_group_broadcast`/`_reduce`/`_scan` do not guarantee direct register-to-register data exchange, and they are less flexible.