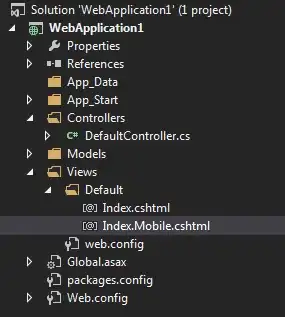

I'm running into a problem using NLTK corpora (in particular the stopwords corpus) in AWS Lambda. I'm aware that the corpora need to be downloaded, so I downloaded them with `nltk.download('stopwords')` and included them in the zip file used to upload the Lambda modules, under `nltk_data/corpora/stopwords`.
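One way to rule out a packaging problem is to confirm that the English stopword list really sits at `nltk_data/corpora/stopwords/english` relative to the root of the zip, and not under an extra parent folder. The sketch below uses only the standard library; the helper names `locate_stopwords` and `check_zip` are hypothetical, and the expected path is taken from the description above.

```python
import zipfile

# Where the corpus is described as being packaged, relative to the zip root.
EXPECTED = "nltk_data/corpora/stopwords/english"


def locate_stopwords(names, expected=EXPECTED):
    """Return (found_at_root, other_locations) for a list of archive member names."""
    at_root = expected in names
    # Near misses, e.g. the corpus nested under an extra top-level directory.
    elsewhere = [n for n in names if n.endswith(expected) and n != expected]
    return at_root, elsewhere


def check_zip(zip_path):
    """Open a deployment zip (path or file object) and report where the list lives."""
    with zipfile.ZipFile(zip_path) as zf:
        return locate_stopwords(zf.namelist())
```

If the layout matches the description, `check_zip` on the uploaded archive should return `(True, [])`; a non-empty second element would point at the directory the corpus actually ended up in.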

The usage in the code is as follows:

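```python
import nltk

# Register the bundled data directory before the corpus is first accessed.
nltk.data.path.append("/nltk_data")

from nltk.corpus import stopwords

stop_words = stopwords.words('english')
```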

This produces the following error in the Lambda log output:

```
module initialization error:
**********************************************************************
Resource u'corpora/stopwords' not found. Please use the NLTK
Downloader to obtain the resource: >>> nltk.download()
Searched in:
- '/home/sbx_user1062/nltk_data'
- '/usr/share/nltk_data'
- '/usr/local/share/nltk_data'
- '/usr/lib/nltk_data'
- '/usr/local/lib/nltk_data'
- '/nltk_data'
**********************************************************************
```
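For reference, the search list above shows `/nltk_data`, a directory at the filesystem root. As far as I know, Lambda extracts the deployment package to the directory given by the `LAMBDA_TASK_ROOT` environment variable (normally `/var/task`), so a folder shipped at the root of the zip would appear at `/var/task/nltk_data` rather than `/nltk_data`. The sketch below builds the path from that variable, assuming `nltk_data` sits at the zip root; the helper names `nltk_data_dir` and `load_stopwords` are hypothetical. Setting the `NLTK_DATA` environment variable in the function configuration should have a similar effect, since NLTK reads it when `nltk.data` is imported.

```python
import os


def nltk_data_dir(env=None, here=None):
    """Return the directory the zipped nltk_data folder should unpack to.

    Assumes nltk_data sits at the root of the deployment zip. On Lambda the
    package is extracted to LAMBDA_TASK_ROOT; otherwise fall back to `here`
    or, failing that, the directory containing this file.
    """
    env = os.environ if env is None else env
    root = env.get("LAMBDA_TASK_ROOT") or here or os.path.dirname(os.path.abspath(__file__))
    return os.path.join(root, "nltk_data")


def load_stopwords():
    """Register the bundled path *before* the lazy corpus loader is used."""
    import nltk  # imported lazily so nltk_data_dir works without NLTK installed

    data_dir = nltk_data_dir()
    if data_dir not in nltk.data.path:
        nltk.data.path.append(data_dir)
    from nltk.corpus import stopwords

    return set(stopwords.words("english"))
```

Keeping the path logic in a separate function makes it easy to check locally without NLTK, while `load_stopwords` would typically be called once at module level in the handler file.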

I have also tried to load the data directly by including:

```python
nltk.data.load("/nltk_data/corpora/stopwords/english")
```

This yields a different error:

```
module initialization error: Could not determine format for file:///stopwords/english based on its file extension; use the "format" argument to specify the format explicitly.
```
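The stopword files have no extension, which is why `nltk.data.load` cannot infer a format on its own. They are plain text with one word per line, so reading the file directly sidesteps format detection altogether. The sketch below assumes the file exists at whatever path is passed in; `read_wordlist` is a hypothetical helper, not part of NLTK.

```python
from pathlib import Path


def read_wordlist(path):
    """Read an NLTK-style word list: plain UTF-8 text, one entry per line."""
    text = Path(path).read_text(encoding="utf-8")
    # Skip blank lines and surrounding whitespace.
    return [line.strip() for line in text.splitlines() if line.strip()]


# A roughly equivalent NLTK call should pass the format explicitly, e.g.
#   nltk.data.load("file:" + path, format="text").splitlines()
# where "text" asks NLTK to return the decoded file contents as a string.
```

With the bundled corpus this would be called with the full path to `corpora/stopwords/english` inside the extracted package directory.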

It's possible that Lambda has a problem loading the data from the zip and that it needs to be stored externally, say on S3, but that seems a bit strange.

Any idea what format the stopwords file should be loaded with?

Does anyone know where I could be going wrong?