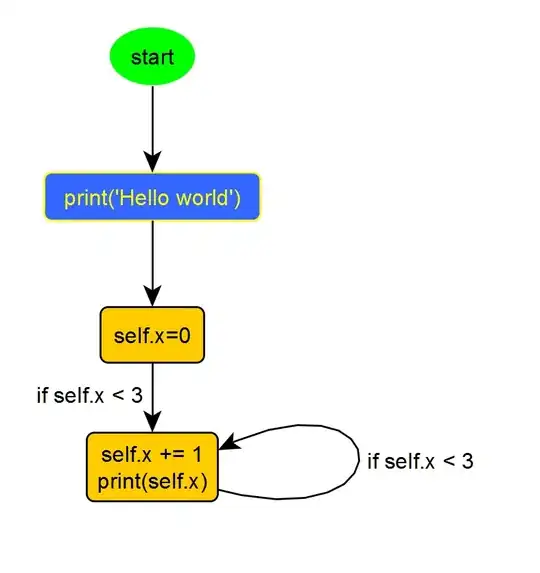

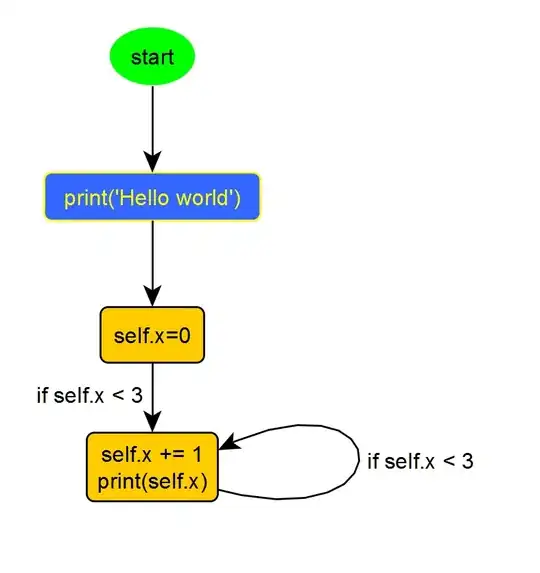

> session.run(model) will initialize variable each time

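That is correct: running the initializer resets the variable to 0 on every iteration. The issue is that each time `x = x + 1` executes, a new addition op is created in the graph and the Python name `x` is rebound to that op's output. After the *i*-th iteration, `x` therefore refers to the variable followed by *i* chained additions of 1, so `session.run(x)` prints 1, 2, 3, 4, 5 rather than 1 every time. That explains the results you obtain.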
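To make the mechanism concrete without TensorFlow, here is a toy lazy-evaluation model in plain Python. The classes `Variable` and `Add` are hypothetical stand-ins, not TensorFlow's API: `x + 1` only records a new node, and evaluating a node walks the whole chain beneath it. Rebinding `x` inside the loop grows the chain by one node per iteration, so resetting the variable cannot undo the extra additions. Building the addition once before the loop (roughly what defining `y = x + 1` outside the loop would do in TensorFlow) keeps the graph fixed.

```python
class Node:
    """Toy graph node: `node + c` records a new Add node instead of computing."""
    def __add__(self, constant):
        return Add(self, constant)


class Variable(Node):
    """Toy variable: holds a value that an 'initializer' can reset."""
    def __init__(self, initial_value):
        self.initial_value = initial_value
        self.value = None

    def initialize(self):
        self.value = self.initial_value

    def evaluate(self):
        return self.value


class Add(Node):
    """Toy addition op: computes only when evaluated, by evaluating its input."""
    def __init__(self, left, constant):
        self.left = left
        self.constant = constant

    def evaluate(self):
        return self.left.evaluate() + self.constant


def count_adds(node):
    """Number of Add nodes chained beneath `node`."""
    n = 0
    while isinstance(node, Add):
        n += 1
        node = node.left
    return n


def rebinding_loop(iterations=5):
    """Mimics the question: re-initialize, then rebind x = x + 1 each iteration."""
    var = Variable(0)
    x = var
    values, chain_sizes = [], []
    for _ in range(iterations):
        var.initialize()      # resets the variable to 0
        x = x + 1             # builds a NEW Add on top of the previous chain
        values.append(x.evaluate())
        chain_sizes.append(count_adds(x))
    return values, chain_sizes


def build_once_loop(iterations=5):
    """The addition is built once, before the loop; the graph never grows."""
    var = Variable(0)
    y = var + 1
    values = []
    for _ in range(iterations):
        var.initialize()
        values.append(y.evaluate())
    return values, count_adds(y)


print(rebinding_loop())   # ([1, 2, 3, 4, 5], [1, 2, 3, 4, 5])
print(build_once_loop())  # ([1, 1, 1, 1, 1], 1)
```

The second list returned by `rebinding_loop` is the number of additions in the chain, which mirrors the growing graphs plotted below.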

Graph after the first iteration:

After the second iteration:

After the third iteration:

After the fourth iteration:

After the fifth iteration:

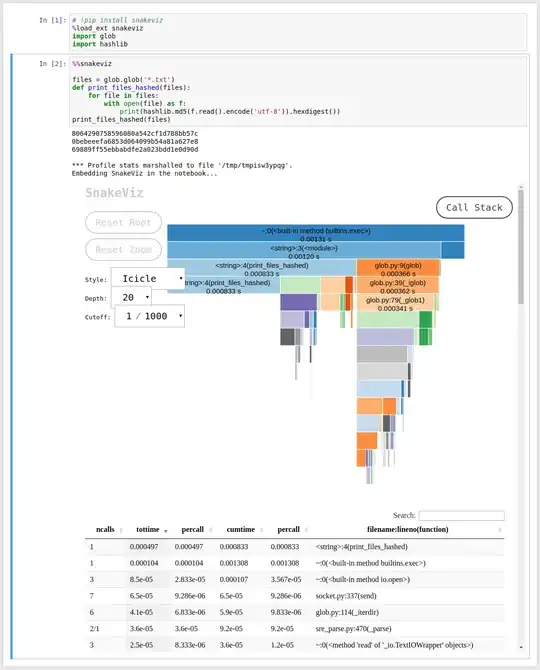

The code I used, mostly taken from Yaroslav Bulatov's answer to *How can I list all Tensorflow variables a node depends on?*:

```python
import tensorflow as tf  # TensorFlow 1.x API (tf.Session, tf.get_default_graph)
import matplotlib.pyplot as plt
import networkx as nx


def children(op):
    """Return the set of ops that consume any output of `op`."""
    return set(consumer for out in op.outputs for consumer in out.consumers())


def get_graph():
    """Creates dictionary {node: {child1, child2, ..},..} for current
    TensorFlow graph. Result is compatible with networkx/toposort"""
    ops = tf.get_default_graph().get_operations()
    return {op: children(op) for op in ops}


def plot_graph(G):
    '''Plot a DAG using NetworkX'''
    def mapping(node):
        return node.name  # label each node with its op name

    G = nx.DiGraph(G)
    nx.relabel_nodes(G, mapping, copy=False)
    nx.draw(G, cmap=plt.get_cmap('jet'), with_labels=True)
    plt.show()


x = tf.Variable(0, name='x')
model = tf.global_variables_initializer()

with tf.Session() as session:
    for i in range(5):
        session.run(model)     # resets the variable to 0
        x = x + 1              # adds a new "add" op; x now names its output
        print(session.run(x))
        plot_graph(get_graph())  # draw the graph after this iteration
```
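The `{op: consumers}` dictionary built by `get_graph` is enough to see why fetching the latest `x` runs every accumulated addition. Below is a stdlib-only sketch (Python 3.9+ `graphlib`) that takes such a children mapping and lists the ops a fetched node depends on, in dependency order. The op names are hypothetical, chosen to resemble the chain after three iterations (constant inputs omitted), and this is only an illustration of the idea, not how TensorFlow itself prunes the graph.

```python
from graphlib import TopologicalSorter


def ancestors(children, target):
    """Return (nodes from which `target` is reachable, incl. target, parent map)."""
    parents = {}
    for node, kids in children.items():
        for kid in kids:
            parents.setdefault(kid, set()).add(node)
    needed, stack = set(), [target]
    while stack:
        node = stack.pop()
        if node not in needed:
            needed.add(node)
            stack.extend(parents.get(node, ()))
    return needed, parents


def ops_to_run(children, target):
    """Ops that must execute to compute `target`, in dependency order."""
    needed, parents = ancestors(children, target)
    # graphlib expects {node: predecessors}; keep only the needed subgraph
    sub = {n: parents.get(n, set()) & needed for n in needed}
    return list(TopologicalSorter(sub).static_order())


# Hypothetical {op: consumers} mapping after three loop iterations
children = {
    "x/read": {"add"},
    "add": {"add_1"},
    "add_1": {"add_2"},
    "add_2": set(),
    "unrelated": set(),
}

print(ops_to_run(children, "add_2"))  # ['x/read', 'add', 'add_1', 'add_2']
```

Fetching the newest node pulls in every earlier addition, while an op outside the chain (`unrelated`) is never touched.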