I am not the sharpest tool in the shed, so please bear with me. I have successfully imported a CSV into SQL Server 2016, but the CSV had pipe characters (`|`) all through it, and they ended up in my table. I couldn't get rid of them and now need your help. I must either find a way to import the CSV while omitting the pipes, or find a way to remove the pipes that are already in my SQL table.

Here is what the website I got the CSV from says about these pipe characters:

> The major Open Data tables are provided in a non-standard format that allows dirty data to be imported as we are provided some raw data fields that can contain formatting and other unprintable characters that choke many data systems. In this bulk data, text fields are surrounded by the pipe character (ascii 124). Date and numeric fields are not. Commas separate all fields.
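Read this way, the format is ordinary CSV with `|` used as the quote character instead of `"`. A minimal sketch of that idea follows. It uses Python's standard `csv` module, which is a swapped-in tool and not something SQL Server provides, and `parse_line` is a hypothetical helper name. It splits one line from the file into fields and drops the pipes:

```python
import csv


def parse_line(line: str) -> list[str]:
    """Split one line of the pipe-quoted format into its field values.

    Assumes the format described above: commas separate fields and text
    fields are wrapped in '|', so '|' can be passed to the csv module as
    the quote character. The surrounding pipes are consumed, not kept.
    """
    reader = csv.reader([line], delimiter=",", quotechar="|")
    return next(reader)


sample = "|2016|,|H4GA02060|,|N00035294|,|Greg Duke (R)|,|R|,|GA02|,| |,|Y|,|Y|,|C|,|RC|,| |"
fields = parse_line(sample)
print(len(fields))  # 12
print(fields[:4])   # ['2016', 'H4GA02060', 'N00035294', 'Greg Duke (R)']
```

Because the pipes act as quotes, a comma inside a text field (for example, a hypothetical `|Smith, John (D)|`) stays inside one field instead of splitting it.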

Here is the query I used to import the CSV:

```sql
USE [open secrets]

CREATE TABLE cands16 (
    [Cycle] [char](6) NOT NULL,
    [FECCandID] [char](11) NOT NULL,
    [CID] [char](11) NULL,
    [FirstLastP] [varchar](52) NULL,
    [Party] [char](30) NULL,
    [DistIDRunFor] [varchar](600) NULL,
    [DistIDCurr] [varchar](600) NULL,
    [CurrCand] [char](30) NULL,
    [CycleCand] [char](30) NULL,
    [CRPICO] [char](30) NULL,
    [RecipCode] [char](5) NULL,
    [NoPacs] [varchar](200) NULL
) ON [PRIMARY]

-- Only commas are treated as separators here, so the pipes around
-- each text field are loaded into the columns along with the data.
BULK INSERT cands16
FROM 'C:\aaa open secrets\CampaignFin16\Cands16.txt'
WITH (
    FIELDTERMINATOR = ',',
    ROWTERMINATOR = '\n'
)
GO
```
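With only `FIELDTERMINATOR = ','`, BULK INSERT does not treat the pipes as quotes. SQL Server 2016's BULK INSERT also has no option for a custom quote character, because `FORMAT = 'CSV'` and `FIELDQUOTE` first appeared in SQL Server 2017. One workaround is to clean the file before importing it. Below is a minimal sketch in Python, not a definitive tool. It assumes a hypothetical helper name `convert_pipe_csv`, latin-1 encoding for the source file, and that tabs and line breaks inside a field can safely become spaces:

```python
import csv


def convert_pipe_csv(src_path: str, dst_path: str, encoding: str = "latin-1") -> int:
    """Rewrite a pipe-quoted file as plain tab-delimited text.

    Returns the number of rows written. The latin-1 default is an
    assumption; it is used because it can decode any byte.
    """
    rows = 0
    with open(src_path, newline="", encoding=encoding) as src, \
         open(dst_path, "w", newline="", encoding=encoding) as dst:
        for record in csv.reader(src, delimiter=",", quotechar="|"):
            if not record:  # skip blank lines
                continue
            # Tabs or line breaks inside a field would be read as
            # terminators, so replace them with spaces (lossy assumption).
            cleaned = [
                f.replace("\t", " ").replace("\r", " ").replace("\n", " ")
                for f in record
            ]
            # Write CRLF endings: BULK INSERT reads ROWTERMINATOR = '\n' as CRLF.
            dst.write("\t".join(cleaned) + "\r\n")
            rows += 1
    return rows
```

The same BULK INSERT can then point at the converted file with `FIELDTERMINATOR = '\t'` (which is also its default), and the columns arrive without pipes.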

Here is a small sample of my CSV:

```
|2016|,|H4GA02060|,|N00035294|,|Greg Duke (R)|,|R|,|GA02|,| |,|Y|,|Y|,|C|,|RC|,| |
|2016|,|H4GA02078|,|N00036257|,|Vivian Childs (R)|,|R|,|GA02|,| |,| |,| |,| |,|RN|,| |
|2016|,|H4GA04116|,|N00035798|,|Thomas Brown (D)|,|D|,|GA04|,| |,| |,| |,| |,|DN|,| |
|2016|,|H4GA04124|,|N00035862|,|Thomas Wight (D)|,|D|,|GA07|,| |,| |,| |,| |,|DN|,| |
|2016|,|H4GA06087|,|N00026160|,|Tom Price (R)|,|R|,|GA06|,|GA06|,|Y|,|Y|,|I|,|RW|,| |
|2016|,|H4GA08067|,|N00026163|,|Lynn A Westmoreland (R)|,|R|,|GA03|,|GA03|,| |,|Y|,|I|,|RI|,| |
|2016|,|H4GA09065|,|N00036258|,|Bernard Fontaine (R)|,|R|,|GA09|,| |,| |,|Y|,|C|,|RL|,| |
|2016|,|H4GA10071|,|N00035370|,|Mike Collins (R)|,|R|,|GA10|,| |,| |,| |,| |,|RN|,| |
|2016|,|H4GA11046|,|N00035321|,|Susan Davis (R)|,|R|,|GA11|,| |,| |,| |,| |,|RN|,| |
|2016|,|H4GA11053|,|N00002526|,|Bob Barr (R)|,|R|,|GA11|,| |,| |,| |,| |,|RN|,| |
|2016|,|H4GA11061|,|N00035347|,|Barry Loudermilk (R)|,|R|,|GA11|,|GA11|,|Y|,|Y|,|I|,|RW|,| |
```

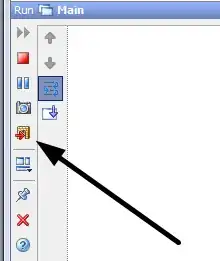

Here is a picture of what my table looks like: