I'm trying to submit a training job to Google Cloud ML using Facenet (a TensorFlow library for face recognition). Specifically, I'm using this part of the library (link is here), which trains the model.

On the Google Cloud ML side, I'm following this tutorial (link is here), which explains how to submit a training job.

I was able to submit the training job to Google Cloud ML, but it failed with an error. Here are some screenshots of the errors:

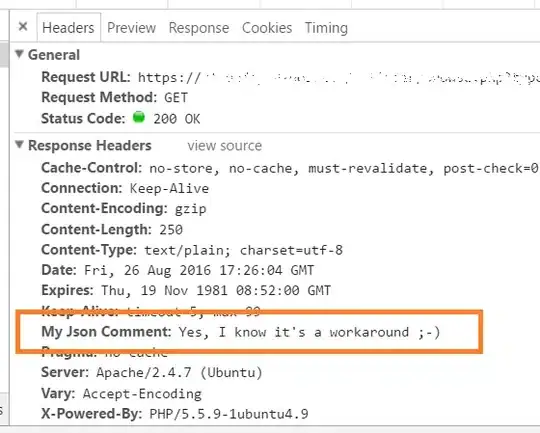

And here's an error from the Google Cloud job logs:

Here are more detailed screenshots of the Google Cloud job logs:

The job submission itself succeeded, and the job got as far as waiting for TensorFlow to start, but the error appeared right after that.

The command I used to submit the job is:

    gcloud ml-engine jobs submit training facetraining_test4 \
    --package-path=/Users/myname/Documents/projects/tf-projects/facenet/src/ \
    --module-name=/Users/myname/Documents/projects/tf-projects/facenet/src/facenet_train_classifier.py \
    --staging-bucket=gs://facenet-training-test \
    --region=asia-east1 \
    --config=/Users/myname/Documents/projects/tf-projects/facenet/none_config.yml \
    -- \
    --logs_base_dir=/Users/myname/Documents/projects/tf-projects/logs/facenet/ \
    --models_base_dir=/Users/myname/Documents/projects/tf-projects/models/facenet/ \
    --data_dir=/Users/myname/Documents/projects/tf-projects/facenet_datasets/employee_dataset/employee/employee_maxpy_mtcnnpy_182/ \
    --image_size=160 \
    --model_def=models.inception_resnet_v1 \
    --lfw_dir=/Users/myname/Documents/projects/tf-projects/facenet_datasets/lfw/lfw_mtcnnpy_160/ \
    --optimizer=RMSPROP \
    --learning_rate -1 \
    --max_nrof_epochs=80 \
    --keep_probability=0.8 \
    --learning_rate_schedule_file=/Users/myname/Documents/projects/tf-projects/facenet/data/learning_rate_schedule_classifier_casia.txt \
    --weight_decay=5e-5 \
    --center_loss_factor=1e-4 \
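
One sanity check that may be worth running before resubmitting: several of the arguments passed after `--` are absolute paths on my local machine, and the training code runs on Cloud ML workers rather than locally. The sketch below simply lists which arguments look like local paths. It assumes (not confirmed as the cause of the error above) that the workers cannot read the submitting machine's disk, so these values might need to be `gs://` URIs instead. The helper name `find_local_paths` is hypothetical and not part of Facenet or gcloud.

```python
# Hypothetical helper, not part of Facenet or gcloud.
# Assumption: Cloud ML workers cannot read files under /Users/... on the
# machine that submitted the job, so such values would be unreachable there.

def find_local_paths(args):
    """Return (flag, value) pairs whose value looks like a local absolute path."""
    local = []
    for arg in args:
        flag, sep, value = arg.partition("=")
        # Only "--flag=value" arguments are inspected; bare tokens are skipped.
        if sep and value.startswith("/"):
            local.append((flag, value))
    return local


# The user arguments from the command above (everything after the bare "--").
user_args = [
    "--logs_base_dir=/Users/myname/Documents/projects/tf-projects/logs/facenet/",
    "--models_base_dir=/Users/myname/Documents/projects/tf-projects/models/facenet/",
    "--data_dir=/Users/myname/Documents/projects/tf-projects/facenet_datasets/employee_dataset/employee/employee_maxpy_mtcnnpy_182/",
    "--image_size=160",
    "--model_def=models.inception_resnet_v1",
    "--lfw_dir=/Users/myname/Documents/projects/tf-projects/facenet_datasets/lfw/lfw_mtcnnpy_160/",
    "--optimizer=RMSPROP",
    "--learning_rate", "-1",
    "--max_nrof_epochs=80",
    "--keep_probability=0.8",
    "--learning_rate_schedule_file=/Users/myname/Documents/projects/tf-projects/facenet/data/learning_rate_schedule_classifier_casia.txt",
    "--weight_decay=5e-5",
    "--center_loss_factor=1e-4",
]

for flag, value in find_local_paths(user_args):
    print(f"{flag} points at a local path: {value}")
```

This only inspects the arguments; it does not contact Google Cloud or change the job in any way.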

Any suggestions on how to fix this? Thanks!