Do you think there is room for optimizations in the function haswon (see below)?

I noticed that changing the argument type from __int64 to unsigned __int64 made the function faster, so I thought there might still be room for optimization.

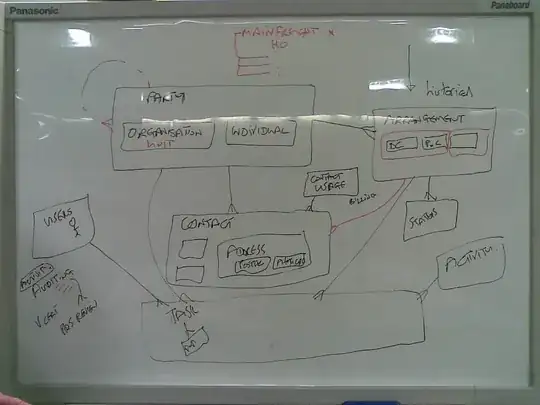

In more detail: I am writing a Connect Four game. Recently I used the profiler Very Sleepy and noticed that the function haswon uses much of the CPU time. The function uses a bitboard representation of the Connect Four board for one player. I found the function itself in the sources of the Fhourstones benchmark. The bitboard representation is as follows:

    .  .  .  .  .  .  .  TOP
    5 12 19 26 33 40 47
    4 11 18 25 32 39 46
    3 10 17 24 31 38 45
    2  9 16 23 30 37 44
    1  8 15 22 29 36 43
    0  7 14 21 28 35 42  BOTTOM
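To make the layout concrete, here is a minimal sketch of how a cell maps to a bit under this numbering. The names `kColumnStride`, `bit_index`, `place_stone` and `layout_example` are hypothetical helpers for illustration, not part of the original sources, and `std::uint64_t` stands in for `unsigned __int64`.

```cpp
#include <cassert>
#include <cstdint>

// Bits per column: six playable cells plus one unused bit on top
// (the dotted row in the diagram). Hypothetical constant name.
const int kColumnStride = 7;

// Bit index of the cell at (column, row); row 0 is the bottom row.
inline int bit_index(int column, int row) {
    return column * kColumnStride + row;
}

// Return a copy of the board with the bit for (column, row) set.
inline std::uint64_t place_stone(std::uint64_t board, int column, int row) {
    return board | (std::uint64_t(1) << bit_index(column, row));
}

// Spot checks against the numbers shown in the diagram.
inline void layout_example() {
    assert(bit_index(0, 0) == 0);   // bottom-left cell
    assert(bit_index(3, 2) == 23);  // fourth column, third row from the bottom
    assert(bit_index(6, 5) == 47);  // top-right playable cell
}
```

The unused seventh bit in each column is what keeps a vertical run from spilling over into the next column when the board is shifted.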

The function:

    // return whether newboard includes a win
    bool haswon(unsigned __int64 newboard)
    {
        unsigned __int64 y = newboard & (newboard >> 6);
        if (y & (y >> 2 * 6)) // check \ diagonal
            return true;
        y = newboard & (newboard >> 7);
        if (y & (y >> 2 * 7)) // check horizontal -
            return true;
        y = newboard & (newboard >> 8);
        if (y & (y >> 2 * 8)) // check / diagonal
            return true;
        y = newboard & (newboard >> 1);
        if (y & (y >> 2)) // check vertical |
            return true;
        return false;
    }
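For readers unfamiliar with the trick, the following sketch spells out what each of the four checks does. The first AND marks every bit that starts a pair in the given direction, and the second AND marks every bit that starts two such pairs two steps apart, i.e. four in a line. The names `four_in_line`, `haswon_portable` and `direction_example` are hypothetical, and `std::uint64_t` is used in place of `unsigned __int64` so the sketch also builds outside Visual Studio.

```cpp
#include <cassert>
#include <cstdint>

// True if the board holds four set bits spaced `step` bits apart.
inline bool four_in_line(std::uint64_t board, int step) {
    // Bit i of `pairs` is set when bits i and i + step are both set.
    std::uint64_t pairs = board & (board >> step);
    // A hit means bits i, i + step, i + 2*step and i + 3*step are all set.
    return (pairs & (pairs >> (2 * step))) != 0;
}

// Same four directions and shift distances as haswon above.
inline bool haswon_portable(std::uint64_t board) {
    return four_in_line(board, 6)    // "\" diagonal
        || four_in_line(board, 7)    // horizontal
        || four_in_line(board, 8)    // "/" diagonal
        || four_in_line(board, 1);   // vertical
}

inline void direction_example() {
    const std::uint64_t one = 1;
    // Bottom row, columns 0..3: bits 0, 7, 14, 21.
    assert(haswon_portable(one | (one << 7) | (one << 14) | (one << 21)));
    // Only three in a row is not a win.
    assert(!haswon_portable(one | (one << 7) | (one << 14)));
}
```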

Thanks!

Edit: The CPU is x86 (32-bit architecture), and I'm using the compiler from Visual Studio 2008 Express Edition. The optimization flags are /O2 /Oi /GL.

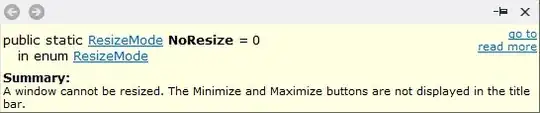

I tried the function haswon2 that Ben Jackson suggested. The assemblies produced by the Microsoft compiler with the default release optimization flags (/O2 /Oi /GL) show nearly no runtime difference. It looks like the VC compiler, unlike gcc, cannot take advantage of the fact that it need not evaluate each condition in strict order.

Results:

haswon original:

haswon2 from Ben Jackson:
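For context, a variant that does not force the conditions to be evaluated in strict order can be sketched as below: all four directions are computed unconditionally and combined with a bitwise OR, so there is a single test at the end. This is only an illustration of the general idea under that assumption, not necessarily the exact haswon2 code that was suggested. The names `haswon_branchless` and `branchless_example` are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Compute all four direction checks, then test the combined result once.
inline bool haswon_branchless(std::uint64_t newboard) {
    std::uint64_t d1 = newboard & (newboard >> 6);  // pairs along the "\" diagonal
    std::uint64_t h  = newboard & (newboard >> 7);  // pairs along a row
    std::uint64_t d2 = newboard & (newboard >> 8);  // pairs along the "/" diagonal
    std::uint64_t v  = newboard & (newboard >> 1);  // pairs along a column
    return ((d1 & (d1 >> 12)) |
            (h  & (h  >> 14)) |
            (d2 & (d2 >> 16)) |
            (v  & (v  >> 2))) != 0;
}

inline void branchless_example() {
    assert(haswon_branchless(0xF));   // four stacked in the first column
    assert(!haswon_branchless(0x7));  // only three stacked
}
```

Whether this helps depends on the compiler and on how often the early returns in the original fire, so it is worth measuring rather than assuming.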

Edit2: Assembly of haswon:

    00401A10 mov eax,dword ptr [esp+4]
    00401A14 mov ecx,dword ptr [esp+8]
    00401A18 push ebx
    00401A19 push esi
    00401A1A push edi
    00401A1B mov edx,eax
    00401A1D mov edi,ecx
    00401A1F shrd edx,edi,6
    00401A23 mov esi,edx
    00401A25 shr edi,6
    00401A28 and esi,eax
    00401A2A and edi,ecx
    00401A2C mov edx,esi
    00401A2E mov ebx,edi
    00401A30 shrd edx,ebx,0Ch
    00401A34 shr ebx,0Ch
    00401A37 and edx,esi
    00401A39 and ebx,edi
    00401A3B or edx,ebx
    00401A3D je `anonymous namespace'::haswon+35h (401A45h)
    00401A3F mov al,1
    00401A41 pop edi
    00401A42 pop esi
    00401A43 pop ebx
    00401A44 ret
    00401A45 mov edx,eax
    00401A47 mov edi,ecx
    00401A49 shrd edx,edi,7
    00401A4D mov esi,edx
    00401A4F shr edi,7
    00401A52 and esi,eax
    00401A54 and edi,ecx
    00401A56 mov edx,esi
    00401A58 mov ebx,edi
    00401A5A shrd edx,ebx,0Eh
    00401A5E shr ebx,0Eh
    00401A61 and edx,esi
    00401A63 and ebx,edi
    00401A65 or edx,ebx
    00401A67 jne `anonymous namespace'::haswon+2Fh (401A3Fh)
    00401A69 mov edx,eax
    00401A6B mov edi,ecx
    00401A6D shrd edx,edi,8
    00401A71 mov esi,edx
    00401A73 shr edi,8
    00401A76 and esi,eax
    00401A78 and edi,ecx
    00401A7A mov edx,esi
    00401A7C mov ebx,edi
    00401A7E shrd edx,ebx,10h
    00401A82 shr ebx,10h
    00401A85 and edx,esi
    00401A87 and ebx,edi
    00401A89 or edx,ebx
    00401A8B jne `anonymous namespace'::haswon+2Fh (401A3Fh)
    00401A8D mov edx,eax
    00401A8F mov esi,ecx
    00401A91 shrd edx,esi,1
    00401A95 shr esi,1
    00401A97 and esi,ecx
    00401A99 and edx,eax
    00401A9B mov eax,edx
    00401A9D mov ecx,esi
    00401A9F shrd eax,ecx,2
    00401AA3 shr ecx,2
    00401AA6 and eax,edx
    00401AA8 and ecx,esi
    00401AAA or eax,ecx
    00401AAC jne `anonymous namespace'::haswon+2Fh (401A3Fh)
    00401AAE pop edi
    00401AAF pop esi
    00401AB0 xor al,al
    00401AB2 pop ebx
    00401AB3 ret
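As a reading aid for the listing, the sketch below mirrors in C++ what each direction check costs on a 32-bit target: the 64-bit board lives in two 32-bit registers, so every shift becomes a `shrd`/`shr` pair and every AND becomes two `and` instructions, with one `or` to merge the halves before the jump. The type `Halves` and the functions `shift_right_64`, `four_in_line_32` and `halves_example` are hypothetical names introduced for illustration only.

```cpp
#include <cassert>
#include <cstdint>

// A 64-bit value held as two 32-bit halves, as on 32-bit x86.
struct Halves {
    std::uint32_t lo;
    std::uint32_t hi;
};

// 64-bit logical right shift by n, valid for 0 < n < 32.
inline Halves shift_right_64(Halves v, unsigned n) {
    Halves r;
    r.lo = (v.lo >> n) | (v.hi << (32 - n));  // corresponds to shrd lo,hi,n
    r.hi = v.hi >> n;                         // corresponds to shr hi,n
    return r;
}

// One direction check, done the way the listing does it.
inline bool four_in_line_32(Halves board, unsigned step) {
    Halves s = shift_right_64(board, step);
    Halves pairs = { board.lo & s.lo, board.hi & s.hi };  // two and instructions
    Halves t = shift_right_64(pairs, 2 * step);
    return ((pairs.lo & t.lo) | (pairs.hi & t.hi)) != 0;  // and, and, or, then jump
}

inline void halves_example() {
    // Bottom row, columns 3..6: bits 21, 28 (low half) and 35, 42 (high half).
    Halves b = { (1u << 21) | (1u << 28), (1u << 3) | (1u << 10) };
    assert(four_in_line_32(b, 7));
}
```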