Is it possible to check how many documents remain in the corpus after applying prune_vocabulary in the text2vec package?

Here is an example that loads a dataset and prunes the vocabulary:

    library(text2vec)
    library(data.table)
    library(tm)

    # Load movie review dataset
    data("movie_review")
    setDT(movie_review)
    setkey(movie_review, id)
    set.seed(2016L)

    # Tokenize
    prep_fun = tolower
    tok_fun = word_tokenizer
    it_train = itoken(movie_review$review,
                      preprocessor = prep_fun,
                      tokenizer = tok_fun,
                      ids = movie_review$id,
                      progressbar = FALSE)

    # Generate vocabulary
    vocab = create_vocabulary(it_train,
                              stopwords = tm::stopwords())

    # Prune vocabulary
    # How do I ascertain how many documents got kicked out of my training set because of the pruning criteria?
    pruned_vocab = prune_vocabulary(vocab,
                                    term_count_min = 10,
                                    doc_proportion_max = 0.5,
                                    doc_proportion_min = 0.001)

    # Create document-term matrix with the new pruned vocabulary vectorizer
    vectorizer = vocab_vectorizer(pruned_vocab)
    dtm_train = create_dtm(it_train, vectorizer)
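
One way to frame the document count, as a minimal sketch: as far as I can tell, `create_dtm` keeps one row per input document, so a document that loses all of its terms to pruning shows up as an all-zero row rather than disappearing. Under that assumption, counting empty rows of the DTM gives the number of documents effectively removed. The helper name `count_empty_docs` and the toy matrix below are made up for illustration; they are not part of text2vec.

```r
library(Matrix)

# Hypothetical helper: number of documents (rows) with no terms left
count_empty_docs = function(dtm) {
  sum(Matrix::rowSums(dtm) == 0)
}

# Toy DTM: 3 documents x 2 terms, where document "b" has no terms
toy_dtm = Matrix::sparseMatrix(
  i = c(1, 3), j = c(1, 2), x = c(2, 1),
  dims = c(3, 2),
  dimnames = list(c("a", "b", "c"), c("t1", "t2"))
)

n_empty = count_empty_docs(toy_dtm)
print(n_empty)
```

With the objects above, the same call would be `count_empty_docs(dtm_train)`, and `nrow(dtm_train) - count_empty_docs(dtm_train)` would be the number of documents that still contain at least one term.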

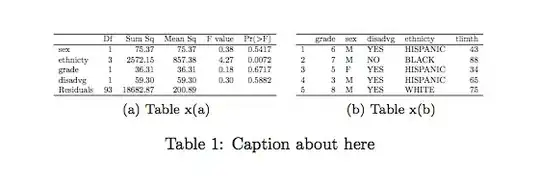

Is there an easy way to understand how aggressive the term_count_min and doc_proportion_min parameters are on my text corpus? I am trying to do something similar to what the stm package offers with its plotRemoved function, which produces a plot like this:
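
A rough sketch of how a plotRemoved-style diagnostic could be assembled by hand, since I am not aware of a built-in text2vec equivalent: sweep over candidate `term_count_min` values, rebuild the DTM for each, and record how many documents, terms, and tokens are lost relative to the unpruned DTM. The threshold grid and the `removed` matrix are arbitrary choices made for illustration, and the sketch assumes the `itoken` iterator can be reused across calls, as in the code above.

```r
library(text2vec)
library(Matrix)

data("movie_review")

it = itoken(movie_review$review,
            preprocessor = tolower,
            tokenizer = word_tokenizer,
            ids = movie_review$id,
            progressbar = FALSE)

vocab = create_vocabulary(it)

# Baseline: DTM built from the unpruned vocabulary
dtm_full = create_dtm(it, vocab_vectorizer(vocab))

# Candidate thresholds (arbitrary grid, adjust to the corpus)
thresholds = c(1, 5, 10, 50, 100)

# For each threshold, count what is lost relative to the baseline
removed = sapply(thresholds, function(th) {
  pruned = prune_vocabulary(vocab, term_count_min = th)
  dtm = create_dtm(it, vocab_vectorizer(pruned))
  c(docs   = sum(Matrix::rowSums(dtm) == 0),   # documents left with no terms
    terms  = ncol(dtm_full) - ncol(dtm),       # vocabulary entries dropped
    tokens = sum(dtm_full) - sum(dtm))         # token occurrences dropped
})
colnames(removed) = thresholds

# One panel per quantity, similar in spirit to stm::plotRemoved
par(mfrow = c(1, 3))
for (what in rownames(removed)) {
  plot(thresholds, removed[what, ], type = "b",
       xlab = "term_count_min", ylab = paste("removed", what))
}
```

The same loop could vary `doc_proportion_min` instead by passing it to `prune_vocabulary` in place of `term_count_min`.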