As worded, this is not mathematically possible. Let D = S - T. Your constraint that T <= S means S - T = D >= 0. Since S and T are normally distributed, so is D because linear combinations of normals are normal. You can't have a normal distribution with a finite lower bound. Consequently, you can't simultaneously meet requirements of normality for S and T and meet your constraint.

You can construct non-normal solutions by generating T with any distribution of your choice, generating D independently with a strictly positive distribution (such as gamma, Weibull, uniform(0,?), truncated normal,...), and creating S = T + D. Since expectations and variances of independent random variables sum, you can get your desired mean and s.d. for both T and S by tuning the parameterization of D appropriately. The results can even look pretty bell-shaped, but strictly speaking won't be normal.

Since variances of independent random variables are additive and must be positive, S = T + D only works if the variance of S is larger than the variance of T. The more general solution is to generate whichever of S and T has the smaller variance. If it's T, add D to get S. If it's S, subtract D to get T.

Since you said in comments that approximations are okay, here's an example. Suppose you want the smaller distribution to have a μsmaller = 10 and σsmaller = 3, and the larger to have μlarger = 15 and σlarger = 5. Then the difference between them should have a strictly positive distribution with μdelta = 5 and σdelta = 4 (σlarger = sqrt(32 + 42) = 5). I chose a gamma distribution for delta, parameterized to have the desired mean and standard deviation. Here it is in Python:

import random

alpha = 25.0 / 16.0

beta = 16.0 / 5.0

for _ in range(100000):

smaller = random.gauss(10, 3)

delta = random.gammavariate(alpha, beta)

print(smaller, delta, smaller + delta)

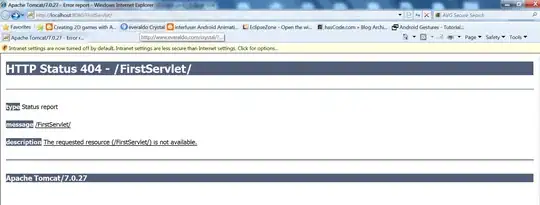

I saved the results to a file, and imported them into JMP. Here's a snapshot of my analysis:

As you can see, smaller and larger have the desired means and standard deviations. You can also confirm that all of the deltas are positive, so larger is always greater than smaller. Finally, the normal q-q plot above larger's histogram shows that the result, while unimodal and roughly bell-shaped, is not normal because the plotted points don't fall along a straight line.

Another answer has proposed matching the two distributions by generating a single random uniform and using it as the input for inversion with both CDFs:

q = random()

t = inverseCDF(q, mu_T, sd_T)

s = inverseCDF(q, mu_S, sd_S)

This is a well-known correlation induction strategy called "Common Random Numbers" in which q is the same quantile being used to generate both distributions via inversion. With symmetric distributions, such as the normal, this produces a correlation of 1 between T and S. A correlation of 1 tells us that (either) one is a linear transformation of the other.

In fact, there's a simpler way to accomplish this for normals without having to do two inversions. Generate one of T or S, by whatever mechanism you wish—inversion, polar method, Ziggurat method, etc. Then use the standard transformation to convert it to a standard normal, and from there to the other normal. If we let T be the normal that we generate directly, then

S = (σS / σT) * (T - μT) + μS.

We would like to have T <= S for all possible quantiles to meet the objectives of the original problem. So under what circumstances can we have S < T? Since S is a function of T, that implies

(σT/σT) * (T - μT) + μS < T

which after some algebra becomes

T * (σS - σT) / σS < μT - μS * (σT/σS).

This reduces to 3 cases.

σS = σT: In this case, T gets eliminated and the originally desired outcome of T <= S is achieved as long as μT <= μS.

σS > σT: In this case, T > S when T < (μT * σS / (σS - σT)) - (μS * σT / (σS - σT)).

σS < σT: T > S when T > (μT * σS / (σS - σT)) - (μS * σT / (σS - σT)) because of the sign flip induced in the result in #2 by having (σS - σT) < 0.

Bottom line - the only case in which the correlation induction scheme works is when the variances of the two distributions are equal. Unequal variances will result in outcomes where T > S.

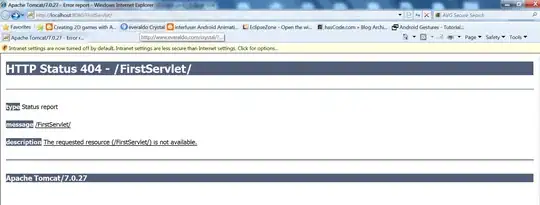

The following picture may give some intuition. The red curve is a standard normal with mean 0 and standard deviation 1. The green curve is a normal with mean 1 and standard deviation 2. We can see that because the green curve is wider, there is some quantile below which it produces smaller outcomes than the red. If T, the "lower" distribution, had the larger variability there would be some quantile above which it would produce larger outcomes.