I am teaching myself OpenCL in Java using the JogAmp JOCL libraries. One of my tests creates a Mandelbrot map. I have four tests: simple serial, parallel using the Java Executor interface, OpenCL for a single device and OpenCL for multiple devices. The first three are OK, the last one is not. When I compare the (correct) output of the single-device version with the incorrect output of the multiple-device solution, I notice that the colors are about the same but that the output of the last one is garbled. I think I understand where the problem lies, but I can't solve it.

The trouble is (IMHO) that OpenCL uses one-dimensional (vector) buffers and that I have to translate the output into a matrix. I think that this translation is incorrect. I parallelize the code by dividing the Mandelbrot map into rectangles: the width (xSize) is divided by the number of tasks and the height (ySize) is preserved. I think I pass that information correctly into the kernel, but translating the result back is incorrect.
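A side observation on the slicing itself, as a minimal sketch rather than the definitive fix. Two details of the host code suggest the slice geometry could drift from the pixel grid: `sliceWidth = xSize / taskCount` truncates, so when `xSize` is not a multiple of `taskCount` the rightmost `xSize % taskCount` columns are never computed; and `sliceX = (pXMax - pXMin) / taskCount` equals `sliceWidth * xStep` only when that division is exact (assuming `xStep = (pXMax - pXMin) / xSize`, which the post does not show). The hypothetical helpers `firstColumn`, `sliceWidth` and `sliceX0` below derive each slice from pixel columns instead.

```java
// Hypothetical helpers (not part of JOCL): split xSize pixel columns into taskCount
// vertical slices whose left edges fall exactly on pixel columns.
class SliceGeometry {

    /** First pixel column of slice tc; the remainder columns go to the first slices. */
    static int firstColumn(int tc, int xSize, int taskCount) {
        int base = xSize / taskCount;   // minimum slice width
        int extra = xSize % taskCount;  // columns left over by the integer division
        return tc * base + Math.min(tc, extra);
    }

    /** Width in pixels of slice tc (either base or base + 1). */
    static int sliceWidth(int tc, int xSize, int taskCount) {
        return firstColumn(tc + 1, xSize, taskCount) - firstColumn(tc, xSize, taskCount);
    }

    /** Complex-plane x0 of slice tc, computed from its first pixel column. */
    static double sliceX0(int tc, int xSize, int taskCount, double pXMin, double xStep) {
        return pXMin + firstColumn(tc, xSize, taskCount) * xStep;
    }

    public static void main(String[] args) {
        int xSize = 1000, taskCount = 64;
        double pXMin = -2.0, pXMax = 1.0;
        double xStep = (pXMax - pXMin) / xSize;  // assumed definition of xStep

        int covered = 0;
        for (int tc = 0; tc < taskCount; tc++) covered += sliceWidth(tc, xSize, taskCount);
        System.out.println("columns covered: " + covered + " of " + xSize); // prints "columns covered: 1000 of 1000"
        System.out.println("x0 of slice 1: " + sliceX0(1, xSize, taskCount, pXMin, xStep));
    }
}
```

With uneven widths, the block for task `tc` inside a shared output buffer would start at `firstColumn(tc, xSize, taskCount) * ySize` instead of `tc * sliceSize`.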

```java
CLMultiContext mc = CLMultiContext.create (deviceList);
try
{
    CLSimpleContextFactory factory = CLQueueContextFactory.createSimple (programSource);
    CLCommandQueuePool<CLSimpleQueueContext> pool = CLCommandQueuePool.create (factory, mc);
    IntBuffer dataC = Buffers.newDirectIntBuffer (xSize * ySize);
    IntBuffer subBufferC = null;
    int tasksPerQueue = 16;
    int taskCount = pool.getSize () * tasksPerQueue;
    int sliceWidth = xSize / taskCount;
    int sliceSize = sliceWidth * ySize;
    int bufferSize = sliceSize * taskCount;
    double sliceX = (pXMax - pXMin) / (double) taskCount;
    String kernelName = "Mandelbrot";

    out.println ("sliceSize: " + sliceSize);
    out.println ("sliceWidth: " + sliceWidth);
    out.println ("sS*h:" + sliceWidth * ySize);

    List<CLTestTask> tasks = new ArrayList<CLTestTask> (taskCount);
    for (int i = 0; i < taskCount; i++)
    {
        subBufferC = Buffers.slice (dataC, i * sliceSize, sliceSize);
        tasks.add (new CLTestTask (kernelName, i, sliceWidth, xSize, ySize, maxIterations,
                pXMin + i * sliceX, pYMin, xStep, yStep, subBufferC));
    } // for

    pool.invokeAll (tasks);

    // submit blocking immediately
    for (CLTestTask task: tasks) pool.submit (task).get ();

    // Ready: read the buffer into the frequencies matrix
    // according to me this is the part that goes wrong
    int w = taskCount * sliceWidth;
    for (int tc = 0; tc < taskCount; tc++)
    {
        int offset = tc * sliceWidth;
        for (int y = 0; y < ySize; y++)
        {
            for (int x = offset; x < offset + sliceWidth; x++)
            {
                frequencies [y][x] = dataC.get (y * w + x);
            } // for
        } // for
    } // for

    pool.release();
```

The last loop is the culprit, meaning that there is (I think) a mismatch between how the kernel encodes the output and how the host translates it back. The kernel:

```c
kernel void Mandelbrot
(
    const int width,
    const int height,
    const int maxIterations,
    const double x0,
    const double y0,
    const double stepX,
    const double stepY,
    global int *output
)
{
    unsigned ix = get_global_id (0);
    unsigned iy = get_global_id (1);
    if (ix >= width) return;
    if (iy >= height) return;

    double r = x0 + ix * stepX;
    double i = y0 + iy * stepY;
    double x = 0;
    double y = 0;
    double magnitudeSquared = 0;
    int iteration = 0;

    while (magnitudeSquared < 4 && iteration < maxIterations)
    {
        double x2 = x*x;
        double y2 = y*y;
        y = 2 * x * y + i;
        x = x2 - y2 + r;
        magnitudeSquared = x2+y2;
        iteration++;
    }

    output [iy * width + ix] = iteration;
}
```

The last statement encodes the information into the vector. The single-device version uses this kernel as well. The only difference is that in the multi-device version I changed `width` and `x0`: as you can see in the Java code, I pass xSize / number_of_tasks as `width` and pXMin + i * sliceX as `x0` (instead of pXMin).
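To make the layout concrete: with `width = sliceWidth`, each task writes `output[iy * sliceWidth + ix]` into its own block of `sliceSize = sliceWidth * ySize` ints, and the blocks sit one after another in `dataC`. Under that reading, global pixel `(x, y)` of task `tc` is at `tc * sliceSize + y * sliceWidth + (x - tc * sliceWidth)`, not at `y * w + x`. The sketch below checks this in plain Java without OpenCL; it uses an `int[]` instead of the `IntBuffer` (with the real buffer, `dataC.get(index)` replaces the array access), and `pixelValue` is a hypothetical stand-in for the kernel's result.

```java
// Simulates the kernel's slice-local layout output[iy * sliceWidth + ix] for every task,
// then copies it back into a [ySize][taskCount * sliceWidth] matrix. No OpenCL needed.
class SliceReadBack {

    /** Hypothetical stand-in for the kernel: any value that identifies the global pixel (x, y). */
    static int pixelValue(int x, int y) {
        return y * 10_000 + x;
    }

    /** Fills dataC as the tasks do: task tc owns [tc * sliceSize, (tc + 1) * sliceSize). */
    static int[] simulateKernels(int taskCount, int sliceWidth, int ySize) {
        int sliceSize = sliceWidth * ySize;
        int[] dataC = new int[sliceSize * taskCount];
        for (int tc = 0; tc < taskCount; tc++)
            for (int iy = 0; iy < ySize; iy++)
                for (int ix = 0; ix < sliceWidth; ix++)
                    dataC[tc * sliceSize + iy * sliceWidth + ix] = pixelValue(tc * sliceWidth + ix, iy);
        return dataC;
    }

    /** Read-back that matches the slice-local layout written by the kernel. */
    static int[][] gather(int[] dataC, int taskCount, int sliceWidth, int ySize) {
        int sliceSize = sliceWidth * ySize;
        int[][] frequencies = new int[ySize][taskCount * sliceWidth];
        for (int tc = 0; tc < taskCount; tc++) {
            int base = tc * sliceSize;     // start of this task's block in dataC
            int offset = tc * sliceWidth;  // first matrix column covered by this task
            for (int y = 0; y < ySize; y++)
                for (int lx = 0; lx < sliceWidth; lx++)
                    frequencies[y][offset + lx] = dataC[base + y * sliceWidth + lx];
        }
        return frequencies;
    }

    public static void main(String[] args) {
        int taskCount = 4, sliceWidth = 5, ySize = 3;
        int[][] m = gather(simulateKernels(taskCount, sliceWidth, ySize), taskCount, sliceWidth, ySize);
        boolean ok = true;
        for (int y = 0; y < ySize; y++)
            for (int x = 0; x < taskCount * sliceWidth; x++)
                ok &= m[y][x] == pixelValue(x, y);
        System.out.println(ok ? "matrix matches" : "mismatch"); // prints "matrix matches"
    }
}
```

The design choice here is to keep the kernel unchanged and adapt only the host-side indexing; the alternative would be to give the kernel the full row length and a column offset, which changes its signature.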

I have been working on this for several days now and have removed quite a few bugs, but I can no longer see what I am doing wrong. Help is greatly appreciated.

Edit 1

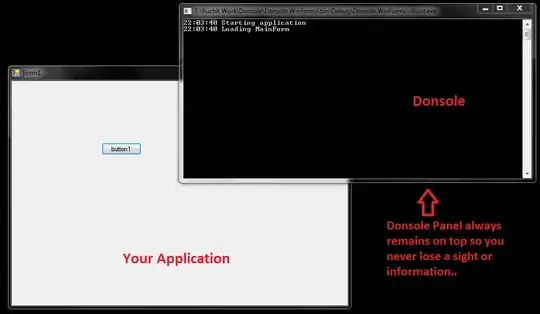

@Huseyin asked for an image. The first screenshot was computed by the OpenCL single-device version.

The second screenshot is the multi-device version, computed with exactly the same parameters.

Edit 2

There was a question about how I enqueue the buffers. As you can see in the code above, I have a `List<CLTestTask>` to which I add the tasks and in which the buffer is enqueued. CLTestTask is an inner class; its code is below.

```java
final class CLTestTask implements CLTask
{
    CLBuffer clBufferC = null;
    Buffer bufferSliceC;
    String kernelName;
    int index;
    int sliceWidth;
    int width;
    int height;
    int maxIterations;
    double pXMin;
    double pYMin;
    double x_step;
    double y_step;

    public CLTestTask
    (
        String kernelName,
        int index,
        int sliceWidth,
        int width,
        int height,
        int maxIterations,
        double pXMin,
        double pYMin,
        double x_step,
        double y_step,
        Buffer bufferSliceC
    )
    {
        this.index = index;
        this.sliceWidth = sliceWidth;
        this.width = width;
        this.height = height;
        this.maxIterations = maxIterations;
        this.pXMin = pXMin;
        this.pYMin = pYMin;
        this.x_step = x_step;
        this.y_step = y_step;
        this.kernelName = kernelName;
        this.bufferSliceC = bufferSliceC;
    } /*** CLTestTask ***/

    public Buffer execute (final CLSimpleQueueContext qc)
    {
        final CLCommandQueue queue = qc.getQueue ();
        final CLContext context = qc.getCLContext ();
        final CLKernel kernel = qc.getKernel (kernelName);
        clBufferC = context.createBuffer (bufferSliceC);
        out.println (pXMin + " " + sliceWidth);

        kernel
            .putArg (sliceWidth)
            .putArg (height)
            .putArg (maxIterations)
            .putArg (pXMin) // + index * x_step)
            .putArg (pYMin)
            .putArg (x_step)
            .putArg (y_step)
            .putArg (clBufferC)
            .rewind ();

        queue
            .put2DRangeKernel (kernel, 0, 0, sliceWidth, height, 0, 0)
            .putReadBuffer (clBufferC, true);

        return clBufferC.getBuffer ();
    } /*** execute ***/
} /*** Inner Class: CLTestTask ***/
```
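The read-back in `execute` only reaches `dataC` if the slice is a view on it. As far as I understand JOCL, `context.createBuffer(bufferSliceC)` keeps `bufferSliceC` as the CLBuffer's host-side buffer, so the blocking `putReadBuffer(clBufferC, true)` copies the device result into that slice. The plain `java.nio` check below assumes JogAmp's `Buffers.slice(buffer, offset, size)` behaves like the hypothetical helper `slice` (a view sharing content with the parent); it shows that task `i`'s data ends up as one contiguous block starting at `i * sliceSize`, so `dataC` is a sequence of per-task blocks rather than interleaved full-width rows.

```java
import java.nio.IntBuffer;

// Plain java.nio demonstration: a slice is a view on the parent buffer,
// so writing into slice i lands at offset i * sliceSize of the parent.
class SliceViewDemo {

    /** Hypothetical stand-in, assumed equivalent to Buffers.slice(parent, offset, size). */
    static IntBuffer slice(IntBuffer parent, int offset, int size) {
        IntBuffer dup = parent.duplicate(); // own position/limit, shared content
        dup.position(offset);
        dup.limit(offset + size);
        return dup.slice();                 // view of [offset, offset + size)
    }

    public static void main(String[] args) {
        int taskCount = 3, sliceSize = 4;
        IntBuffer dataC = IntBuffer.allocate(taskCount * sliceSize);
        for (int i = 0; i < taskCount; i++) {
            IntBuffer sub = slice(dataC, i * sliceSize, sliceSize);
            for (int k = 0; k < sliceSize; k++) sub.put(k, 100 * i + k); // absolute put into the view
        }
        StringBuilder sb = new StringBuilder();
        for (int j = 0; j < dataC.capacity(); j++) sb.append(dataC.get(j)).append(' ');
        System.out.println(sb.toString().trim()); // prints "0 1 2 3 100 101 102 103 200 201 202 203"
    }
}
```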