I am trying to implement a CNN with Theano, using the Keras library. My data set consists of 55 alphabet images, each 28x28.

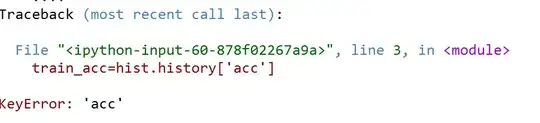

In the last part I get this error:

    train_acc=hist.history['acc']
    KeyError: 'acc'

Any help would be much appreciated. Thanks.

This is part of my code:

    from keras.models import Sequential
    from keras.models import Model
    from keras.layers.core import Dense, Dropout, Activation, Flatten
    from keras.layers.convolutional import Convolution2D, MaxPooling2D
    from keras.optimizers import SGD, RMSprop, adam
    from keras.utils import np_utils
    import matplotlib
    import matplotlib.pyplot as plt
    import matplotlib.cm as cm
    from urllib.request import urlretrieve
    import pickle
    import os
    import gzip
    import numpy as np
    import theano
    import lasagne
    from lasagne import layers
    from lasagne.updates import nesterov_momentum
    from nolearn.lasagne import NeuralNet
    from nolearn.lasagne import visualize
    from sklearn.metrics import classification_report
    from sklearn.metrics import confusion_matrix
    from PIL import Image
    import PIL.Image
    #from Image import *
    import webbrowser
    from numpy import *
    from sklearn.utils import shuffle
    from sklearn.cross_validation import train_test_split
    from tkinter import *
    from tkinter.ttk import *
    import tkinter
    from keras import backend as K
    K.set_image_dim_ordering('th')

%%%%%%%%%%

    batch_size = 10
    # number of output classes
    nb_classes = 6
    # number of epochs to train
    nb_epoch = 5
    # input image dimensions
    img_rows, img_clos = 28,28
    # number of channels
    img_channels = 3
    # number of convolutional filters to use
    nb_filters = 32
    # size of pooling area for max pooling
    nb_pool = 2
    # convolution kernel size
    nb_conv = 3

%%%%%%%%

    model = Sequential()
    model.add(Convolution2D(nb_filters, nb_conv, nb_conv,
                            border_mode='valid',
                            input_shape=(1, img_rows, img_clos)))
    convout1 = Activation('relu')
    model.add(convout1)
    model.add(Convolution2D(nb_filters, nb_conv, nb_conv))
    convout2 = Activation('relu')
    model.add(convout2)
    model.add(MaxPooling2D(pool_size=(nb_pool, nb_pool)))
    model.add(Dropout(0.5))
    model.add(Flatten())
    model.add(Dense(128))
    model.add(Activation('relu'))
    model.add(Dropout(0.5))
    model.add(Dense(nb_classes))
    model.add(Activation('softmax'))
    model.compile(loss='categorical_crossentropy', optimizer='adadelta')

%%%%%%%%%%%%

    hist = model.fit(X_train, Y_train, batch_size=batch_size, nb_epoch=nb_epoch,
                     show_accuracy=True, verbose=1, validation_data=(X_test, Y_test))
    hist = model.fit(X_train, Y_train, batch_size=batch_size, nb_epoch=nb_epoch,
                     show_accuracy=True, verbose=1, validation_split=0.2)

%%%%%%%%%%%%%%

    train_loss=hist.history['loss']
    val_loss=hist.history['val_loss']
    train_acc=hist.history['acc']
    val_acc=hist.history['val_acc']
    xc=range(nb_epoch)
    #xc=range(on_epoch_end)
    plt.figure(1,figsize=(7,5))
    plt.plot(xc,train_loss)
    plt.plot(xc,val_loss)
    plt.xlabel('num of Epochs')
    plt.ylabel('loss')
    plt.title('train_loss vs val_loss')
    plt.grid(True)
    plt.legend(['train','val'])
    print(plt.style.available) # use bmh, classic,ggplot for big pictures
    plt.style.use(['classic'])
    plt.figure(2,figsize=(7,5))
    plt.plot(xc,train_acc)
    plt.plot(xc,val_acc)
    plt.xlabel('num of Epochs')
    plt.ylabel('accuracy')
    plt.title('train_acc vs val_acc')
    plt.grid(True)
    plt.legend(['train','val'],loc=4)
    #print plt.style.available # use bmh, classic,ggplot for big pictures
    plt.style.use(['classic'])
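To narrow the error down, it may help to check which keys the history dictionary actually contains before indexing it. The sketch below does not depend on Keras: `example_history` is a hypothetical stand-in for `hist.history` with made-up values, and the helper name `first_available` and the candidate key names are assumptions for illustration only. Depending on the Keras version and on how the model was compiled, the accuracy entries may be stored under a different name or may not be recorded at all.

```python
def first_available(history, candidates):
    """Return the first key in `candidates` that is present in `history`.

    `history` is assumed to be a plain dict shaped like `hist.history`
    (metric name -> list of per-epoch values). If none of the candidates
    is present, raise a KeyError that lists the keys that do exist.
    """
    for key in candidates:
        if key in history:
            return key
    raise KeyError(
        "none of %r found; available keys: %r"
        % (list(candidates), sorted(history))
    )


# Hypothetical stand-in for hist.history when only the loss was recorded.
example_history = {"loss": [0.9, 0.7], "val_loss": [1.0, 0.8]}

# Inspect the recorded keys before indexing into the dict.
print(sorted(example_history))

# Look up a key that is known to be present in this example.
loss_key = first_available(example_history, ["loss"])
train_loss_example = example_history[loss_key]
```

With the real object the call would look like `first_available(hist.history, ['acc', 'accuracy'])`. If that raises, the message shows which metrics were recorded during training.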