I have about 1000 sensors that output data throughout the day. Each sensor outputs about 100,000 points per day. When I query the data, I am only interested in the data from a given sensor on a given day; I don't do any cross-sensor queries. The time series are unevenly spaced and I need to keep the full time resolution, so I cannot resample them into fixed-interval arrays (e.g., one point per second).
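For scale, the figures above multiply out as follows. This is a quick sketch that assumes exactly 1000 sensors at 100,000 points each and a 365-day year; the real numbers are approximate.

```python
# Back-of-the-envelope data volume, using the approximate figures above.
SENSORS = 1_000
POINTS_PER_SENSOR_PER_DAY = 100_000


def points_per_day(sensors: int = SENSORS,
                   per_sensor: int = POINTS_PER_SENSOR_PER_DAY) -> int:
    """Total measurements written per day across all sensors."""
    return sensors * per_sensor


def points_per_year(days: int = 365) -> int:
    """Total measurements accumulated over `days` days (365-day year assumed)."""
    return points_per_day() * days


if __name__ == "__main__":
    print(f"{points_per_day():,} points/day")    # 100,000,000 points/day
    print(f"{points_per_year():,} points/year")  # 36,500,000,000 points/year
```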

I plan to store data over many years, and I am wondering which scheme is best:

1. One collection per (day, sensor) pair, which adds 1000 collections of about 100,000 documents each to the database every day.

2. One collection per sensor: a fixed set of 1000 collections, each growing by about 100,000 documents per day.
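A minimal sketch of what each layout means for collection naming and for the query that fetches one (sensor, day). The collection names (`sensor_<id>_<YYYYMMDD>` and `sensor_<id>`) and the timestamp field `ts` are hypothetical; the post does not specify them. The filter is a plain dict in MongoDB query syntax, so nothing here needs a running server.

```python
from datetime import date, datetime, timedelta
from typing import Tuple


def collection_scheme1(sensor_id: int, day: date) -> str:
    """Scheme 1: one collection per (sensor, day) pair (hypothetical naming)."""
    return f"sensor_{sensor_id}_{day:%Y%m%d}"


def collection_scheme2(sensor_id: int) -> str:
    """Scheme 2: one collection per sensor (hypothetical naming)."""
    return f"sensor_{sensor_id}"


def day_filter(day: date) -> dict:
    """Scheme 2 needs a time-range filter to isolate one day.
    The timestamp field name "ts" is an assumption."""
    start = datetime(day.year, day.month, day.day)
    return {"ts": {"$gte": start, "$lt": start + timedelta(days=1)}}


def collections_after(days: int, sensors: int = 1_000) -> Tuple[int, int]:
    """Collection counts (scheme 1, scheme 2) after `days` days of data."""
    return sensors * days, sensors


if __name__ == "__main__":
    d = date(2017, 6, 1)  # arbitrary example day
    print(collection_scheme1(42, d))  # sensor_42_20170601
    print(collection_scheme2(42), day_filter(d))
    print(collections_after(365))     # (365000, 1000)
```

With scheme 1 the collection name does the selection and the read is an unfiltered `find()`; with scheme 2 the range filter relies on an index on the timestamp field. Under scheme 1 the collection count grows linearly: at 1000 new collections per day, about 20,000 collections correspond to roughly 20 days of data.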

Scheme 1 intuitively seems faster to query. I am using MongoDB 3.4, which has no limit on the number of collections in a database.

Scheme 2 seems cleaner, but I am afraid the collections will become huge and that queries will gradually slow down as each collection grows.

I am leaning toward scheme 1, but I might be wrong. Any advice?

Update:

I followed the advice in this article:

https://bluxte.net/musings/2015/01/21/efficient-storage-non-periodic-time-series-mongodb/

Instead of storing one document per measurement, each document now holds 128 measurements plus `startDate` and `nextDate` fields. This reduces the number of documents, and therefore the index size, but I am still not sure how to organize the collections.
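The bucketing described above can be sketched in plain Python. This is one plausible reading of the scheme, not the article's code: each document holds up to 128 measurements, `startDate` is the first timestamp in the bucket, and `nextDate` is assumed to be the first timestamp of the following bucket (`None` for the newest, still-open one). The field names `sensor`, `measurements`, `t` and `v` are hypothetical.

```python
from datetime import datetime, timedelta
from typing import List, Optional, Tuple

BUCKET_SIZE = 128  # measurements per document, as described above

Measurement = Tuple[datetime, float]  # (timestamp, value)


def make_buckets(sensor_id: int, measurements: List[Measurement],
                 size: int = BUCKET_SIZE) -> List[dict]:
    """Group measurements (sorted by time here) into documents of at most
    `size` points. nextDate is the first timestamp of the next bucket, or
    None for the last bucket (assumed semantics)."""
    points = sorted(measurements, key=lambda m: m[0])
    buckets = []
    for i in range(0, len(points), size):
        chunk = points[i:i + size]
        next_start: Optional[datetime] = (
            points[i + size][0] if i + size < len(points) else None
        )
        buckets.append({
            "sensor": sensor_id,
            "startDate": chunk[0][0],
            "nextDate": next_start,
            "measurements": [{"t": t, "v": v} for t, v in chunk],
        })
    return buckets


if __name__ == "__main__":
    t0 = datetime(2017, 6, 1)  # arbitrary example day
    # 300 unevenly spaced points: the gap between samples grows with i
    pts = [(t0 + timedelta(seconds=i * i / 10), float(i)) for i in range(300)]
    docs = make_buckets(42, pts)
    print([len(d["measurements"]) for d in docs])  # [128, 128, 44]
```

At about 100,000 points per sensor per day this gives roughly 780 bucket documents per sensor per day (100,000 / 128 ≈ 781), or about 780,000 documents per day across 1000 sensors instead of 100 million.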

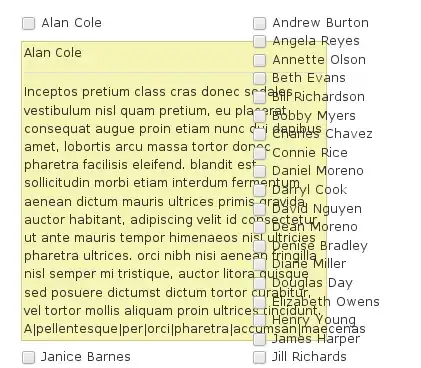

When I query, I only want the data for a single (date, sensor) pair, which is why I thought scheme 1 might speed up reads. I currently have about 20,000 collections in my database, and listing all of them takes a very long time, which makes me think that having so many collections is not a good idea.
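For comparison, here is a sketch of how a single (date, sensor) read could look if all bucket documents lived in one collection rather than one collection per pair. It assumes hypothetical fields `sensor` and `startDate` and, importantly, that the writer closes the current bucket at midnight so no bucket spans two days. Under that assumption the filter is an equality on `sensor` plus a bounded range on `startDate`, which matches the prefix of a compound index on `(sensor, startDate)`.

```python
from datetime import date, datetime, timedelta
from typing import Tuple

# Compound index keys in the (field, direction) list form pymongo accepts
INDEX_KEYS = [("sensor", 1), ("startDate", 1)]


def day_bounds(day: date) -> Tuple[datetime, datetime]:
    """Return [midnight, next midnight) for the given day."""
    start = datetime(day.year, day.month, day.day)
    return start, start + timedelta(days=1)


def sensor_day_filter(sensor_id: int, day: date) -> dict:
    """Filter selecting all buckets of one sensor for one day.
    Assumes buckets never cross midnight, so each bucket for that day
    has startDate in [00:00, next 00:00)."""
    start, end = day_bounds(day)
    return {"sensor": sensor_id, "startDate": {"$gte": start, "$lt": end}}


if __name__ == "__main__":
    print(sensor_day_filter(42, date(2017, 6, 1)))  # arbitrary example day
```

With pymongo, the index would be created once with `collection.create_index(INDEX_KEYS)` and a day read with `collection.find(sensor_day_filter(42, day)).sort("startDate", 1)`.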

What do you think?