I have now multiple times stumbled upon that fitting in python with scipy.curve_fit is somehow a lot harder than with other tools like e.g. ROOT (https://root.cern.ch/)

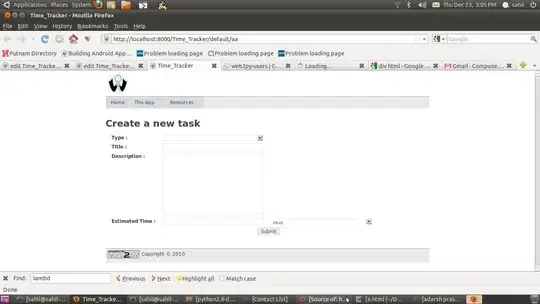

For example, when fitting a gaussian, with scipy I mostly get a straight line:

corresponding code:

def fit_gauss(y, x = None):

n = len(y) # the number of data

if x is None:

x = np.arange(0,n,1)

mean = y.mean()

sigma = y.std()

def gauss(x, a, x0, sigma):

return a * np.exp(-(x - x0) ** 2 / (2 * sigma ** 2))

popt, pcov = curve_fit(gauss, x, y, p0=[max(y), mean, sigma])

plt.plot(x, y, 'b+:', label='data')

plt.plot(x, gauss(x, *popt), 'ro:', label='fit')

plt.legend()

plt.title('Gauss fit for spot')

plt.xlabel('Pixel (px)')

plt.ylabel('Intensity (a.u.)')

plt.show()

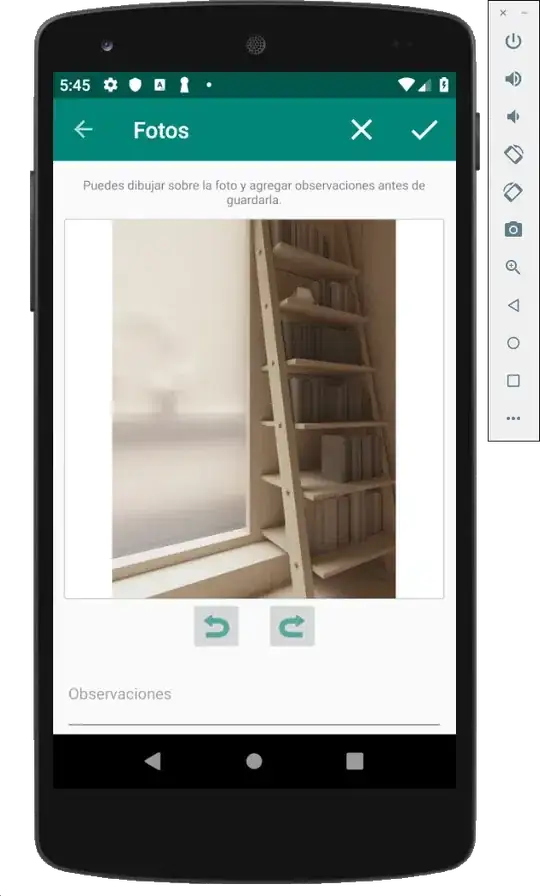

Using ROOT, I get a perfect fit, without even giving start parameters:

Again, the corresponding code:

import ROOT

import numpy as np

y = np.array([2., 2., 11., 0., 5., 7., 18., 12., 19., 20., 36., 11., 21., 8., 13., 14., 8., 3., 21., 0., 24., 0., 12., 0., 8., 11., 18., 0., 9., 21., 17., 21., 28., 36., 51., 36., 47., 69., 78., 73., 52., 81., 96., 71., 92., 70., 84.,72., 88., 82., 106., 101., 88., 74., 94., 80., 83., 70., 78., 85., 85., 56., 59., 56., 73., 33., 49., 50., 40., 22., 37., 26., 6., 11., 7., 26., 0., 3., 0., 0., 0., 0., 0., 3., 9., 0., 31., 0., 11., 0., 8., 0., 9., 18.,9., 14., 0., 0., 6., 0.])

x = np.arange(0,len(y),1)

#yerr= np.array([0.1,0.2,0.1,0.2,0.2])

graph = ROOT.TGraphErrors()

for i in range(len(y)):

graph.SetPoint(i, x[i], y[i])

#graph.SetPointError(i, yerr[i], yerr[i])

func = ROOT.TF1("Name", "gaus")

graph.Fit(func)

canvas = ROOT.TCanvas("name", "title", 1024, 768)

graph.GetXaxis().SetTitle("x") # set x-axis title

graph.GetYaxis().SetTitle("y") # set y-axis title

graph.Draw("AP")

Can someone explain to me, why the results differ that much? Is the implementation in scipy that bad / dependend on good start parameters? Is there any way around it? I need to process a lot of fits automatically, but don't have access to ROOT on the target computer, so it has to work with python only.

When taking the results from the ROOT fit and giving them to scipy as the start parameters, the fit works fine with scipy as well...