I am currently working on phoneme recognition with a CNN.

My dataset is labeled, but I am a bit unsure how to ensure that the length of the feature vector corresponds to the length of the audio file.

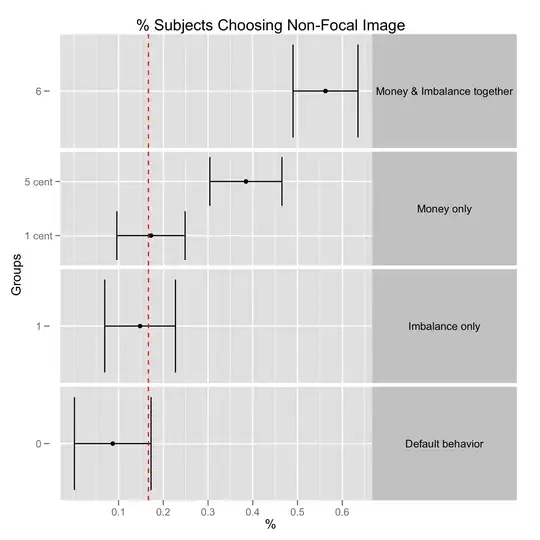

My input to the CNN is currently a spectrogram visualisation of log-mel filter energies, where the y-axis holds the different frequency bands and the x-axis holds the frames.

The example above is the sentence:

fmjc-b-an118 RUBOUT J L Y Z TWO

And phonemes:

RUBOUT: R AH B AW T

J: JH EY

L: EH L

Y: W AY

Z: Z IY

TWO: T UW

In total, that is 15 phonemes in 249 frames, or nearly 17 frames per phoneme.

For a second example, the spoken text/word is:

fbbh-b-an90 NO

NO: N OW

In total, that is 2 phonemes in 97 frames, or about 49 frames per phoneme.
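To make the mismatch concrete, here is a minimal sketch that recomputes the frames-per-phoneme ratio for the two examples above. The dictionary layout and the variable names are only my own illustration, not part of any toolkit.

```python
# Phoneme sequences and frame counts taken from the two examples above.
utterances = {
    "fmjc-b-an118": {
        "phonemes": "R AH B AW T JH EY EH L W AY Z IY T UW".split(),
        "frames": 249,
    },
    "fbbh-b-an90": {
        "phonemes": "N OW".split(),
        "frames": 97,
    },
}

# Average number of frames available per phoneme in each utterance.
frames_per_phoneme = {
    name: u["frames"] / len(u["phonemes"]) for name, u in utterances.items()
}

for name, ratio in frames_per_phoneme.items():
    print(f"{name}: {ratio:.1f} frames per phoneme")
```

The ratio differs by roughly a factor of three between the two files, so a fixed number of frames per phoneme cannot be assumed.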

So how can I create an input shape that captures the number of phonemes an audio file contains?

Edit:

The only way I can think of to recreate the input/output relationship is to provide an input shape of one frame. But will the system be able to detect the different phoneme classes in such a short time span, and still say "None" if no phoneme is present?

This would require the output to contain a class for each frame, which in turn requires me to know the duration of each phoneme, which should be possible with this.

But again, is it possible to detect a phoneme given only one frame?
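A rough sketch of what I mean by per-frame output, assuming I can get a start and end frame for each phoneme. The function `frame_labels` and the boundary values below are hypothetical; real boundaries would have to come from the alignment.

```python
def frame_labels(segments, n_frames, classes, blank="None"):
    """Expand (phoneme, start_frame, end_frame) segments into one class index per frame.

    Frames not covered by any segment get the index of the `blank` class.
    `end_frame` is exclusive.
    """
    index = {c: i for i, c in enumerate(classes)}
    labels = [index[blank]] * n_frames
    for phoneme, start, end in segments:
        for t in range(start, min(end, n_frames)):
            labels[t] = index[phoneme]
    return labels


classes = ["None", "N", "OW"]

# Made-up boundaries for the 97-frame "NO" utterance, for illustration only.
segments = [("N", 20, 45), ("OW", 45, 80)]

labels = frame_labels(segments, n_frames=97, classes=classes)
```

With this, the target has the same length as the x-axis of the spectrogram (97 labels for 97 frames), regardless of how many phonemes the file contains.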