If I want to make the following work on Windows, what is the correct locale and how do I detect that it is actually present: Does this code work universaly, or is it just my system?

4 Answers

Although there isn't good support for named locales, Visual Studio 2010 does include the UTF-8 conversion facets required by C++11: std::codecvt_utf8 for UCS2 and std::codecvt_utf8_utf16 for UTF-16:

#include <fstream>

#include <iostream>

#include <string>

#include <locale>

#include <codecvt>

void prepare_file()

{

// UTF-8 data

char utf8[] = {'\x7a', // latin small letter 'z' U+007a

'\xe6','\xb0','\xb4', // CJK ideograph "water" U+6c34

'\xf0','\x9d','\x84','\x8b'}; // musical sign segno U+1d10b

std::ofstream fout("text.txt");

fout.write(utf8, sizeof utf8);

}

void test_file_utf16()

{

std::wifstream fin("text.txt");

fin.imbue(std::locale(fin.getloc(), new std::codecvt_utf8_utf16<wchar_t>));

std::cout << "Read from file using UTF-8/UTF-16 codecvt\n";

for(wchar_t c; fin >> c; )

std::cout << std::hex << std::showbase << c << '\n';

}

void test_file_ucs2()

{

std::wifstream fin("text.txt");

fin.imbue(std::locale(fin.getloc(), new std::codecvt_utf8<wchar_t>));

std::cout << "Read from file using UTF-8/UCS2 codecvt\n";

for(wchar_t c; fin >> c; )

std::cout << std::hex << std::showbase << c << '\n';

}

int main()

{

prepare_file();

test_file_utf16();

test_file_ucs2();

}

this outputs, on my Visual Studio 2010 EE SP1

Read from file using UTF-8/UTF-16 codecvt

0x7a

0x6c34

0xd834

0xdd0b

Read from file using UTF-8/UCS2 codecvt

0x7a

0x6c34

0xd10b

Press any key to continue . . .

- 46,567

- 13

- 103

- 169

In the past UTF-8 (and some other code pages) wasn't allowed as the system locale because

Microsoft said that a UTF-8 locale might break some functions as they were written to assume multibyte encodings used no more than 2 bytes per character, thus code pages with more bytes such as UTF-8 (and also GB 18030, cp54936) could not be set as the locale.

https://en.wikipedia.org/wiki/Unicode_in_Microsoft_Windows#UTF-8

However Microsoft has gradually introduced UTF-8 locale support and started recommending the ANSI APIs (-A) again instead of the Unicode (-W) versions like before

Until recently, Windows has emphasized "Unicode"

-Wvariants over-AAPIs. However, recent releases have used the ANSI code page and-AAPIs as a means to introduce UTF-8 support to apps. If the ANSI code page is configured for UTF-8,-AAPIs operate in UTF-8. This model has the benefit of supporting existing code built with-AAPIs without any code changes.-A vs. -W APIs

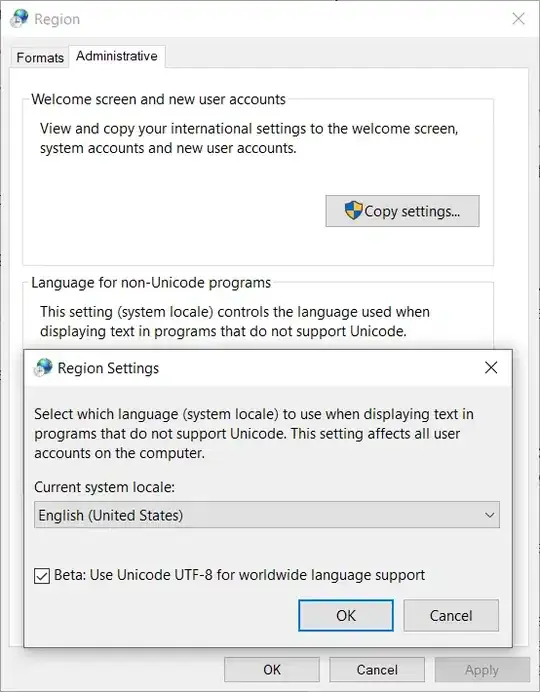

Firstly they added a "Beta: Use Unicode UTF-8 for worldwide language support" checkbox since Windows 10 insider build 17035 for setting the locale code page to UTF-8

To open that dialog box open start menu, type "region" and select Region settings > Additional date, time & regional settings > Change date, time, or number formats > Administrative

After enabling it you can call setlocal as normal:

Starting in Windows 10 build 17134 (April 2018 Update), the Universal C Runtime supports using a UTF-8 code page. This means that

charstrings passed to C runtime functions will expect strings in the UTF-8 encoding. To enable UTF-8 mode, use "UTF-8" as the code page when usingsetlocale. For example,setlocale(LC_ALL, ".utf8")will use the current default Windows ANSI code page (ACP) for the locale and UTF-8 for the code page.

You can also use this in older Windows versions

To use this feature on an OS prior to Windows 10, such as Windows 7, you must use app-local deployment or link statically using version 17134 of the Windows SDK or later. For Windows 10 operating systems prior to 17134, only static linking is supported.

Later in 2019 they added the ability for programs to use the UTF-8 locale without even setting the UTF-8 beta flag above. You can use the /execution-charset:utf-8 or /utf-8 options when compiling with MSVC or set the ActiveCodePage property in appxmanifest

- 37,963

- 15

- 156

- 475

-

A nice recap of the new feature! It's amazing it took them so long to say "let's just use utf-8 in the C strings". The `/utf-8` option seems to be unrelated with the checkbox though. It sets the execution and source charsets of the binary but I might be wrong. – vehsakul Sep 12 '20 at 23:47

Basically, you are out of luck: http://www.siao2.com/2007/01/03/1392379.aspx

- 1

- 1

- 24,346

- 3

- 50

- 88

-

Hmm, but how do Windows applications (I mean those written by Microsoft) work with UTF-8 if it's not a supported encoding? – Šimon Tóth Dec 02 '10 at 14:29

-

Windows internally uses UTF-16 for its API, so any UTF-8 text gets converted to UTF-16 before being passed to Windows API. Functions such as MultiByteToWideChar and WideCharToMultiByte recognize UTF-8 just fine. – Nemanja Trifunovic Dec 02 '10 at 15:11

-

-

1@Nemanja Trifunovic: that's precisely **not** the point of the blog. For every UTF-16 API like MessageBoxW, there's an "ANSI" variant MessageBoxA which will use the current "ANSI" code page to do the 8 bit to UTF-16 conversion. However, you can't use UTF-8 as the current "ANSI" code page. However, `MultiByteToWideChar` doesn't use the current ANSI code page. Its first argument is the code page to use, and _there_ UTF-8 (65001) is allowed. – MSalters Dec 02 '10 at 16:28

-

2@MSalters: The point of the blog is that code page 65001 cannot be an ACP, which means it cannot be used as a C++ standard library locale. The UTF-8 <> UTF-16 conversion I mention is not the point of the blog, but my answer to the question how to use UTF-8 on Windows. A shorter answer would be: don't use C++ Standard library for IO on Windows. – Nemanja Trifunovic Dec 02 '10 at 16:42

-

1@Let_Me_Be: Basically, this boils down to the fact that `wchar_t` on Windows is specifically defined as a 16-bit type. The C (and C++) standards require `wchar_t` to be able to hold *any* valid character from *all* supported encodings. But there is *no way* to encode all of the Unicode characters in 16 bits -- it just can't be done. Therefore, the Windows C and C++ libraries *do not* actually support Unicode of any kind. If you want to use Unicode on Windows, you have to go outside the C and C++ libraries. Yes, it's stupid, but what did you expect from Microsoft? :P – Dan Moulding Dec 02 '10 at 17:39

-

@Dan I actually don't care about the internal representation. This is a problem with external encoding. Correct reading and output. – Šimon Tóth Dec 02 '10 at 17:54

-

@Let_Me_Be: While you may not care about the internal representation, the C++ library most certainly does. In order for the C++ library to process UTF-8 encoded data, it must first *convert* the UTF-8 encoding to `wchar_t` (the specific encoding of which is implementation defined) which is used internally by the library. But because Windows's `whchar_t` is only 16-bits wide, the library cannot convert UTF-8 to `wchar_t` for it to use internally. It therefore cannot operate on UTF-8 encoded data, period. – Dan Moulding Dec 02 '10 at 18:25

-

@Dan What? So what you are claiming is that you can't convert UTF-8 into UTF-16? And how the heck does that work on Unix systems then (wchar_t is UTF-32 encoded on most Unix systems)? – Šimon Tóth Dec 02 '10 at 18:32

-

1@Let_Me_Be: You are confusing 16 bit `wchar_t` and UTF-16. They are not the same thing. UTF-8 and UTF-16 are two different ways of encoding all of the Unicode code points (of which there are *far* more than just 65535). You need at *least* 24 bits to represent all 1,000,000+ Unicode code points. The "16" in UTF-16 does *not* mean that all characters representable using only 16 bits (for instance some Unicode characters require 32 bits [two 16-bit *code units* ] when encoded using UTF-16). But the C++ library requires `wchar_t` to be able to uniquely represent *every* supported character. – Dan Moulding Dec 02 '10 at 18:43

-

(continued)... This is why nearly every other C and C++ library implementation uses a 32-bit `wchar_t` -- it must be *at least* 24-bits to be able to uniquely represent all Unicode characters. – Dan Moulding Dec 02 '10 at 18:49

-

@Dan No, I'm not confusing anything. wchar_t was USC-2 encoded on Windows 2000 and before and since then it is UTF-16 encoded. – Šimon Tóth Dec 02 '10 at 18:49

-

1@Dan http://stackoverflow.com/questions/4025458/how-does-microsoft-handle-the-fact-that-utf-16-is-a-variable-length-encoding-in-t – Šimon Tóth Dec 02 '10 at 18:50

-

@Let_Me_Be: Let me rephrase. You asked, "So what you are claiming is that you can't convert UTF-8 into UTF-16?" No, I'm claiming you can't convert UTF-8 to `wchar_t` on Windows. I suspect some confusion because of the way you phrased that question. – Dan Moulding Dec 02 '10 at 18:54

-

@Dan But converting UTF-8 to `wchar_t` on windows is a conversion from UTF-8 to UTF-16. So again, why the heck is this so impossible? And why is it possible with all other of the million encodings supported by Windows? – Šimon Tóth Dec 02 '10 at 18:56

-

1@Let_Me_Be: No, conversion to `wchar_t` is *not* the same as conversion to UTF-16. That's precisely what I'm trying to explain (although, probably not as well as I'd like). Some UTF-16 characters are going to need more than 16 bits to be able to represent them. `wchar_t`, being only 16-bits on Windows, can therefore not represent those characters. Again, the C standard requires that `wchar_t` be able to uniquely represent *every* supported character. – Dan Moulding Dec 02 '10 at 19:05

-

@Dan And I will again add that, yes it is conversion to UTF-16 since Microsoft is indeed using variable length encoding. See the link I posted. – Šimon Tóth Dec 02 '10 at 19:11

-

@Dan: Can you point to the exact place in the C standard where it says a wchar_t needs to be able to uniquely represent a single character? I know for a fact that the current C++ standard makes no such requirement. In fact, Let_Me_Be is correct in this regard: on Windows, wchar_t is de facto the type for storing UTF-16 code units (I am not saying characters), and of course it is possible to convert from UTF-16 <> UTF-8. What Windows does not support at all is UTF-32. – Nemanja Trifunovic Dec 02 '10 at 19:12

-

@Let_Me_Be: The problem is that variable length encoding is not allowed with `wchar_t`. There must be a *one-to-one* mapping between characters and `wchar_t` -- this is required by the C standard. If, on Windows, you give a `wchar_t` string to `wcslen`, and some of the characters in the string have been encoded using "variable length encoding" such as UTF-16, then `wcslen` is going to give you the wrong result. Again, this is because the standard requires that a single `wchar_t` be able to *uniquely represent* any character in all supported character sets. See 7.17(2) of the C99 standard. – Dan Moulding Dec 02 '10 at 22:35

-

1@Nemanja: 7.17(2) of C99 states, "[wchar_t] is an integer type whose range of values can represent distinct codes for all members of the largest extended character set specified among the supported locales". Therefore, if UTF-16 (or any Unicode encoding, for that matter) is supported by one of your locales, then `wchar_t` must be capable of distinctly representing over 1 million characters -- it cannot be only 16-bits wide. It is precisely because `wchar_t` is not wide enough on Windows that you cannot use a UTF-8 or UTF-16 locale with `ios::imbue`. – Dan Moulding Dec 02 '10 at 22:48

-

@Dan That doesn't change the fact that MSVC is using variable length encoding. Plus this is C++ not C (and even further MSVC doesn't and will not support C99). – Šimon Tóth Dec 02 '10 at 22:55

-

@Nemanja: I will add that using an array or vector of 16-bit `wchar_t` to contain UTF-16 encoded data is really no different from simply using a `char` array or vector to do the same. And yes, this can be done and is a valid way of dealing with UTF-8 encoded data. The Unix `cat` utility, for example, doesn't need to be rewritten in order to support UTF-8, even though it only uses `char` types and the corresponding functions. It works because `cat` doesn't need to analyze or manipulate the data; it just reads it in and spits it back out. Read http://goo.gl/wyr84 -- it's long but worth it. – Dan Moulding Dec 02 '10 at 22:59

-

2@Let_Me_Be: You are seriously confusing the container that holds data with the encoding of the data that it contains. You can store UTF-8 encoded data in an array of `char`, if you want. You can store UTF-16 in an array of `char`, too. But this doesn't mean that `char` is "variable length encoded". Same with `wchar_t`. It is just a container. And it is meant to contain exactly one fixed-size character per `wchar_t` (as specified in the standard). You can store variable length characters in it if you want, but it doesn't make `wchar_t` itself "variable length". – Dan Moulding Dec 02 '10 at 23:03

-

@Let_Me_Be: One last thing: I quoted the C99 standard because that's the version of the document that I happen to have on my computer. I looked up the C90 version and `wchar_t` is defined identically there. – Dan Moulding Dec 02 '10 at 23:17

-

@Dan MSVC is using UTF-16 encoding that means that that one `wchar_t` doesn't always represent one character, because for surrogate pairs, one character is represented by two `wchar_t`. It does break the C standard and the C library part of the C++ standard (C++ string containers are actually defined so loosely, that this is actually allowed). And again, whatever the standard says doesn't change the fact that this is how MSVC behaves (and will behave in the future, since this is something, that can't change easily). – Šimon Tóth Dec 03 '10 at 00:10

-

@Let_Me_Be: Just because you can store UTF-16 encoded data in a `wchar_t` doesn't mean that it will be interpreted as UTF-16 by the C or C++ libraries. And it in fact *won't*. This is why `wstring::length` will not correctly report the number of *characters* in the string, as it should, if your string is UTF-16 encoded. Or a simpler example: how could `iswalpha` (which takes a single `wchar_t`) possibly work if the character is variable-length encoded? It *can't*. – Dan Moulding Dec 03 '10 at 01:21

-

@Dan You still didn't read the question I linked, therefore this discussion is pointless. I will just again repeat that Microsoft is using UTF-16 variable length encoding for `wchar_t`. That's a fact. – Šimon Tóth Dec 03 '10 at 08:40

-

2@Let_Me_Be: I did read your linked question, but it seems that you didn't understand the correct answer there. Some (or even all) of the *Windows APIs* may interpret `wchar_t` strings as UTF-16 encoded data but *the C and C++ libraries* **do not**. If they did, then you could use UTF-8 as a locale for the C and C++ libraries. But, you can't. And that is the answer to your question here ;) – Dan Moulding Dec 03 '10 at 14:52

-

Again, the C and C++ libraries *cannot* interpret `wchar_t` as UTF-16 on Windows because **a)** it would violate the standard and **b)** some functions simply couldn't work (like `iswalpha`). On Windows, the C and C++ libraries interpret `wchar_t` as (old) UCS-2, not UTF-16. – Dan Moulding Dec 03 '10 at 14:55

-

@Dan So you are claiming that if you read (using wcin) into a `wchar_t`/`wstring` (plain C++ stuff) on Windows, it will contain UCS-2 encoded data? – Šimon Tóth Dec 04 '10 at 10:08

-

@Dan Just to make sure you are aware of it, some functions like `iswalpha` simply don't work on Windows. – Šimon Tóth Dec 04 '10 at 10:10

-

from https://blogs.msdn.microsoft.com/qingsongyao/2009/04/10/sql-server-and-utf-8-encoding-1-true-or-false/ About SQL Server, but things are near: SQL Server treats a UTF-16 supplementary character as two characters. The Len function return 2 instead of 1 for such input. SQL Server can sort/compare any defined Unicode characters in UTF-16 encoding. Note, not all code points are map to valid Unicode character. For example, The Unicode Standard, Version 5.1 defines code points for around 10,000 characters – Ivan Jun 24 '18 at 13:08

Per MSDN, it would be named "english_us.65001". But code page 65001 is somewhat flaky on Windows.

- 173,980

- 10

- 155

- 350

-

2

-

@Let_Me_Be: I can't summarize it better than http://www.google.com/search?q=site%3Ablogs.msdn.com+65001 – MSalters Dec 02 '10 at 12:32

-

1@MSalters I'm sorry but I just can't find anything both current and detailed enough. What I understand from the short blog posts I read is that Windows doesn't have UTF-8 support at all (which just doesn't make any sense). – Šimon Tóth Dec 02 '10 at 12:41

-

@Let_Me_Be: It doesn't have implicit support. You can't call `MessageBoxA("Hellö")`. However, it has explicit support: `MultiByteToWideChar(CP_UTF8, MB_ERR_INVALID_CHARS, utf8input.c_str(), ...` – MSalters Dec 02 '10 at 16:31

-

@MSalters OK, so since my code is explicitly converting into a UTF-8 locale, it should work (damn, I really need to install Windows somewhere so I can test this). – Šimon Tóth Dec 02 '10 at 16:37

-

7@Let_Me_Be: What all these answers try to say is that there is no utf-8 locale on windows. – Doub Mar 14 '11 at 13:58

-

1@Doub there wasn't, but now there is and MS actually recommended to use the UTF-8 locale for portability – phuclv Aug 18 '20 at 01:39