I am benchmarking vectorization on macOS with the following Intel Core i7 processor:

$ sysctl -n machdep.cpu.brand_string

Intel(R) Core(TM) i7-4960HQ CPU @ 2.60GHz

My MacBook Pro is a mid-2014 model.

I tried different compiler flags for vectorization: the three that interest me are SSE, AVX and AVX2.

For my benchmark, I add the elements of two arrays pairwise and store each sum in a third array.

Note that these arrays hold values of type double.
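For reference, the scalar operation being vectorized could be sketched as follows. This is only an illustration: the name addition_tab_scalar is hypothetical and the function is not taken from the benchmark source.

```c
#include <stddef.h>

/* Scalar reference: c[i] = a[i] + b[i] for every i in [0, size).
   addition_tab_scalar is a hypothetical name used for illustration. */
void addition_tab_scalar(int size, const double *a, const double *b, double *c)
{
    for (int i = 0; i < size; i++) {
        c[i] = a[i] + b[i];
    }
}
```

Note that GCC at -O3 may auto-vectorize a loop like this, so a baseline that is meant to stay scalar would presumably need an option such as -fno-tree-vectorize.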

Here are the functions used in my benchmark code:

1) First, with SSE vectorization:

#ifdef SSE
#include <x86intrin.h>

#define ALIGN 16

void addition_tab(int size, double *a, double *b, double *c)
{
    int i;

    // Main loop: 2 doubles per iteration (size is assumed to be a multiple of 2)
    for (i=size-1; i>=0; i-=2)
    {
        // Intrinsic SSE syntax (aligned accesses: a, b and c must be 16-byte aligned)
        const __m128d x = _mm_load_pd(a); // Load two x elements
        const __m128d y = _mm_load_pd(b); // Load two y elements
        const __m128d sum = _mm_add_pd(x, y); // Compute two sum elements
        _mm_store_pd(c, sum); // Store two sum elements

        // Increment pointers by 2 since SSE vectorizes on 128 bits = 16 bytes = 2*sizeof(double)
        a += 2;
        b += 2;
        c += 2;
    }
}
#endif

2) Second, with AVX256 vectorization:

#ifdef AVX256
#include <immintrin.h>

#define ALIGN 32

void addition_tab(int size, double *a, double *b, double *c)
{
    int i;

    // Main loop: 4 doubles per iteration (size is assumed to be a multiple of 4)
    for (i=size-1; i>=0; i-=4)
    {
        // Intrinsic AVX syntax (aligned accesses: a, b and c must be 32-byte aligned)
        const __m256d x = _mm256_load_pd(a); // Load four x elements
        const __m256d y = _mm256_load_pd(b); // Load four y elements
        const __m256d sum = _mm256_add_pd(x, y); // Compute four sum elements
        _mm256_store_pd(c, sum); // Store four sum elements

        // Increment pointers by 4 since AVX256 vectorizes on 256 bits = 32 bytes = 4*sizeof(double)
        a += 4;
        b += 4;
        c += 4;
    }
}
#endif
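Both versions use aligned loads and stores, so the three arrays have to start on an ALIGN-byte boundary. A minimal sketch of such an allocation is given below, assuming posix_memalign is available on the platform. The helpers alloc_aligned_doubles and is_aligned are hypothetical names, not the code from my benchmark.

```c
#include <stdlib.h>
#include <stdint.h>

#define ALIGN 32  /* 16 for the SSE version, 32 for the AVX256 version */

/* Hypothetical helper: allocate n doubles on an ALIGN-byte boundary.
   Returns NULL on failure; the memory is released with free(). */
double *alloc_aligned_doubles(size_t n)
{
    void *p = NULL;
    if (posix_memalign(&p, ALIGN, n * sizeof(double)) != 0) {
        return NULL;
    }
    return (double *)p;
}

/* Returns 1 if ptr starts on an ALIGN-byte boundary, 0 otherwise. */
int is_aligned(const void *ptr)
{
    return ((uintptr_t)ptr % ALIGN) == 0;
}
```

A pointer obtained this way can be passed as a, b or c to addition_tab; a plain malloc result is not guaranteed to satisfy the 32-byte requirement of _mm256_load_pd.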

For SSE vectorization, I expect a speedup of around 2 because the data is aligned on 128 bits = 16 bytes = 2 * sizeof(double), so each instruction processes 2 doubles.

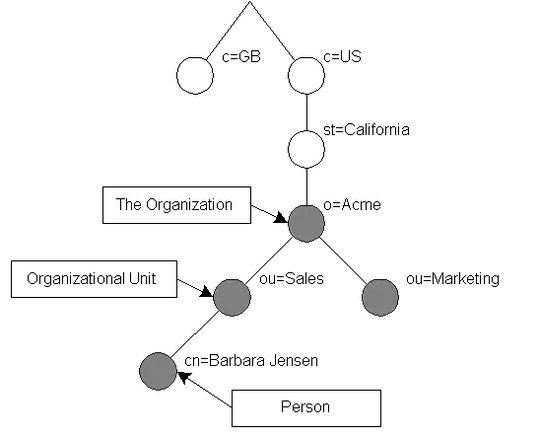

What I get in results for SSE vectorization is represented on the following figure :

So I think these results are valid, since the speedup is around a factor of 2.

Now for AVX256, I get the following figure:

For AVX256 vectorization, I expect a speedup of around 4 because the data is aligned on 256 bits = 32 bytes = 4 * sizeof(double), so each instruction processes 4 doubles.

But as you can see, I still get a speedup factor of 2, not 4.

I don't understand why I get the same speedup with SSE and AVX vectorization.

Does it come from the compilation flags, from my processor model, or from something else? I don't know.

Here are the compilation command lines I used for all of the results above:

For SSE:

gcc-mp-4.9 -DSSE -O3 -msse main_benchmark.c -o vectorizedExe

For AVX256:

gcc-mp-4.9 -DAVX256 -O3 -Wa,-q -mavx main_benchmark.c -o vectorizedExe

Moreover, with my processor model, could I use AVX512 vectorization (once the issue in this question is solved)?

Thanks for your help

UPDATE 1

I tried the different options suggested by @Mischa but still can't get a speedup factor of 4 with the AVX flags and options. You can take a look at my C source at http://example.com/test_vectorization/main_benchmark.c.txt (with a .txt extension so that it can be viewed directly in the browser); the shell script for benchmarking is at http://example.com/test_vectorization/run_benchmark .

As @Mischa suggested, I tried the following command line for compilation:

$GCC -O3 -Wa,-q -mavx -fprefetch-loop-arrays main_benchmark.c -o vectorizedExe

but the generated code contains no AVX instructions.
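One way to check whether a given set of flags actually enables AVX in the compiler is to test the predefined macros __SSE2__, __AVX__ and __AVX2__, which GCC and Clang define when the corresponding instruction set is enabled. The sketch below is only an illustration with hypothetical function names. It reports what the compiler is allowed to emit for the translation unit, not which instructions end up in the binary.

```c
#include <stdio.h>

/* Each function returns 1 if the corresponding instruction set was
   enabled for this translation unit at compile time, 0 otherwise.
   The function names are hypothetical. */
int compiled_with_sse2(void)
{
#ifdef __SSE2__
    return 1;
#else
    return 0;
#endif
}

int compiled_with_avx(void)
{
#ifdef __AVX__
    return 1;
#else
    return 0;
#endif
}

int compiled_with_avx2(void)
{
#ifdef __AVX2__
    return 1;
#else
    return 0;
#endif
}

/* Print one summary line of the compile-time settings. */
void print_simd_flags(void)
{
    printf("SSE2: %d, AVX: %d, AVX2: %d\n",
           compiled_with_sse2(), compiled_with_avx(), compiled_with_avx2());
}
```

Calling print_simd_flags() from main in a file compiled with the same flags as the benchmark shows whether -mavx reached the compiler at all.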

If you could take a look at these files, that would be great. Thanks.