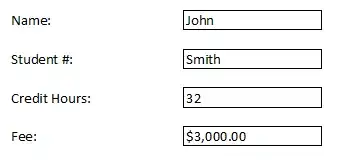

Consider I have the following a stabilized video frame where stabilization is done by only rotation and translation (no scaling):

As seen in the image, the right-hand side of the frame is a mirror reflection of the adjacent pixels, i.e., the black region left after rotation is filled by mirroring. I added a red line to mark the boundary more clearly.
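A short derivation of why the mirror boundary should carry the rotation angle, assuming (not stated in the question) that the fill reflects source coordinates across the original frame's right edge $x = x_e$, and that an output pixel $\mathbf{p}$ samples the source at $\mathbf{s} = R_\theta^{-1}(\mathbf{p} - \mathbf{t})$ for rotation $R_\theta$ and translation $\mathbf{t}$. Since $R_\theta^{-1} = R_\theta^\top$, a pixel past the edge shows the same content as the valid pixel $\mathbf{q}$ obtained by reflecting $\mathbf{p}$ across a straight line whose unit normal is the rotated $x$ axis:

$$
\mathbf{n} = R_\theta \mathbf{e}_x = (\cos\theta, \sin\theta)^\top, \qquad
\rho = \mathbf{n}^\top \mathbf{t} + x_e, \qquad
s_x - x_e = \mathbf{n}^\top \mathbf{p} - \rho
$$

$$
\mathbf{q} = R_\theta\big(\mathbf{s} - 2\,(s_x - x_e)\,\mathbf{e}_x\big) + \mathbf{t}
= \mathbf{p} - 2\,(\mathbf{n}^\top \mathbf{p} - \rho)\,\mathbf{n}
$$

So under these assumptions the red line is $\mathbf{n}^\top \mathbf{p} = \rho$, and its normal angle equals $\theta$ up to the sign convention of the (y-down) image axes.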

I'd like to find the rotation angle, which I will use later on. I could do this with SURF or SIFT features; however, in the real-world scenario I won't have the original frame.

I could probably find the angle by brute force, but I wonder whether there is a better, more elegant solution. Note that the intensity values in the mirrored part are not exactly the same as in the original part. I've checked some values: for example, the upper-right pixel of the V key on the keyboard is [51 49 47] in the original part but [50 50 47] in the mirrored copy, so corresponding pixels are not guaranteed to have identical RGB values.
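For reference, the brute-force route might look roughly like this in Python with NumPy. This is a minimal sketch under assumptions that are not in the question: the frame has already been converted to a single grayscale array, the mirror boundary is a straight line `n . (x, y) = rho` with unit normal `n = (cos theta, sin theta)`, the mirrored strip lies on the positive side of that line, and nearest-neighbour sampling is good enough. `reflect_score` and `find_mirror_line` are hypothetical helper names. Because corresponding pixels only approximately match (as with the [51 49 47] vs [50 50 47] example), each candidate line is scored by the mean absolute difference between pixels in a narrow band past the line and their reflections, rather than by exact equality.

```python
import numpy as np


def reflect_score(gray, theta, rho, band=10):
    """Mean absolute difference between pixels just past a candidate line
    and their mirror images across it (lower = more mirror-like).

    Assumed line model: n . (x, y) = rho, with n = (cos theta, sin theta).
    """
    h, w = gray.shape
    ys, xs = np.mgrid[0:h, 0:w]
    nx, ny = np.cos(theta), np.sin(theta)
    d = nx * xs + ny * ys - rho              # signed distance to the line
    mask = (d > 0.5) & (d < band)            # assumed mirrored side, narrow band
    if mask.sum() < 20:
        return np.inf
    # Reflect each band pixel across the line: p' = p - 2 d n (nearest neighbour).
    rx = np.rint(xs[mask] - 2 * d[mask] * nx).astype(int)
    ry = np.rint(ys[mask] - 2 * d[mask] * ny).astype(int)
    inside = (rx >= 0) & (rx < w) & (ry >= 0) & (ry < h)
    if inside.sum() < 20:
        return np.inf
    a = gray[mask][inside].astype(float)
    b = gray[ry[inside], rx[inside]].astype(float)
    return float(np.mean(np.abs(a - b)))  # tolerant of small intensity changes


def find_mirror_line(gray, angles_deg, rhos, band=10):
    """Exhaustive grid search over line angle (degrees) and offset (pixels).

    Returns (best_score, best_angle_deg, best_rho).
    """
    best = (np.inf, None, None)
    for ang in angles_deg:
        theta = np.deg2rad(ang)
        for rho in rhos:
            score = reflect_score(gray, theta, rho, band)
            if score < best[0]:
                best = (score, ang, rho)
    return best
```

If the fill really reflects across the rotated right edge of the original frame, the best normal angle should correspond to the rotation angle, with its sign depending on the y-down image convention. A coarse grid followed by a finer search around the best candidate keeps the brute force cheap.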

I'll implement this in MATLAB or Python, and the video stabilization is done with ffmpeg.

EDIT: I only have the stabilized video; I don't have access to the original video or to any files produced by ffmpeg.

Any help or suggestions are appreciated.