Here's a complete example that should get you on the right track. For simplicity, let us assume that your goal is to classify the pixels of the three-band image below into three categories, namely building, vegetation and water. Those categories will be displayed in red, green and blue, respectively.

We start off by reading the image and defining some variables that will be used later on.

import numpy as np

from skimage import io

img = io.imread('https://i.stack.imgur.com/TFOv7.png')

rows, cols, bands = img.shape

classes = {'building': 0, 'vegetation': 1, 'water': 2}

n_classes = len(classes)

palette = np.uint8([[255, 0, 0], [0, 255, 0], [0, 0, 255]])
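To see how the palette will be used later on, here is a minimal sketch of the lookup on a tiny label map. The `labels` array is made up for illustration; only `palette` comes from the code above.

```python
import numpy as np

# Same palette as above: row i holds the RGB colour of class i.
palette = np.uint8([[255, 0, 0], [0, 255, 0], [0, 0, 255]])

# Hypothetical 2x3 label map (0 = building, 1 = vegetation, 2 = water).
labels = np.array([[0, 1, 2],
                   [2, 2, 1]])

# Integer-array indexing replaces every label with its palette row,
# turning a (rows, cols) label map into a (rows, cols, 3) RGB image.
rgb = palette[labels]

print(rgb.shape)            # (2, 3, 3)
print(rgb[0, 1].tolist())   # [0, 255, 0]
```

This is why `palette[unsupervised]` and `palette[supervised]` can be passed straight to an image viewer.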

Unsupervised classification

If you don't wish to label any pixels manually, you need to detect the underlying structure of your data, i.e. you have to split the image pixels into n_classes partitions, for example through k-means clustering:

from sklearn.cluster import KMeans

X = img.reshape(rows*cols, bands)

kmeans = KMeans(n_clusters=n_classes, random_state=3).fit(X)

unsupervised = kmeans.labels_.reshape(rows, cols)

io.imshow(palette[unsupervised])
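Keep in mind that the cluster indices returned by k-means are arbitrary, so cluster 0 is not necessarily "building" and the palette colours may come out swapped. One possible way to align them, sketched below on synthetic data, is to match each cluster centre to the nearest of a set of reference colours. The reference colours, `X_demo` and `remap` are assumptions introduced for this demo; for a real image you would pick the references after inspecting `kmeans.cluster_centers_`.

```python
import numpy as np
from sklearn.cluster import KMeans

rng = np.random.default_rng(0)

# Made-up reference colours, one per class (not taken from the real image).
reference = np.array([[200.0, 60.0, 60.0],    # class 0
                      [60.0, 200.0, 60.0],    # class 1
                      [60.0, 60.0, 200.0]])   # class 2

# Synthetic stand-in for X: 100 noisy "pixels" around each reference colour.
true_labels = np.repeat(np.arange(3), 100)
X_demo = reference[true_labels] + rng.normal(scale=5.0, size=(300, 3))

kmeans = KMeans(n_clusters=3, n_init=10, random_state=3).fit(X_demo)

# dist[k, c] = Euclidean distance from cluster centre k to reference colour c.
diff = kmeans.cluster_centers_[:, None, :] - reference[None, :, :]
dist = np.linalg.norm(diff, axis=2)

# remap[k] is the class whose reference colour is closest to cluster k.
remap = dist.argmin(axis=1)
relabeled = remap[kmeans.labels_]
```

With well-separated clusters `remap` is a permutation of the class indices; if two clusters map to the same class, the references need revisiting.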

Supervised classification

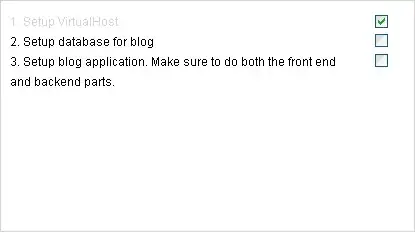

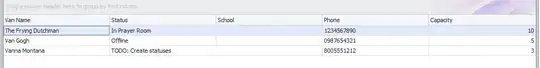

Alternatively, you could assign labels to some pixels of known class (the set of labeled pixels is usually referred to as ground truth). In this toy example the ground truth is made up of three hardcoded square regions of 20×20 pixels shown in the following figure:

# n_classes acts as the "unlabeled" marker; valid labels are 0..n_classes-1

supervised = n_classes*np.ones(shape=(rows, cols), dtype=int)

supervised[200:220, 150:170] = classes['building']

supervised[40:60, 40:60] = classes['vegetation']

supervised[100:120, 200:220] = classes['water']
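As a quick sanity check, you can count how many pixels ended up in each class and how many remain unlabeled. The sketch below rebuilds the mask on a hypothetical 300×400 image (the real `rows` and `cols` come from `img.shape`), so only the per-class counts carry over to the actual example.

```python
import numpy as np

rows, cols = 300, 400   # hypothetical image size, for illustration only
classes = {'building': 0, 'vegetation': 1, 'water': 2}
n_classes = len(classes)

# n_classes marks "unlabeled"; valid labels are 0..n_classes-1.
supervised = np.full((rows, cols), n_classes, dtype=int)
supervised[200:220, 150:170] = classes['building']
supervised[40:60, 40:60] = classes['vegetation']
supervised[100:120, 200:220] = classes['water']

# counts[i] = number of pixels carrying label i (last entry: unlabeled).
counts = np.bincount(supervised.ravel(), minlength=n_classes + 1)
n_train = counts[:n_classes].sum()
n_test = counts[n_classes]

print(counts.tolist())
print(n_train / n_test)   # train/test ratio
```

Each 20×20 square contributes 400 labeled pixels, so the training set has 1200 pixels regardless of the image size.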

The pixels of the ground truth (training set) are used to fit a support vector machine.

y = supervised.ravel()

train = np.flatnonzero(supervised < n_classes)

test = np.flatnonzero(supervised == n_classes)

from sklearn.svm import SVC

clf = SVC(gamma='auto')

clf.fit(X[train], y[train])

After the training stage, the classifier assigns class labels to the remaining pixels (test set). The classification results look like this:

y[test] = clf.predict(X[test])

supervised = y.reshape(rows, cols)

io.imshow(palette[supervised])
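The SVM in this example keeps scikit-learn's default C and uses gamma='auto', i.e. 1/n_features, which may be a poor fit for raw 0 to 255 intensities. A cross-validated grid search is one common way to pick better values. The sketch below runs on synthetic data standing in for X[train] and y[train]; the parameter grid is illustrative, not a tuned recommendation.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

rng = np.random.default_rng(0)

# Synthetic stand-in for X[train], y[train]: 40 noisy samples per class.
centres = np.array([[200.0, 60.0, 60.0],
                    [60.0, 200.0, 60.0],
                    [60.0, 60.0, 200.0]])
y_train = np.repeat(np.arange(3), 40)
X_train = centres[y_train] + rng.normal(scale=20.0, size=(120, 3))

# Illustrative candidate values only.
param_grid = {'C': [0.1, 1, 10, 100],
              'gamma': ['scale', 0.001, 0.01]}

# 3-fold cross-validation over every (C, gamma) combination.
search = GridSearchCV(SVC(kernel='rbf'), param_grid, cv=3)
search.fit(X_train, y_train)

print(search.best_params_)
clf = search.best_estimator_   # already refit on the whole training set
```

In the real example you would call `search.fit(X[train], y[train])` and then predict with `search.best_estimator_` instead of the hand-configured `clf`.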

Final remarks

In this example, the results seem to suggest that unsupervised classification is more accurate than its supervised counterpart. In general, however, supervised classification outperforms unsupervised classification. It is important to note that in the analyzed example accuracy could be dramatically improved by adjusting the parameters of the SVM classifier. Further improvement could be achieved by enlarging and refining the ground truth, since the train/test ratio is very small and the red and green patches actually contain pixels of different classes. Finally, one can reasonably expect that using more sophisticated features such as ratios or indices computed from the intensity levels (for instance NDVI) would boost performance.
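For reference, NDVI is defined as (NIR - Red) / (NIR + Red), so it needs a near-infrared band, which the RGB image used in this example does not have. The sketch below therefore works on small made-up `nir` and `red` arrays and shows how such an index could be appended to the feature matrix as an extra column; `ndvi` and `X_demo` are hypothetical names.

```python
import numpy as np

def ndvi(nir, red):
    """Return (nir - red) / (nir + red), with 0 where the sum is zero."""
    nir = nir.astype(float)   # avoid uint8 overflow and wrap-around
    red = red.astype(float)
    denom = nir + red
    out = np.zeros_like(denom)
    np.divide(nir - red, denom, out=out, where=denom != 0)
    return out

# Made-up 2x2 bands, for illustration only.
nir = np.array([[200, 50], [0, 120]], dtype=np.uint8)
red = np.array([[50, 50], [0, 180]], dtype=np.uint8)

index = ndvi(nir, red)

# Hypothetical per-pixel feature matrix with three existing columns.
X_demo = np.zeros((nir.size, 3))

# Append the index as a fourth feature, one value per pixel.
X_extended = np.hstack([X_demo, index.reshape(-1, 1)])
print(X_extended.shape)   # (4, 4)
```

Casting to float before subtracting matters: with uint8 inputs, `nir - red` would wrap around whenever red exceeds NIR.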