I have a Spark 2.0.1 cluster with 1 master (slaver1) and 2 workers (slaver2, slaver3); every machine has 2GB of RAM. When I run the command

```shell
./bin/spark-shell --master spark://slaver1:7077 --executor-memory 500m
```

and then check the executor memory in the web UI (slaver1:4040/executors/), I find that it is only 110MB, not the 500MB I requested.


2 Answers

The memory you are looking at is storage memory. Spark divides its memory (called Spark Memory) into 2 regions: the first is storage memory and the second is execution memory.

The total Spark Memory can be calculated with this formula:

`(Java Heap - Reserved Memory) * spark.memory.fraction`
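Since the formula is plain arithmetic, here is a minimal Python sketch for checking it. It assumes heap sizes in MB and the fixed 300MB reserved memory Spark uses since 1.6; `spark_memory_mb` is a hypothetical helper for illustration, not a Spark API.

```python
RESERVED_MB = 300  # fixed reserved memory (Spark 1.6 and later)


def spark_memory_mb(java_heap_mb: float, memory_fraction: float) -> float:
    """(Java Heap - Reserved Memory) * spark.memory.fraction, in MB."""
    usable_mb = java_heap_mb - RESERVED_MB
    return usable_mb * memory_fraction


# 500MB executor with the Spark 2.x default fraction (0.6)
print(spark_memory_mb(500, 0.6))
# Same executor with the Spark 1.6 default fraction (0.75)
print(spark_memory_mb(500, 0.75))
```

For a 500MB heap this gives 120MB with a fraction of 0.6 and 150MB with 0.75.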

To give you an overview: the storage memory pool is used both for storing Apache Spark cached data and as temporary space for serialized data being "unrolled". All "broadcast" variables are also stored there as cached blocks.

If you want to check the total memory provided, go to the Spark master UI at Spark-Master-Ip:8080 (the default port). Near the top you will find a section called Memory, which shows the total memory used by Spark.

Thanks


This formula? Java Heap (500MB) * spark.memory.fraction (default 0.6) * spark.memory.storageFraction (default 0.5) = 150MB – Weiguang Chen Apr 12 '17 at 02:54


Or this formula? [Java Heap (500MB) - Reserved Memory (300MB)] * spark.memory.fraction (default 0.6) = 120MB ≈ 110MB in my question – Weiguang Chen Apr 12 '17 at 03:06


This formula is from Spark version 1.6; it might have changed a little bit since. – Akash Sethi Apr 12 '17 at 04:55


Your answer is very helpful to me

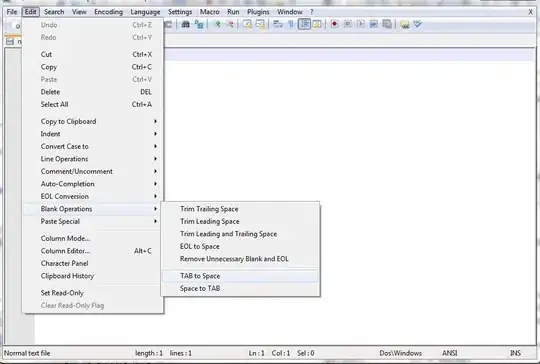

Since Spark 1.6, memory is divided as shown in the picture above.

There is no hard boundary between execution and storage memory: when storage needs more memory, it borrows from execution memory, and vice versa. The combined execution and storage memory is given by `(ExecutorMemory - 300MB) * spark.memory.fraction`.
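To make the borrowing concrete, here is a toy Python model. It is a simplified sketch, not Spark's actual memory manager: the class `ToyUnifiedPool` and its methods are hypothetical, and it assumes the behaviour of the unified memory model as I understand it. Storage can use free execution memory but never evicts execution, while execution can evict cached blocks only until storage shrinks back to `spark.memory.storageFraction` (default 0.5) of the region.

```python
class ToyUnifiedPool:
    """Toy model of a shared execution/storage region (sizes in MB).

    Simplified illustration only, not Spark's real memory manager.
    """

    def __init__(self, total_mb: float, storage_fraction: float = 0.5):
        self.total = total_mb
        # Cached data below this size is never evicted to make room for execution.
        self.protected_storage = total_mb * storage_fraction
        self.storage_used = 0.0
        self.execution_used = 0.0

    def free(self) -> float:
        return self.total - self.storage_used - self.execution_used

    def acquire_storage(self, mb: float) -> bool:
        """Storage may take any free memory (including execution's unused share)
        but never evicts memory held by execution."""
        if mb <= self.free():
            self.storage_used += mb
            return True
        return False

    def acquire_execution(self, mb: float) -> bool:
        """Execution takes free memory first, then evicts cached blocks,
        but only down to the protected storage size."""
        evictable = max(0.0, self.storage_used - self.protected_storage)
        if mb > self.free() + evictable:
            return False
        shortfall = max(0.0, mb - self.free())
        self.storage_used -= shortfall  # evict just enough cached data
        self.execution_used += mb
        return True


# Example: 120MB shared region, e.g. (500MB - 300MB) * 0.6
pool = ToyUnifiedPool(total_mb=120)
pool.acquire_storage(100)   # storage borrows beyond its 60MB share
pool.acquire_execution(50)  # execution evicts 30MB of cached data
print(pool.storage_used, pool.execution_used)  # 70.0 50.0
```

In this model storage can grow into idle execution memory, but once execution needs that space back, cached data is evicted, never below the protected share.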

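For your 500MB executor this is `(500MB - 300MB) * 0.75 = 150MB`, where 0.75 is the default `spark.memory.fraction` in Spark 1.6. Spark 2.0 lowered the default to 0.6, which gives `(500MB - 300MB) * 0.6 = 120MB`. The executor memory that is actually allocated has a 3 to 5% error: the heap size the JVM reports is usually a little smaller than the requested executor memory, so the value in the UI comes out lower than this calculation.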
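The gap between the calculated value and the roughly 110MB in the question can be illustrated with a few lines of Python. This is a sketch under an explicit assumption: the 483MB "reported heap" is an illustrative number (about 3.4% below the requested 500MB, inside the 3 to 5% range), not a measurement from the asker's cluster. As far as I know, Spark reads the heap size from the JVM (`Runtime.getRuntime.maxMemory`), which is usually somewhat smaller than `--executor-memory`.

```python
RESERVED_MB = 300        # reserved memory
REQUESTED_HEAP_MB = 500  # --executor-memory 500m
# ASSUMPTION: illustrative max heap reported by the JVM (~3.4% below 500MB).
REPORTED_HEAP_MB = 483

# fraction -> (value from the requested heap, value from the reported heap), in MB
results = {
    fraction: (
        (REQUESTED_HEAP_MB - RESERVED_MB) * fraction,
        (REPORTED_HEAP_MB - RESERVED_MB) * fraction,
    )
    for fraction in (0.75, 0.6)  # Spark 1.6 default, Spark 2.x default
}

for fraction, (nominal, reported) in results.items():
    print(f"fraction={fraction}: {nominal:.0f}MB nominal, {reported:.0f}MB from reported heap")
```

With the Spark 2.x default of 0.6, the reported-heap value rounds to 110MB, in line with what the question shows; a different reported heap would shift the result accordingly.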

300MB is the reserved memory.

User memory = `(ExecutorMemory - 300MB) * (1 - spark.memory.fraction)`.

In your case `(500MB - 300MB) * 0.25 = 50MB` (or `(500MB - 300MB) * 0.4 = 80MB` with the Spark 2.0 default fraction of 0.6).
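Putting the pieces together, the whole executor heap splits into reserved, user, storage and execution memory. A minimal Python sketch: `heap_breakdown_mb` is a hypothetical helper, and the storage/execution split it shows is only the initial boundary given by `spark.memory.storageFraction` (default 0.5), which moves at runtime as the two regions borrow from each other.

```python
RESERVED_MB = 300  # fixed reserved memory


def heap_breakdown_mb(executor_mb: float, memory_fraction: float,
                      storage_fraction: float = 0.5) -> dict:
    """Split an executor heap (MB) into reserved, user, storage and execution."""
    usable = executor_mb - RESERVED_MB
    unified = usable * memory_fraction      # execution + storage
    storage = unified * storage_fraction    # initial storage share
    return {
        "reserved": RESERVED_MB,
        "user": usable * (1 - memory_fraction),
        "storage": storage,
        "execution": unified - storage,
    }


for fraction in (0.75, 0.6):  # Spark 1.6 and Spark 2.x defaults
    print(fraction, heap_breakdown_mb(500, fraction))
```

The four regions always add back up to the executor heap, which is a handy sanity check when comparing against the UI.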
