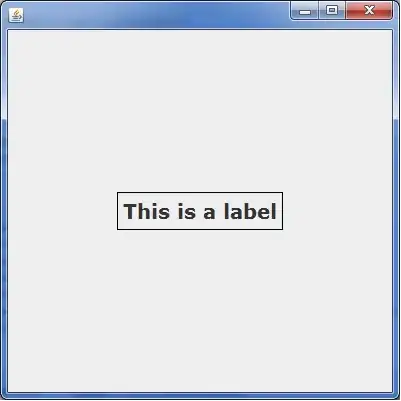

a pyspark.sql.DataFrame displays messy with DataFrame.show() - lines wrap instead of a scroll.

but displays with pandas.DataFrame.head

I tried these options

import IPython

IPython.auto_scroll_threshold = 9999

from IPython.core.interactiveshell import InteractiveShell

InteractiveShell.ast_node_interactivity = "all"

from IPython.display import display

but no luck. Although the scroll works when used within Atom editor with jupyter plugin: