As the title suggests, I'm processing several million tweets, and one of the data points is whether any of the words in each tweet appear in two different lists (each list contains about 500 words). It's understandably pretty slow, but I'll be doing this regularly, so I'd like to speed it up. Any thoughts on how I could do so?

    lista = ['word1', 'word2', ... 'word500']
    listb = ['word1', 'word2', ..., 'word500']

    def token_list_count(df):
        for i, t in df.iterrows():
            list_a = 0
            list_b = 0
            for tok in t['tokens']:
                if tok in lista: list_a += 1
                elif tok in listb: list_b += 1
            df.loc[i, 'token_count'] = int(len(t['tokens']))
            df.loc[i, 'lista_count'] = int(list_a)
            df.loc[i, 'listb_count'] = int(list_b)
            if i % 25000 == 0: print('25k more processed...')
        return df
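For comparison, here is a minimal sketch of one direction that might help. It assumes the two lists can be converted to sets (so each membership test no longer scans about 500 words) and that the counts can be computed in one pass and assigned as whole columns instead of through per-row `df.loc` writes. The word lists and the names `count_tokens` and `add_count_columns` are hypothetical stand-ins, not part of my actual code.

```python
# Hypothetical stand-ins for the two ~500-word lists.
lista = ['good', 'great', 'shared']
listb = ['bad', 'sad', 'shared']

# Sets give average constant-time membership tests,
# whereas `tok in some_list` scans the list element by element.
set_a = set(lista)
set_b = set(listb)

def count_tokens(tokens):
    """Return (token_count, lista_count, listb_count) for one token list."""
    in_a = 0
    in_b = 0
    for tok in tokens:
        # Same precedence as the original if/elif:
        # a token found in both lists is counted for lista only.
        if tok in set_a:
            in_a += 1
        elif tok in set_b:
            in_b += 1
    return len(tokens), in_a, in_b

def add_count_columns(df):
    """Compute all counts first, then assign each column in one step.

    Assumes df is a pandas DataFrame with a 'tokens' column of lists.
    """
    counts = [count_tokens(toks) for toks in df['tokens']]
    df['token_count'] = [c[0] for c in counts]
    df['lista_count'] = [c[1] for c in counts]
    df['listb_count'] = [c[2] for c in counts]
    return df
```

Calling `add_count_columns(df)` should produce the same three columns as the loop above, but I have not benchmarked it on the full data, so the actual speedup is untested.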

Edit:

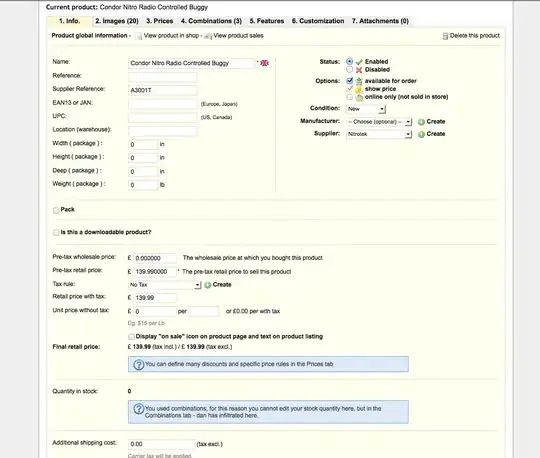

Input / Before:

Output / After: